TL;DR:

- Meta AI has introduced a new AI tool called the Segment Anything Model (SAM), which is capable of identifying objects in images, even if it has never seen them before.

- SAM represents a major advancement in the field of AI, allowing computers to understand and isolate elements in previously unseen images.

- SAM works by searching for related pixels in an image and identifying common components, and it has a general understanding of what objects are.

- Prior to SAM’s development, segmentation relied on either manually defined masks or complex automated techniques that demanded significant technical expertise and computing resources.

- SAM integrates both approaches in a fully automated system, leveraging over 1 billion masks to recognize new objects, making it easier for practitioners to use.

- SAM has been referred to as “Photoshop’s ‘Magic Wand’ tool on steroids” and has potential uses in AR/VR, movie creation, gaming, and presentations.

- A working model of SAM is available online, allowing users to select or upload images and use SAM to outline or identify objects in the image.

- Similar technology has been used for photo tagging, moderation, and disallowed content tagging on Facebook and Instagram, as well as generating recommended posts.

Main AI News:

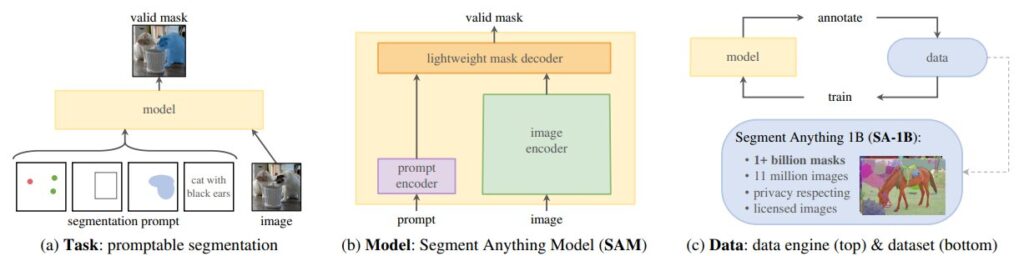

Meta AI is making waves with its latest innovation, the Segment Anything Model (SAM), a cutting-edge tool capable of identifying objects in an image, even if it has never seen them before. This groundbreaking technology was introduced in a recent paper on the arXiv preprint server.

SAM represents a major step forward in one of the most challenging areas of AI development: enabling computers to understand and isolate elements in an image that they have not seen before. This concept, described by former National Security Commission on Artificial Intelligence chair Robert O. Work as “finding the needle in the haystack,” is now a reality with SAM.

The tool works by searching for related pixels in an image and identifying common components that make up all parts of the picture. SAM has learned a general understanding of what objects are and, as a result, can generate masks for any object in any image or video, even those it has not encountered during its training process.
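
To make the idea of a mask concrete, here is a toy sketch in plain Python of the classic approach of growing a region of related pixels outward from a starting point. It is not how SAM works internally, since SAM is a trained neural network. The `grow_region` function, the `tolerance` value, and the tiny grayscale grid are all illustrative assumptions, chosen only to show what a mask looks like as data.

```python
from collections import deque

def grow_region(image, seed, tolerance=10):
    """Return a binary mask of pixels connected to `seed` whose
    brightness is within `tolerance` of the seed pixel (toy example)."""
    rows, cols = len(image), len(image[0])
    seed_row, seed_col = seed
    seed_value = image[seed_row][seed_col]
    mask = [[0] * cols for _ in range(rows)]
    mask[seed_row][seed_col] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        # Visit the four direct neighbours of the current pixel.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    mask[nr][nc] = 1
                    queue.append((nr, nc))
    return mask

# A 4x5 grayscale "image": a bright object (about 200) on a dark background (about 20).
image = [
    [20, 22, 21, 19, 20],
    [21, 200, 205, 20, 22],
    [19, 198, 202, 201, 21],
    [20, 21, 20, 22, 19],
]

mask = grow_region(image, seed=(1, 1))
for row in mask:
    print(row)
```

The result is a grid of ones and zeros marking which pixels belong to the selected object. A simple rule like this breaks down on shadows, overlapping items, and irregular textures, which is where a learned model is expected to do better.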

The task of recognizing objects in an image is called segmentation, and it is something that humans do instinctively. We effortlessly recognize items on our office desks, such as smartphones, cables, computer screens, lamps, melting candy bars, and cups of coffee. However, without prior programming, a computer struggles to differentiate all the components in a two-dimensional image, especially when there are overlapping items, shadows, or irregular shapes.

In a recent blog post, Meta AI announced the successful deployment of SAM, marking a significant milestone in the development of AI technology.

Prior to SAM’s development, segmentation typically required a manual definition of masks. Automated segmentation techniques allowed for object detection, but according to Meta AI, this process was complex and required “thousands or even tens of thousands of examples” of objects, as well as significant technical expertise and computing resources to train the segmentation model.

SAM, however, integrates both approaches in a fully automated system, leveraging over 1 billion masks to recognize new objects. This ability to generalize means that practitioners will no longer need to collect their own data and fine-tune models for specific use cases, according to Meta AI’s blog.

One reviewer has referred to SAM as “Photoshop’s ‘Magic Wand’ tool on steroids,” highlighting its powerful capabilities. SAM can be activated through user clicks or text prompts, and Meta researchers have high hopes for its future use in the AR/VR space. By focusing on an object, users can outline, define, and “lift” it into a 3D image, making it a valuable tool for movie creation, gaming, and presentations.

A working model of SAM is available online, allowing users to select images from a gallery or upload their own photos. By tapping on the screen or drawing a rectangle around an object of interest, users can have SAM outline a nose, a face, or a whole body, or identify every object in an image. Although SAM has not yet been applied to Facebook, similar technology has been used for photo tagging, moderation, and disallowed content tagging on both Facebook and Instagram, as well as for generating recommended posts.
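
The click and rectangle prompts described above can be pictured with a small Python sketch. This is only a toy under stated assumptions: the candidate masks are hand-made rectangles rather than model output, and `rect_mask`, `masks_at_click`, and `best_mask_for_box` are hypothetical helpers, not part of any SAM API. It shows why a single click can be ambiguous (a point on the nose also lies on the face and the body) and how a rectangle narrows the choice.

```python
def rect_mask(top, left, bottom, right):
    """Hypothetical helper: a mask as the set of (row, col) pixels in a rectangle."""
    return {(r, c) for r in range(top, bottom + 1) for c in range(left, right + 1)}

# Hypothetical nested candidate masks on a 10x10 image.
candidates = {
    "nose": rect_mask(2, 4, 3, 5),
    "face": rect_mask(1, 3, 4, 6),
    "body": rect_mask(1, 2, 9, 7),
}

def masks_at_click(candidates, point):
    """Names of all candidate masks containing the clicked pixel, smallest first."""
    hits = [name for name, mask in candidates.items() if point in mask]
    return sorted(hits, key=lambda name: len(candidates[name]))

def best_mask_for_box(candidates, box):
    """Name of the candidate mask with the highest overlap (IoU) with the box."""
    box_pixels = rect_mask(*box)

    def iou(mask):
        # Intersection over union between a mask and the drawn rectangle.
        return len(mask & box_pixels) / len(mask | box_pixels)

    return max(candidates, key=lambda name: iou(candidates[name]))

click_hits = masks_at_click(candidates, (2, 4))        # click on the nose
box_choice = best_mask_for_box(candidates, (1, 3, 4, 6))  # box drawn around the face
print(click_hits)
print(box_choice)
```

In this sketch the click matches all three nested masks, while the rectangle singles out the face. In the real tool the model predicts the masks from the prompt itself; here they are fixed in advance purely for illustration.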

Conclusion:

The introduction of the Segment Anything Model (SAM) by Meta AI represents a significant advancement in the field of AI and image recognition technology. SAM’s ability to identify objects in images, even if it has never seen them before, is a major step forward in the challenge of enabling computers to understand and isolate elements in previously unseen images. This new tool is easier to use and more flexible than prior approaches, as it combines the manual and automated approaches in a single system. SAM has a wide range of potential applications, from AR/VR to movie creation, gaming, and presentations. With its powerful capabilities, SAM is poised to have a significant impact on the market and the future of AI technology.