- Emo, a robot developed by researchers at Columbia Engineering, can anticipate and replicate human facial expressions in real-time interaction.

- Led by Hod Lipson, the team addressed challenges in nonverbal communication by equipping Emo with 26 actuators and high-resolution cameras.

- Emo’s AI models analyze subtle facial changes to predict expressions and generate corresponding motor commands.

- Through self-modeling, the robot learns how its own motor commands shape its face, which lets it mimic human expressions; the team says this improves interaction quality and builds trust.

- Future goals include integrating verbal communication to further enhance Emo’s capabilities.

Main AI News:

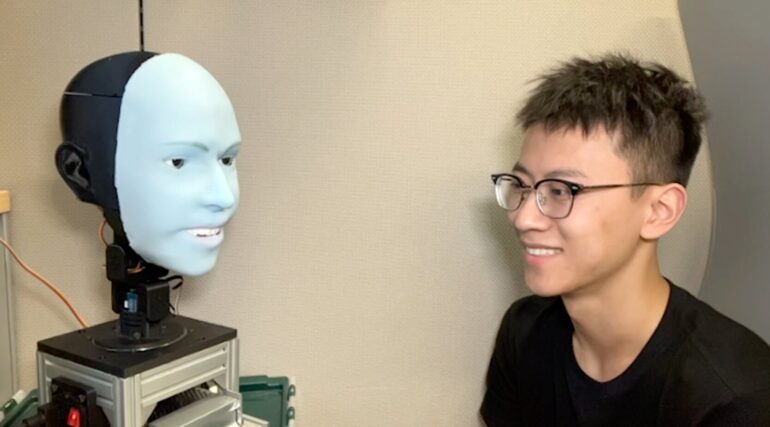

Robotic facial interaction has reached a new level: a team of researchers at Columbia Engineering has unveiled Emo, a robot that can not only make facial expressions but also anticipate and replicate them in real time while interacting with humans. The study, published in Science Robotics, marks a significant step forward in human-robot interaction (HRI) technology.

Led by Hod Lipson, a prominent figure in artificial intelligence (AI) and robotics, the team at the Creative Machines Lab has spent more than five years tackling the challenges of nonverbal communication in robots. While robots’ verbal communication has advanced, facial expression has remained a hurdle. Emo addresses this gap by predicting a person’s facial expression milliseconds before it occurs and producing the same expression at the same moment (co-expression).

The development of Emo posed two primary challenges: designing a mechanically versatile robotic face and ensuring that the generated expressions are natural and timely. To overcome these hurdles, the team equipped Emo with 26 actuators and a soft silicone skin, allowing for a wide range of expressions and easy customization. High-resolution cameras integrated into Emo’s eyes enable essential nonverbal cues such as eye contact.

The key to Emo’s ability lies in its AI models, which analyze subtle changes in a person’s face to predict upcoming expressions and generate the corresponding motor commands. Through self-modeling, Emo learns how its own motor commands shape its face by observing itself, much as humans practice expressions in front of a mirror; this is what allows it to mimic the expressions it sees.
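The article describes this pipeline only at a high level, so the following is a minimal Python sketch of the general idea under loudly stated assumptions, not Emo’s actual implementation (the source does not describe the models’ architecture). The "face" is a hypothetical random linear map from 26 actuator commands to 20 landmark coordinates; `motor_babble`, `fit_inverse_model`, `predict_ahead`, and `commands_for` are illustrative names; and naive linear extrapolation stands in for the learned expression predictor. The robot issues random commands and watches the result (the "mirror" step), fits a least-squares inverse model from landmarks back to commands, and then converts a predicted expression into motor commands.

```python
import numpy as np

# Hypothetical toy face: an unknown linear map from 26 actuator commands
# to 20 landmark coordinates. A real soft-skinned face is nonlinear.
N_ACTUATORS = 26
N_FEATURES = 20

_face_rng = np.random.default_rng(0)
_TRUE_FACE = _face_rng.normal(size=(N_FEATURES, N_ACTUATORS))


def observe_face(commands):
    """Simulate the landmarks a camera would see for one command vector."""
    return _TRUE_FACE @ commands


def motor_babble(n_samples, rng):
    """Self-modeling data: issue random commands in [0, 1], record landmarks."""
    commands = rng.uniform(0.0, 1.0, size=(n_samples, N_ACTUATORS))
    landmarks = commands @ _TRUE_FACE.T  # same as observe_face on each row
    return commands, landmarks


def fit_inverse_model(commands, landmarks):
    """Least-squares inverse model mapping landmarks -> motor commands."""
    weights, *_ = np.linalg.lstsq(landmarks, commands, rcond=None)
    return weights  # shape (N_FEATURES, N_ACTUATORS)


def predict_ahead(history, steps_ahead):
    """Naive linear extrapolation of landmark trajectories (an assumption,
    standing in for a learned predictor). history: (T, N_FEATURES), T >= 2,
    oldest frame first."""
    velocity = history[-1] - history[-2]
    return history[-1] + steps_ahead * velocity


def commands_for(target_landmarks, weights):
    """Turn a desired set of landmarks into motor commands.
    A real robot would also clip these to the actuators' limits."""
    return target_landmarks @ weights


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    cmds, marks = motor_babble(500, rng)
    inverse = fit_inverse_model(cmds, marks)

    # Hypothetical observed face moving toward an expression, assumed to
    # share the robot's landmark space.
    goal = rng.uniform(0.0, 1.0, size=N_ACTUATORS)
    frames = np.array([observe_face(goal * t) for t in (0.2, 0.4)])
    predicted = predict_ahead(frames, steps_ahead=3)  # extrapolates to t = 1.0
    command = commands_for(predicted, inverse)
    err = np.abs(observe_face(command) - predicted).max()
    print(f"max landmark error: {err:.2e}")
```

A linear least-squares inverse is used here only because it fits in a few lines; for a nonlinear silicone face, a learned nonlinear model such as a neural network would likely be the more natural choice.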

Lead author Yuhang Hu emphasizes the significance of this advancement for HRI, noting that Emo’s ability to anticipate and mirror human facial expressions improves interaction quality and builds trust between humans and robots. Looking ahead, the team aims to add verbal communication to Emo, further extending its capabilities.

Despite the technology’s promising applications, Lipson stresses the importance of ethical considerations: as robots mimic human behavior more closely, developers and users must exercise prudence. At the same time, he underscores the exciting potential of this advancement, envisioning a future where interacting with robots feels as natural as conversing with a friend.

Conclusion:

The development of Emo represents a significant advancement in human-robot interaction technology. It has the potential to transform markets such as healthcare, customer service, and education by enabling robots that interact with humans naturally and empathetically. As Emo evolves and gains additional features, such as verbal communication, its market impact could grow, driving demand for more advanced robotic solutions tailored to diverse applications. Businesses should closely monitor these developments and consider how they can leverage such technology to enhance their products and services.