- Google AI introduces MathWriting, a dataset revolutionizing handwritten mathematical expression (HME) recognition.

- MathWriting comprises 230k human-written and 400k synthetic samples, surpassing previous datasets like IM2LATEX-100K.

- The dataset facilitates both online and offline HME recognition and is compatible with formats like InkML and existing datasets such as CROHME.

- MathWriting offers a broader array of tokens, encompassing 254 distinct entities, enabling representation across diverse scientific fields.

- Comparative analysis shows MathWriting’s superiority over existing datasets, with significantly more samples and distinct labels.

- The dataset is released under the Creative Commons license, employing normalized LATEX notation as ground truth for training and evaluation.

Main AI News:

In recent years, there has been remarkable progress in online text recognition models driven by sophisticated model architectures and expansive datasets. However, the recognition of mathematical expressions (MEs), a task of greater complexity, has not received commensurate attention. Unlike plain text, MEs possess a structured two-dimensional format where the spatial arrangement of symbols holds paramount importance. Handwritten MEs (HMEs) present even more formidable challenges due to inherent ambiguities and the requirement for specialized hardware. Acquiring handwritten samples proves to be costly as it necessitates human involvement, further exacerbated by the need for dedicated input devices such as touchscreens or digital pens. Consequently, enhancing ME recognition necessitates tailored strategies distinct from those employed in text recognition.

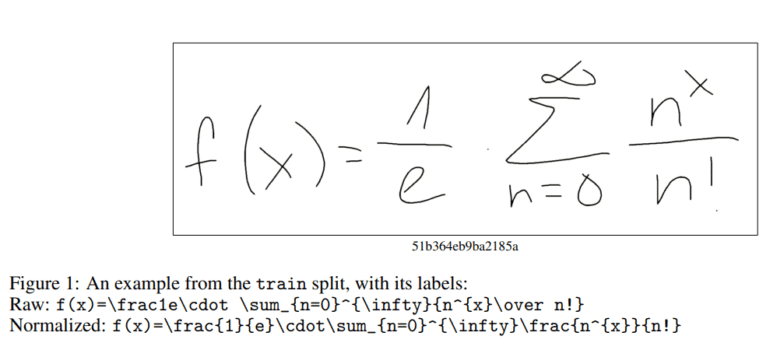

Introducing MathWriting, a groundbreaking dataset for online HME developed by Google Research. Comprising a staggering 230,000 human-written samples and 400,000 synthetic samples, MathWriting eclipses previous offline HME datasets like IM2LATEX-100K. This resource facilitates both online and offline HME recognition, serving as a cornerstone for research endeavors by furnishing an abundance of data. MathWriting harmonizes seamlessly with other online datasets, such as CROHME and Detexify, which are presented in the versatile InkML format. The process of rasterizing inks significantly augments offline HME datasets. This initiative sets a new standard for ME recognition, featuring standardized ground truth expressions for simplified training and rigorous evaluation, complemented by comprehensive code examples available on GitHub for effortless integration.

Comparative analysis between MathWriting and CROHME23 reveals MathWriting’s supremacy, boasting nearly 3.9 times more samples and 4.5 times more distinct labels post normalization. While there exists a substantial overlap of labels between the two datasets (47,000), the majority are dataset-specific. Noteworthy is MathWriting’s abundance of human-written inks in contrast to CROHME23. Furthermore, MathWriting encompasses a broader spectrum of tokens, comprising 254 distinct entities, including Latin capitals, a substantial portion of the Greek alphabet, and matrices—enabling representation across diverse scientific domains such as quantum mechanics, differential calculus, and linear algebra.

The MathWriting dataset encompasses 253,000 human-written expressions and 6,000 isolated symbols meticulously curated for training, validation, and testing, alongside 396,000 synthetic expressions. Encompassing 244 mathematical symbols and ten syntactic tokens, this dataset, released under the Creative Commons license, adopts normalized LATEX notation as ground truth. The benchmark for handwritten mathematical expression recognition leverages MathWriting’s test split and employs the character error rate (CER) metric. Various recognition models, including CTC Transformer and OCR, underscore the dataset’s efficacy. The data collection process engaged human contributors who meticulously transcribed rendered expressions through an Android application, followed by minimal post-processing and label normalization to augment model performance.

In contrast to the CROHME23 dataset, the MathWriting dataset provides a comprehensive understanding of handwritten mathematical expressions. Armed with extensive label and ink statistics, MathWriting furnishes invaluable insights into the diversity of expressions and writing styles. It underscores the pivotal role of synthetic data in enriching model diversity while shedding light on challenges like device discrepancies and noise sources such as stray strokes and inaccuracies in ground truth. Despite inherent recognition hurdles, MathWriting emerges as an indispensable resource for training and evaluating handwriting recognition models, offering invaluable glimpses into real-world recognition scenarios.

Conclusion:

Google’s MathWriting dataset represents a significant advancement in the field of handwritten mathematical expression recognition. Its extensive human-written and synthetic data integration, compatibility with existing datasets, and standardized ground truth notation offer researchers a comprehensive resource for training and evaluating recognition models. This development underscores the increasing importance of accurate mathematical expression recognition in various industries, from education to finance, driving the need for more sophisticated AI solutions in the market.