- Large Language Models (LLMs) excel in NLP tasks but face challenges in complex reasoning.

- The AoR framework evaluates reasoning chains rather than counting answers, improving the precision of LLM decision-making.

- Dynamic sampling adjusts the number of sampled reasoning chains to task complexity, balancing accuracy against computational cost.

- Experimental results demonstrate AoR’s superiority over traditional ensemble methods in various reasoning tasks.

- AoR adapts well across different LLM architectures and reduces computational overhead by 20%.

Main AI News:

Recent years have seen rapid progress in Large Language Models (LLMs), which have transformed Natural Language Processing (NLP). These models understand and generate human-like text and are now used in applications ranging from machine translation to complex reasoning. A persistent challenge remains, however: the gap between LLMs' reasoning abilities and human-level expertise. The gap is widest on tasks that require nuanced reasoning, where models often fail to reach the correct answer consistently, in part because majority voting over sampled answers picks the most frequent answer rather than the best-reasoned one.

Existing strategies such as Chain-of-Thought (CoT) prompting and Complexity-based prompting have tried to close this gap, respectively by eliciting intermediate reasoning steps and by filtering reasoning chains according to their complexity. However, these methods do not reliably deliver consistent accuracy. To address this, researchers from Fudan University, the National University of Singapore, and the Midea AI Research Center have introduced a hierarchical reasoning aggregation framework called AoR (Aggregation of Reasoning).
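As commonly described, complexity-based filtering samples several reasoning chains, keeps only the K chains with the most reasoning steps, and takes a majority vote over those. The sketch below illustrates that filter-then-vote idea under simple assumptions: a chain is a list of step strings plus a final answer, and the `Chain` type, `complexity_vote` function, and sample data are hypothetical, chosen only to show how filtering can change the voted answer.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chain:
    """A sampled reasoning chain: its intermediate steps and final answer (hypothetical type)."""
    steps: list[str]
    answer: str


def complexity_vote(chains: list[Chain], k: int) -> str:
    """Keep the k chains with the most steps, then majority-vote over their answers."""
    # Longest (most complex) chains first; sorted() stays stable, so ties keep input order.
    most_complex = sorted(chains, key=lambda c: len(c.steps), reverse=True)[:k]
    counts = Counter(c.answer for c in most_complex)
    # most_common(1) returns [(answer, count)]; equal counts keep first-seen order.
    return counts.most_common(1)[0][0]


# Hypothetical chains: short chains agree on "12", longer chains agree on "15".
chains = [
    Chain(["a"], "12"),
    Chain(["a", "b"], "12"),
    Chain(["a", "b", "c"], "15"),
    Chain(["a", "b", "c", "d"], "15"),
    Chain(["a", "b"], "12"),
]
print(complexity_vote(chains, k=2))  # 15
print(complexity_vote(chains, k=5))  # 12 (plain majority over all chains)
```

Filtering only changes which chains get a vote; the final decision is still made by answer frequency.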

Unlike traditional ensemble methods that select an answer by its frequency, AoR evaluates the reasoning chains themselves, improving the precision and reliability of LLM decision-making. A key component of AoR is dynamic sampling, which adjusts the number of reasoning chains to the complexity of the task, improving accuracy while limiting computational overhead.
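To make the contrast concrete, frequency-based aggregation in the style of Self-Consistency returns the most common final answer. In the hypothetical example below (the answers are invented for illustration), three of five sampled chains agree on a wrong answer, so majority voting discards the correct minority answer. A method that scores the reasoning itself, as AoR does, can in principle still select the minority answer if its chains are judged more sound.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Frequency-based aggregation: return the most common final answer."""
    return Counter(answers).most_common(1)[0][0]


# Hypothetical final answers from five sampled chains; suppose "42" is correct.
sampled = ["40", "40", "42", "40", "42"]
print(majority_vote(sampled))  # 40 (the correct minority answer "42" is discarded)
```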

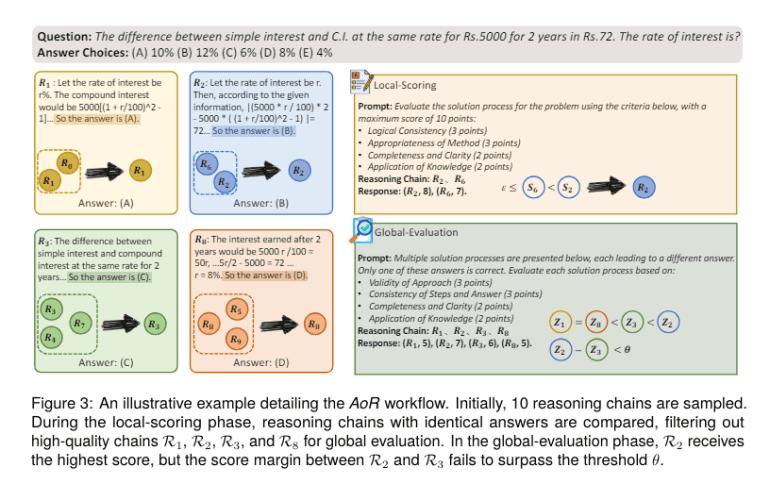

The AoR framework works in two phases: local scoring and global evaluation. In the local scoring phase, reasoning chains that reach the same answer are compared and scored on the soundness and appropriateness of their steps. The top-scoring chains then advance to the global evaluation phase, where they are assessed for logical coherence and for consistency between their reasoning and their answers. This ensures that the final answer comes from the most logically sound reasoning chain.
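The two phases can be sketched as follows. This is a minimal illustration, not the authors' implementation: `local_score` and `global_score` are hypothetical callables standing in for whatever evaluator produces the scores (in practice a model-based judgment), `top_k` is an assumed per-answer selection size, and the paper's exact selection rules and scoring criteria are not reproduced. The scores in the usage example are invented.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class ReasoningChain:
    text: str    # the reasoning steps, as text (hypothetical representation)
    answer: str  # the final answer the chain arrives at


def aggregate(
    chains: list[ReasoningChain],
    local_score: Callable[[ReasoningChain], float],
    global_score: Callable[[ReasoningChain], float],
    top_k: int = 1,
) -> str:
    """Two-phase sketch: local scoring within answer groups, then global evaluation."""
    # Phase 1 (local scoring): group chains that reach the same answer and keep
    # the top_k chains of each group by local_score (soundness of their steps).
    groups: dict[str, list[ReasoningChain]] = defaultdict(list)
    for chain in chains:
        groups[chain.answer].append(chain)
    representatives = [
        c
        for group in groups.values()
        for c in sorted(group, key=local_score, reverse=True)[:top_k]
    ]
    # Phase 2 (global evaluation): compare the surviving chains across answers
    # and return the answer of the chain with the highest global_score.
    best = max(representatives, key=global_score)
    return best.answer


# Hypothetical chains and scores standing in for evaluator judgments.
chains = [
    ReasoningChain("chain A", "40"), ReasoningChain("chain B", "40"),
    ReasoningChain("chain C", "40"), ReasoningChain("chain D", "42"),
    ReasoningChain("chain E", "42"),
]
local_scores = {"chain A": 5, "chain B": 4, "chain C": 3, "chain D": 9, "chain E": 6}
global_scores = {"chain A": 6, "chain B": 5, "chain C": 4, "chain D": 8, "chain E": 7}
print(aggregate(chains, lambda c: local_scores[c.text], lambda c: global_scores[c.text]))  # 42
```

Here "42" wins even though three of the five chains answer "40", because the decision rests on the scored chains rather than on answer counts.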

Experimental results show that AoR outperforms traditional ensemble methods on complex reasoning tasks. On the AQuA dataset, for instance, AoR improved accuracy by up to 7.2% over the Self-Consistency method. AoR also generalizes across different LLMs, including GPT-3.5-Turbo-0301, and reaches a higher performance ceiling than the baselines. Its dynamic sampling balances performance against computational cost, reducing overhead by 20% while maintaining high accuracy.

In mathematical and commonsense reasoning tasks, AoR outperformed baseline approaches across multiple datasets. Under CoT prompting, AoR achieved an average improvement of 2.37% over the DiVeRSe method and 3.09% over Self-Consistency, with notable gains on datasets such as GSM8K and MultiArith. These results indicate that AoR improves reasoning performance across diverse domains.

Dynamic sampling is central to AoR's results: it adjusts how many reasoning chains are sampled according to the confidence of the current decision. This concentrates computation on difficult queries, where more samples are needed to reach an accurate result. On the AQuA dataset, for example, dynamic sampling substantially reduced the number of samples required while preserving accuracy.
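A confidence-driven sampling loop might look like the sketch below. The article does not specify the confidence measure, so this assumes one: the gap between the scores of the best and second-best answers, where more chains are sampled while the gap stays below a margin. The parameters `initial`, `step`, `max_samples`, and `margin`, the `(answer, score)` chain representation, and the scores in the example are all hypothetical.

```python
import itertools
from typing import Callable

ScoredChain = tuple[str, float]  # (final answer, evaluator score), hypothetical


def best_score_per_answer(chains: list[ScoredChain]) -> dict[str, float]:
    """Score each answer by its best-scoring chain (an assumed aggregation rule)."""
    scores: dict[str, float] = {}
    for answer, score in chains:
        scores[answer] = max(score, scores.get(answer, float("-inf")))
    return scores


def dynamic_sample(
    sample_batch: Callable[[int], list[ScoredChain]],
    score_answers: Callable[[list[ScoredChain]], dict[str, float]],
    initial: int = 5,
    step: int = 5,
    max_samples: int = 20,
    margin: float = 1.0,
) -> tuple[str, int]:
    """Sample more chains only while the top two answers are too close to call."""
    chains = sample_batch(initial)
    while True:
        scores = score_answers(chains)
        ranked = sorted(scores.values(), reverse=True)
        # Confidence proxy: gap between the best and runner-up answer scores.
        gap = ranked[0] - ranked[1] if len(ranked) > 1 else float("inf")
        if gap >= margin or len(chains) >= max_samples:
            break
        extra = sample_batch(min(step, max_samples - len(chains)))
        if not extra:  # sampler exhausted: stop with the current best answer
            break
        chains += extra
    return max(scores, key=scores.get), len(chains)


# Hypothetical stream of scored chains; a real system would query the LLM here.
samples = iter([
    ("40", 6.0), ("42", 6.5), ("40", 5.0), ("42", 4.0), ("40", 5.5),
    ("42", 9.0), ("40", 5.0), ("42", 7.0), ("40", 4.5), ("42", 8.0),
])


def sample_batch(n: int) -> list[ScoredChain]:
    return list(itertools.islice(samples, n))


answer, used = dynamic_sample(sample_batch, best_score_per_answer)
print(answer, used)  # 42 10
```

After the first five chains the two answers are only 0.5 apart, so the loop samples five more; the larger gap then ends sampling well before `max_samples`. An easy query whose first batch is already decisive would stop after `initial` chains, which is where the savings come from.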

Conclusion:

The AoR framework marks a significant advance in LLM decision-making, particularly on complex reasoning tasks. It improves precision and reliability while also making more efficient use of computation, which is promising for businesses that rely on NLP technologies. As AoR continues to outperform traditional methods, its adoption is likely to shape the landscape of NLP applications, driving greater efficiency and accuracy across industries.