- Foundation models pivotal in AI’s impact on economy and society.

- FMTI v1.1 evaluates transparency across 14 developers using 100 indicators.

- Collaboration between academia and industry enhances completeness of transparency reports.

- Scores reveal room for improvement, with mean score at 57.93 out of 100.

- Societal impact underscores importance of transparency efforts.

Main AI News:

Foundation models have become central to AI’s impact on the economy and society. Transparency is a key concern in the discussion around these models: it supports accountability, healthy competition, and understanding, particularly of the datasets on which the models are built. Governments are responding with regulatory measures such as the EU AI Act and the proposed US AI Foundation Model Transparency Act, which aim to raise transparency standards across the AI ecosystem.

The Foundation Model Transparency Index (FMTI) was introduced in October 2023 as a tool for evaluating this transparency. Its first release, FMTI v1.0, assessed ten major developers, including OpenAI, Google, and Meta, against a framework of 100 indicators. The results showed significant opacity: the average score was just 37 out of 100, and the developers diverged considerably in what they disclosed.

FMTI v1.1 builds on its predecessor. This iteration, released after six months of evaluation, retains the original set of 100 transparency indicators. Researchers from Stanford University, MIT, and Princeton University contributed to the effort.

Developer engagement was central to FMTI v1.1, with fourteen developers taking part. Through self-reporting, they supplied new information that improved the completeness, clarity, and scalability of transparency disclosures. This collaborative approach also surfaced previously undisclosed information, covering an average of 16.6 indicators per developer.

The methodology of FMTI v1.1 has four stages: indicator selection, developer engagement, information collation, and scoring. The indicators focus on upstream resources, the model itself, and downstream applications, and developers were asked to submit detailed transparency reports for their flagship models. The shift to direct developer submissions was intended to make the disclosed information more complete and accurate.

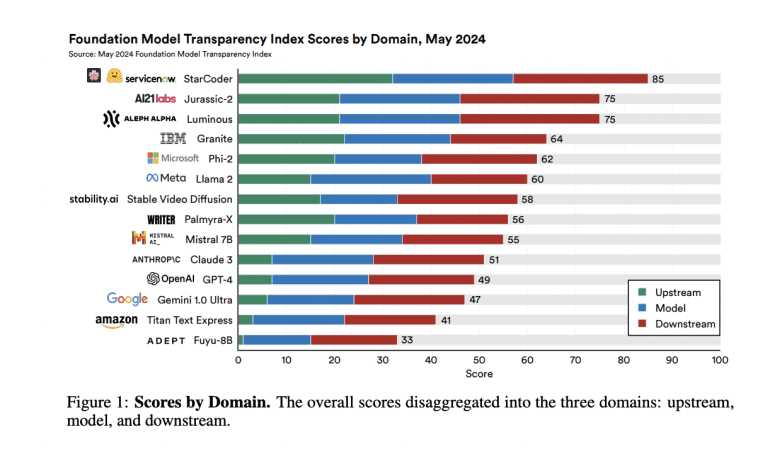

FMTI v1.1 concluded with an evaluation of transparency across multiple domains. Scores varied widely, and eleven of the fourteen developers scored below 65, leaving substantial room for improvement. The mean and median scores were 57.93 and 57 out of 100, respectively, with a standard deviation of 13.98.
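For readers who want to see how summary figures of this kind are derived, here is a minimal Python sketch. The developer names and scores are hypothetical placeholders, not the published FMTI v1.1 results, and the `summarize` helper is illustrative only. The sketch also assumes the sample standard deviation; the article does not say whether the reported 13.98 is a sample or a population figure.

```python
from statistics import mean, median, stdev

# Hypothetical placeholder scores out of 100.
# These are NOT the published FMTI v1.1 results.
scores = {
    "developer_a": 40,
    "developer_b": 55,
    "developer_c": 60,
    "developer_d": 85,
}


def summarize(scores, threshold=65):
    """Return summary statistics for a mapping of developer -> score."""
    values = list(scores.values())
    return {
        "mean": round(mean(values), 2),
        "median": median(values),
        # Sample standard deviation (n - 1 denominator); an assumption,
        # since the source does not state which variant it reports.
        "stdev": round(stdev(values), 2),
        # Number of developers scoring strictly below the threshold.
        "below_threshold": sum(1 for v in values if v < threshold),
    }


summary = summarize(scores)
print(summary)
```

Swapping `stdev` for `statistics.pstdev` would give the population standard deviation instead, which is slightly smaller for a group this size.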

The societal significance of foundation models makes ongoing transparency efforts important. The FMTI serves as a barometer of progress, giving stakeholders information to support their decisions. By encouraging transparency and accountability, the Index contributes to a more informed, equitable, and responsible AI ecosystem.

Conclusion:

The findings from FMTI v1.1 underscore the ongoing imperative for transparency within the foundation model ecosystem. While progress has been made, there remains substantial room for improvement across the evaluated entities. For stakeholders in the AI market, these insights emphasize the growing importance of transparency as a cornerstone for fostering trust, driving innovation, and ensuring ethical AI development practices.