- Alibaba Group introduces AlphaMath, which uses Monte Carlo Tree Search (MCTS) to automatically generate and refine training data for large language models (LLMs).

- Traditional methods in computational mathematics struggle with logical and numerical errors in multi-step mathematical problem-solving.

- AlphaMath integrates MCTS with policy and value models, autonomously producing high-quality training data without manual annotation.

- Enhanced models demonstrate over 90% accuracy on complex problem sets, marking a significant advancement in autonomous mathematical reasoning.

- The integration of MCTS in AlphaMath reduces the need for manual data annotation while maintaining high levels of accuracy and reliability.

Main AI News:

Researchers continue to look for ways to strengthen the mathematical reasoning of large language models (LLMs). These models are used across many domains, from data analytics to artificial intelligence research, where accuracy in solving mathematical problems is essential. Improving their ability to carry out complex computations and logical deductions on their own is an important step for technological and scientific progress.

A key obstacle is the logical and numerical errors LLMs frequently make when working through multi-step mathematical problems. Conventional approaches often rely on code interpreters to handle the numerical computation. However, an interpreter addresses only the arithmetic; these approaches still fall short when it comes to correcting the logical errors that arise during step-by-step problem solving.

Notable prior work includes prompting frameworks such as Chain of Thought (CoT) and Program of Thought (PoT), with PoT and Program-Aided Language models (PAL) delegating computation to an external code interpreter. Approaches such as ReAct, DeepSeekMath, and MARIO also integrate coding environments to improve the accuracy of mathematical reasoning. In addition, supervised fine-tuning models such as MAmmoTH and MathCoder use annotated datasets to improve LLM capabilities, with a focus on detailed, step-by-step problem solving. However, these methods are often expensive and depend on laborious manual dataset curation.
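To make the interpreter-based idea concrete, here is a minimal sketch of a Program of Thought style loop: the model writes a short program and an interpreter computes the result. The `generated_program` string is a hand-written stand-in for model output, and `run_program` and the `answer` variable convention are assumptions made for this illustration, not part of any framework named above.

```python
import math

# Hypothetical model output: a program that stores its final result in `answer`.
generated_program = """
# Problem: what is the sum of the first 100 positive multiples of 3?
terms = [3 * k for k in range(1, 101)]
answer = sum(terms)
"""


def run_program(source: str) -> object:
    """Execute model-written code and return its `answer` variable.

    Note: exec() on untrusted text is unsafe; a real system would sandbox this.
    """
    namespace = {"math": math}
    exec(source, namespace)
    return namespace["answer"]


result = run_program(generated_program)
print(result)
```

The interpreter guarantees the arithmetic, but it cannot tell whether the program encodes the right reasoning. If the model had written `range(0, 100)`, the code would still run and return a wrong answer.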

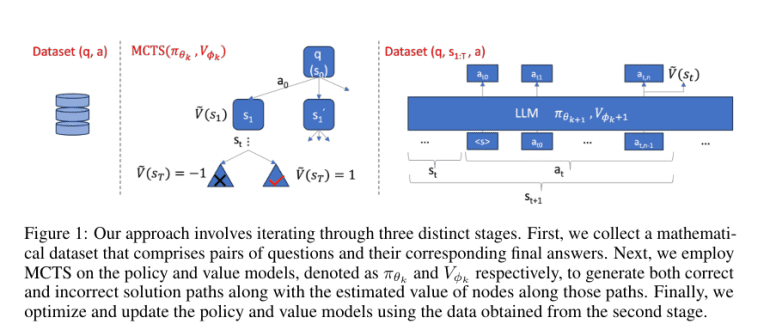

Alibaba Group researchers have introduced a method called AlphaMath, which uses Monte Carlo Tree Search (MCTS) to automate the generation and refinement of training data for LLMs in mathematical reasoning. The approach removes the need for manual data annotation, a common bottleneck in traditional model training, by combining pre-trained LLMs with tree search so that the system generates and improves its own training data.

AlphaMath combines MCTS with a policy model and a value model. Initially, these models draw on a dataset consisting solely of questions and their final answers, with no intermediate solution steps. The MCTS algorithm progressively proposes and evaluates candidate solution paths, refining them based on the estimates produced by the value model. This iterative process both yields high-quality training data and improves the model’s problem-solving strategies. Training and evaluation are carried out on the MATH dataset, which is known for its difficulty and therefore tests the models under demanding conditions.
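The search loop described above can be sketched on a toy problem. This is not AlphaMath's implementation: the arithmetic "steps", the uniform `policy`, the distance-based `value`, and the PUCT constant are all assumptions chosen to keep the example small. In the real system both models are LLM-based. Only the final answer is known, and paths that reach it are collected as candidate training data.

```python
import math

# Toy "reasoning steps": each action transforms the current intermediate value.
ACTIONS = {"+3": lambda x: x + 3, "*2": lambda x: x * 2, "-1": lambda x: x - 1}
MAX_STEPS = 4  # assumed cap on solution length


class Node:
    def __init__(self, state, steps, parent=None, prior=1.0):
        self.state = state    # current intermediate value
        self.steps = steps    # tuple of action names taken so far
        self.parent = parent
        self.prior = prior    # policy probability of the step leading here
        self.children = []
        self.visits = 0
        self.value_sum = 0.0

    def q(self):
        return self.value_sum / self.visits if self.visits else 0.0


def policy(state):
    """Stand-in policy model: a uniform prior over the next step."""
    return {name: 1.0 / len(ACTIONS) for name in ACTIONS}


def value(state, target):
    """Stand-in value model: states closer to the target score higher, in [-1, 1]."""
    return 1.0 - 2.0 * min(abs(target - state) / (abs(target) + 1), 1.0)


def select(node, c_puct=1.5):
    """Descend to a leaf, choosing the child with the best PUCT score each time."""
    while node.children:
        parent = node
        node = max(
            parent.children,
            key=lambda ch: ch.q()
            + c_puct * ch.prior * math.sqrt(parent.visits) / (1 + ch.visits),
        )
    return node


def search(start, target, simulations=200):
    root = Node(start, ())
    solutions = set()
    for _ in range(simulations):
        leaf = select(root)
        if leaf.state == target or len(leaf.steps) >= MAX_STEPS:
            # Terminal: the reward comes from checking against the known final answer.
            reward = 1.0 if leaf.state == target else -1.0
            if reward > 0:
                solutions.add(leaf.steps)
        else:
            # Expand with policy priors, then score the leaf with the value model.
            for name, p in policy(leaf.state).items():
                child = Node(ACTIONS[name](leaf.state), leaf.steps + (name,), leaf, p)
                leaf.children.append(child)
            reward = value(leaf.state, target)
        # Backpropagate the reward along the path to the root.
        node = leaf
        while node is not None:
            node.visits += 1
            node.value_sum += reward
            node = node.parent
    return root, solutions


root, solutions = search(start=2, target=13)
for steps in sorted(solutions):
    print(" ".join(steps))
```

Each path in `solutions` is a step-by-step derivation that was never annotated by hand, and the averaged `q()` values along the tree could serve as training targets for a value model. That is the sense in which search over question and answer pairs can stand in for manual annotation.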

Applying MCTS in AlphaMath has produced notable gains in model performance on the MATH dataset. Specifically, the enhanced models reportedly achieved a solution accuracy above 90% on complex problem sets, an improvement over previously reported baseline accuracy. These results indicate a substantial step forward in the model’s ability to solve complex mathematical problems autonomously with few errors, and they support the claim that MCTS can reduce the need for manual data annotation while maintaining high accuracy and reliability in mathematical reasoning tasks.

Conclusion:

The introduction of AlphaMath by Alibaba Group marks a significant advance in mathematical reasoning for large language models. By leveraging Monte Carlo Tree Search, AlphaMath addresses critical challenges such as logical and numerical errors while streamlining data generation and refinement. This innovation not only improves model performance but also reduces reliance on manual data annotation, potentially lowering costs and improving efficiency in industries that depend on mathematical problem solving.