TL;DR:

- AMD has launched its Instinct MI300X GPUs, designed for AI and HPC applications.

- The MI300X is the GPU-only sibling of the MI300A, the data center APU that combines x86 CPU cores with CDNA 3-based compute.

- LaminiAI CEO Sharon Zhou confirms the deployment of servers equipped with up to eight MI300X accelerators.

- AMD claims the MI300X is the fastest AI chip, ahead of Nvidia’s H100 and upcoming H200 GPUs.

- MI300X features 153 billion transistors, 304 GPU compute units, 192GB of HBM3 memory, and 5.3 TB/s memory bandwidth.

- Lisa Su, AMD’s CEO, highlights MI300X’s superior performance, flexibility, memory capacity, and bandwidth.

Main AI News:

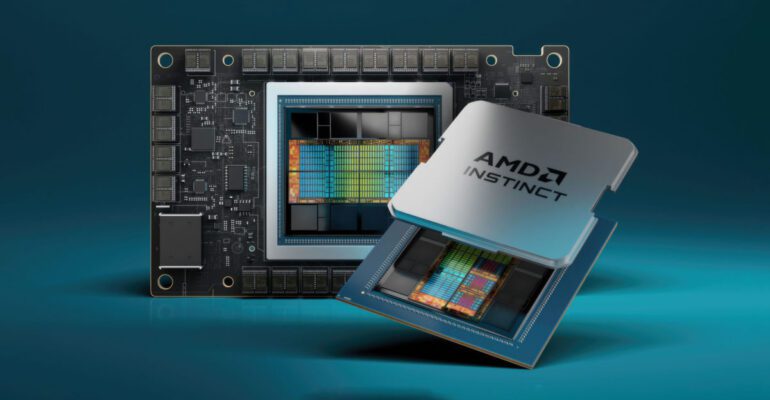

AMD’s Instinct MI300X GPUs, designed for AI and high-performance computing (HPC) applications, have begun shipping, as reported by Tom’s Hardware. The MI300X is a sibling of AMD’s Instinct MI300A, the first data center accelerated processing unit (APU) to combine general-purpose x86 CPU cores with CDNA 3-based processors tailored for AI and HPC tasks. The MI300X itself is a GPU-only accelerator without x86 cores.

According to Sharon Zhou, CEO of LaminiAI, servers with up to eight AMD MI300X accelerators are now operational. LaminiAI, which offers a self-serve platform for AI engineers working with LLMs, announced the deployment in a tweet. “The first AMD MI300X live in production,” Zhou announced. “Like freshly baked bread, 8x MI300X is online if you’re building on open LLMs and you’re blocked on compute, [let me know]. Everyone should have access to this wizard technology called LLMs; that is to say, the next batch of LaminiAI LLM pods are here.”

AMD bills the MI300X as the world’s fastest AI chip and asserts that it outpaces Nvidia’s H100 and even the forthcoming H200 GPUs. It is a standalone accelerator, whereas its sibling, the MI300A, powers the two-exaflop supercomputer El Capitan at Lawrence Livermore National Laboratory. The MI300X packs 153 billion transistors and uses 3D packaging with 5- and 6-nanometer processes. With 304 GPU compute units, 192GB of HBM3 memory, and 5.3 TB/s of memory bandwidth, it delivers 163.4 teraflops of peak FP32 performance and 81.7 teraflops of peak FP64 performance.

By comparison, Nvidia’s H100 SXM offers 68 teraflops of peak FP32 and 34 teraflops of peak FP64 performance, while the newer H100 NVL narrows the gap with 134 teraflops of FP32 and 68 teraflops of FP64 performance. Lisa Su, AMD’s CEO, emphasized the MI300X’s strengths at its launch, stating, “It’s the highest performance accelerator in the world for generative AI.” Su also discussed AMD’s design decisions, highlighting the MI300X’s flexibility, memory capacity, and bandwidth: by AMD’s figures, it offers 2.4 times the memory capacity and 1.6 times the memory bandwidth of competing parts.
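To put the quoted numbers side by side, here is a minimal Python sketch that computes the peak-throughput ratios between the chips and works backward from AMD’s 2.4x and 1.6x memory claims to the competitor figures they imply. It uses only the values cited in this article; the names `PEAK_TFLOPS`, `speedup`, and `implied_baseline` are hypothetical helpers introduced for illustration. These are vendor peak figures, not measured application performance.

```python
# Peak throughput in teraflops, as quoted in this article (vendor peak figures).
PEAK_TFLOPS = {
    "MI300X": {"fp32": 163.4, "fp64": 81.7},
    "H100 SXM": {"fp32": 68.0, "fp64": 34.0},
    "H100 NVL": {"fp32": 134.0, "fp64": 68.0},
}


def speedup(chip: str, baseline: str, precision: str) -> float:
    """Ratio of peak throughput: chip divided by baseline at one precision."""
    return PEAK_TFLOPS[chip][precision] / PEAK_TFLOPS[baseline][precision]


def implied_baseline(value: float, ratio: float) -> float:
    """Competitor figure implied by a claim of the form 'value is ratio times the competitor'."""
    return value / ratio


if __name__ == "__main__":
    for baseline in ("H100 SXM", "H100 NVL"):
        for precision in ("fp32", "fp64"):
            ratio = speedup("MI300X", baseline, precision)
            print(f"MI300X vs {baseline} ({precision}): {ratio:.2f}x")

    # Work backward from the quoted 2.4x capacity and 1.6x bandwidth claims.
    print(f"Implied competitor capacity: {implied_baseline(192, 2.4):.1f} GB")
    print(f"Implied competitor bandwidth: {implied_baseline(5.3, 1.6):.2f} TB/s")
```

On the article’s numbers, the MI300X’s peak advantage is about 2.4x over the H100 SXM at both precisions and about 1.2x over the H100 NVL, while the memory claims imply a competitor with roughly 80 GB of memory and about 3.3 TB/s of bandwidth.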

Conclusion:

The release of AMD’s Instinct MI300X GPUs marks a significant step in the AI and HPC market. With higher quoted peak FP32 and FP64 throughput than Nvidia’s H100, along with more memory capacity and bandwidth, the MI300X positions AMD to gain a stronger foothold in this rapidly evolving sector and could reshape the market for data center accelerators.