- Researchers introduce Blink, a novel benchmark for assessing multimodal LLMs.

- Blink focuses on core visual perception abilities that are often overlooked in existing evaluations.

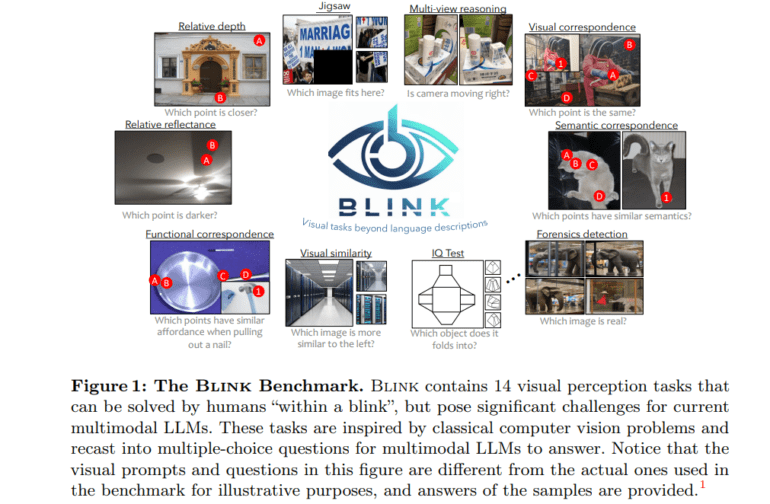

- Fourteen classic computer vision challenges, from pattern matching to visual understanding, are included.

- Tasks are transformed into question-and-answer sessions, enhancing evaluation dynamics.

- Blink comprises 3,800 questions and 7,300 curated photographs, challenging LLMs across diverse stimuli.

- Human performance surpasses machine capabilities, highlighting the complexity of visual perception.

- Integration of specialized insights into LLM architectures is deemed necessary for improved performance.

Main AI News:

In the realm of computer vision, the quest to decipher images as more than just flat “patterns” has long been underway. Early endeavors delved into understanding images as projections of intricate 3D scenes, prompting the creation of intermediary tasks aimed at unraveling optical properties, geometric reasoning, visual correspondence, and more. However, in the era dominated by large language models (LLMs), the focus shifted towards tasks articulated in natural language, sidelining core perceptual challenges.

A collaborative endeavor led by esteemed researchers from renowned institutions such as the University of Pennsylvania and the Allen Institute for AI, among others, sheds light on the overlooked realm of visual perception in evaluating multimodal LLMs. This groundbreaking study unveils Blink, a pioneering benchmark meticulously crafted to assess core visual perception abilities often neglected in existing evaluations.

Blink introduces a comprehensive array of fourteen classic computer vision challenges, ranging from basic pattern matching to advanced visual understanding, all designed to push the boundaries of multimodal LLM capabilities. Each challenge within Blink is carefully curated to demand genuine comprehension of image content, eschewing superficial labeling for deep perceptual insights.

Revolutionizing conventional evaluation metrics, Blink presents a paradigm shift by transforming traditional tasks into dynamic question-and-answer sessions, incorporating both textual and pictorial responses. With 3,800 questions and 7,300 meticulously selected photographs, Blink offers a diverse array of stimuli, ranging from urban landscapes to natural vistas, meticulously curated to challenge the perceptual prowess of multimodal LLMs.

In rigorous evaluations conducted on Blink, seventeen multimodal LLMs underwent thorough scrutiny, revealing a stark dissonance between human perceptual prowess and machine capabilities. Despite the challenges posed by Blink’s tasks, humans effortlessly outperform machines, underscoring the intrinsic complexity of visual perception. Notably, the performance gap between multimodal LLMs and expert vision models highlights the need for deeper integration of specialized insights into LLM architectures.

The findings of this seminal study debunk prior claims regarding the perceptual capacities of multimodal LLMs, paving the way for a reevaluation of their true capabilities. Moreover, Blink emerges as a pivotal platform for bridging the gap between conventional perceptual paradigms and state-of-the-art LLM capabilities, heralding a new era of advancements in the field of multimodal AI.

Conclusion:

The introduction of Blink marks a pivotal shift in multimodal LLM evaluation, emphasizing the importance of core visual perception abilities. This underscores the need for continued research and development in integrating specialized insights into LLM architectures to bridge the performance gap between machines and human perception, opening avenues for advancements in the multimodal AI market.