- Cohere AI introduces the Aya23 models, a significant step forward for multilingual NLP.

- The Aya23 family includes 8B and 35B parameter models, both optimized for text generation.

- The models support 23 languages, aiming for accurate, coherent output across a diverse set of languages.

- Built on a transformer architecture, the Aya23 models undergo Instruction Fine-Tuning (IFT) to improve how well they follow instructions.

- Evaluation shows significant gains in multilingual text generation.

Main AI News:

In natural language processing (NLP), the effort to enable computers to comprehend, interpret, and produce human language continues to evolve. The field spans tasks from translation to sentiment analysis and text generation, all aimed at seamless interaction between humans and machines through language. Meeting that goal requires sophisticated models that can handle the intricacies of human language, including syntax, semantics, and context.

Traditional models often struggle to handle diverse languages efficiently and require substantial training and resources. Differences in syntax, semantics, and context across languages pose a formidable challenge, especially in an increasingly globalized world where demand for multilingual applications keeps growing.

Transformer-based models are widely regarded as among the most promising tools in NLP. Deep learning models such as BERT and GPT have shown remarkable results in understanding (BERT) and generating (GPT) text across a range of NLP tasks. Their effectiveness across multiple languages, however, has been questioned, and they often need extensive fine-tuning to perform well in different languages. That fine-tuning is indispensable but resource-intensive and time-consuming, which limits the accessibility and scalability of such models.

This is where Cohere AI’s Aya23 models come in, aiming to substantially improve multilingual capabilities in NLP. The Aya23 family includes models with 8 billion and 35 billion parameters, placing them among the larger multilingual models available. Let’s look at each in turn:

Aya23-8B:

- With 8 billion parameters, this is the smaller and less resource-hungry of the two models, built for multilingual text generation.

- It supports 23 languages, including Arabic, Chinese, English, French, German, and Spanish, and is optimized to generate accurate, contextually relevant text in each of them.

Aya23-35B:

- With 35 billion parameters, this model offers greater capacity for complex multilingual tasks.

- Like the 8B model, it supports the same 23 languages, but it performs better at keeping generated text consistent and coherent. That makes it a strong candidate for applications that demand precision and broad linguistic coverage.
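To make the difference between the two sizes concrete, here is a back-of-the-envelope estimate of how much memory the weights alone occupy. It is a sketch under stated assumptions: the nominal 8B and 35B parameter counts (actual counts differ slightly), standard byte widths per numeric format, and no allowance for activations, the KV cache, or framework overhead, all of which add to real-world requirements.

```python
# Back-of-the-envelope weight-memory estimate for the two Aya23 variants.
# Assumptions: nominal parameter counts (8B and 35B) and weights only;
# activations, KV cache, and runtime overhead are NOT included.

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,
    "int8": 1,
    "int4": 0.5,
}

# Nominal sizes taken from the model names, not exact parameter counts.
NOMINAL_PARAMS = {
    "Aya23-8B": 8e9,
    "Aya23-35B": 35e9,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

At bf16 precision this works out to roughly 16 GB of weights for the 8B model and roughly 70 GB for the 35B model, which is why the smaller variant is the easier one to deploy on modest hardware.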

At the heart of the Aya23 models lies an optimized transformer architecture that generates text from input prompts. The models are then trained with Instruction Fine-Tuning (IFT), a process that sharpens their ability to follow human instructions. IFT plays a key role in helping the models produce coherent, contextually appropriate responses across many languages, particularly those where training data may be scarce.
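Instruction fine-tuning is typically implemented as supervised training on (instruction, response) pairs. The sketch below shows one common way such training examples are prepared; it is a generic illustration of the technique, not Cohere’s documented pipeline. The `<user>`/`<assistant>` markers, the toy whitespace tokenizer, and the `build_ift_example` helper are all hypothetical, and `IGNORE_INDEX = -100` mirrors the default `ignore_index` of PyTorch’s cross-entropy loss.

```python
# Generic sketch of preparing instruction fine-tuning (IFT) examples:
# prompt and response are concatenated, and only response tokens are
# used as training targets.
# Hypothetical pieces (for illustration only, not Cohere's pipeline):
#   - ToyTokenizer stands in for a real subword tokenizer,
#   - the "<user>"/"<assistant>"/"<eos>" markers are an illustrative template.
from dataclasses import dataclass
from typing import Dict, List

# Label value skipped by the loss (PyTorch CrossEntropyLoss default ignore_index).
IGNORE_INDEX = -100


@dataclass
class TrainingExample:
    input_ids: List[int]
    labels: List[int]  # aligned with input_ids; the next-token shift is left to the loss code


class ToyTokenizer:
    """Assigns a new integer id to every unseen whitespace-separated token."""

    def __init__(self) -> None:
        self.vocab: Dict[str, int] = {}

    def encode(self, text: str) -> List[int]:
        ids = []
        for tok in text.split():
            if tok not in self.vocab:
                self.vocab[tok] = len(self.vocab)
            ids.append(self.vocab[tok])
        return ids


def build_ift_example(tokenizer: ToyTokenizer, instruction: str, response: str) -> TrainingExample:
    """Build one training example whose prompt tokens are masked out of the loss."""
    prompt_ids = tokenizer.encode(f"<user> {instruction} <assistant>")
    response_ids = tokenizer.encode(f"{response} <eos>")
    input_ids = prompt_ids + response_ids
    # Prompt positions get IGNORE_INDEX, so the model is trained only on the response.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return TrainingExample(input_ids=input_ids, labels=labels)


if __name__ == "__main__":
    tok = ToyTokenizer()
    # Instructions in different languages share one template, so examples
    # in many languages can be mixed into a single training set.
    pairs = [
        ("Translate to French: good morning", "bonjour"),
        ("Traduce al inglés: buenos días", "good morning"),
    ]
    for instruction, response in pairs:
        ex = build_ift_example(tok, instruction, response)
        print(ex.input_ids, ex.labels)
```

Masking the prompt means the loss only rewards the model for producing the response, which is what trains instruction-following behavior rather than prompt memorization.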

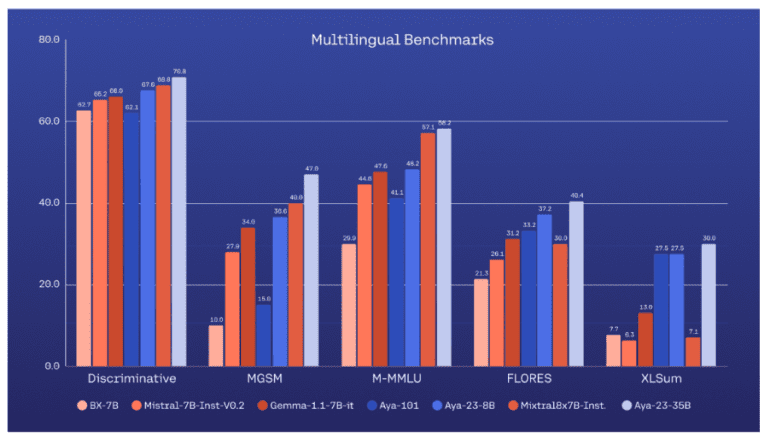

Thorough evaluation shows strong multilingual text generation from both the 8-billion-parameter and the 35-billion-parameter variants, with significant gains in producing precise, contextually relevant text across all 23 supported languages. Both models are notably good at keeping generated text coherent and consistent, qualities that matter for translation, content creation, and conversational agents.

Conclusion:

Cohere AI’s Aya23 models mark a significant step forward for multilingual NLP. With support for 23 languages and two model sizes to suit different needs, they are well placed to meet the growing demand for accurate, coherent text generation across languages. The release points to promising applications in translation services, content creation, and conversational AI, with the potential to reshape how language technology serves global markets.