TL;DR:

- Dolphins, a novel vision-language model, was developed by a multi-institution team as a conversational driving assistant.

- It excels in in-context learning, integrates visual understanding, and addresses complex AV scenarios.

- Dolphins’ fine-tuning with multimodal datasets enhances its capabilities for autonomous vehicles (AVs).

- The model showcases human-like reasoning, interpretability, and rapid adaptation in intricate driving scenarios.

- Computational challenges are acknowledged, with a focus on customized, power-efficient model versions.

- Dolphins marks a significant step toward autonomous driving and advanced AI capabilities in AVs.

Main AI News:

In the realm of artificial intelligence research, a new vision-language model named ‘Dolphins’ has emerged. Developed collaboratively by teams from the University of Wisconsin-Madison, NVIDIA, the University of Michigan, and Stanford University, Dolphins represents a notable advance in conversational driving assistants. The model aims to imbue autonomous vehicles (AVs) with human-like abilities, including rapid learning, adaptation, error recovery, and interpretability during interactive dialogues.

Unlike earlier language-model-based driving systems such as DriveLikeHuman and GPT-Driver, which often lack the robust visual features needed for autonomous driving, Dolphins integrates language understanding with visual perception. It shows strong in-context learning and handles diverse video inputs. Drawing inspiration from Flamingo’s success in multimodal in-context learning, Dolphins applies the same approach, combining textual and visual data to improve comprehension.

At the core of this research lies the challenge of achieving full autonomy in vehicular systems. Dolphins is designed to give AVs a human-like understanding of their surroundings and the ability to respond well to intricate scenarios. Existing data-driven and modular autonomous driving systems struggle with integration and performance issues. Against this backdrop, Dolphins demonstrates advanced understanding, instant learning, and swift error recovery. It also places significant emphasis on interpretability, which fosters trust and transparency and helps narrow the gap between current autonomous systems and human-like driving capabilities.

To achieve this, Dolphins builds on OpenFlamingo, an open-source vision-language model, and uses Grounded Chain of Thought (GCoT) to strengthen its reasoning in the AV context. It is fine-tuned on both real-world and synthetic AV datasets, which gives it a robust foundation for tackling intricate driving scenarios. To support detailed conversational tasks, the researchers curated a multimodal in-context instruction tuning dataset, which is used in both the training and the evaluation of Dolphins.
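For readers curious what a multimodal in-context prompt can look like, here is a minimal sketch. It assumes the interleaved text format used by OpenFlamingo, where `<image>` marks a visual input and `<|endofchunk|>` closes each example. The `DrivingExample` class, the `build_prompt` function, and the `User:` / `GPT:` template are hypothetical names chosen for illustration, not Dolphins’ actual data format.

```python
from dataclasses import dataclass
from typing import List

IMAGE_TOKEN = "<image>"          # OpenFlamingo's placeholder for a visual input
END_OF_CHUNK = "<|endofchunk|>"  # OpenFlamingo's separator between examples


@dataclass
class DrivingExample:
    """One hypothetical in-context demonstration: a clip plus a Q/A pair."""
    video_path: str   # illustrative only; this sketch never opens the file
    instruction: str
    answer: str


def build_prompt(demos: List[DrivingExample], query_instruction: str) -> str:
    """Interleave demonstrations and a final query into one text prompt.

    The "User:"/"GPT:" wording is an assumption made for this sketch.
    """
    parts = []
    for demo in demos:
        # Each solved example: visual placeholder, question, answer, separator.
        parts.append(
            f"{IMAGE_TOKEN}User: {demo.instruction} GPT: {demo.answer}{END_OF_CHUNK}"
        )
    # The query has a visual placeholder and a question, but no answer yet.
    parts.append(f"{IMAGE_TOKEN}User: {query_instruction} GPT:")
    return "".join(parts)


demos = [
    DrivingExample("clip_001.mp4", "What is the ego vehicle doing?",
                   "It is slowing down for a red light."),
    DrivingExample("clip_002.mp4", "What is the ego vehicle doing?",
                   "It is changing to the left lane."),
]
prompt = build_prompt(demos, "What is the ego vehicle doing?")
print(prompt)
```

The model would see the two solved examples before the unanswered query, which is the mechanism behind the rapid, training-free adaptation the article describes. In a real pipeline the visual features for each clip would be supplied to the model separately, aligned with the `<image>` placeholders.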

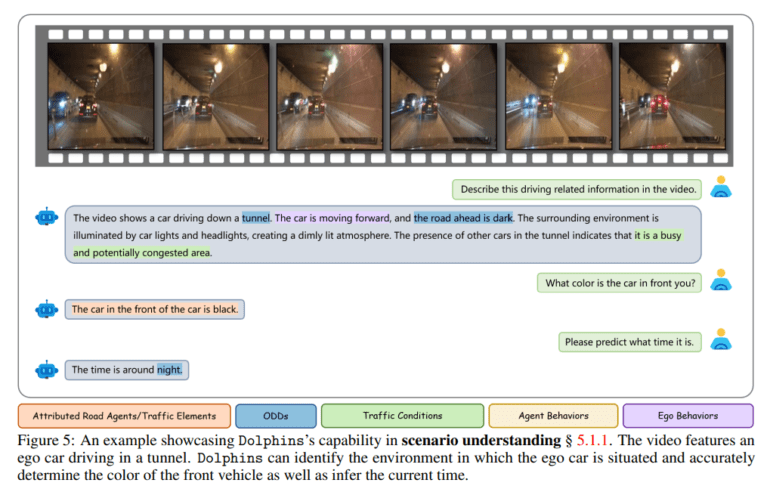

Dolphins is notably versatile. It handles a wide array of autonomous vehicle tasks and exhibits human-like traits such as instant adaptation and error correction. It can identify precise driving locations, assess traffic conditions, and interpret the behaviors of road agents. This fine-grained capability results from its grounding in a comprehensive image dataset and its subsequent fine-tuning in the specialized domain of autonomous driving. The multimodal in-context instruction tuning dataset plays an instrumental role in developing these skills.

As a conversational driving assistant, Dolphins performs strongly across a range of AV tasks, particularly in interpretability and rapid adaptation. The researchers also acknowledge the computational challenges that lie ahead, especially achieving high frame rates on edge devices and managing power consumption. To address these challenges, they propose building customized and distilled versions of VLMs such as Dolphins. This approach aims to balance the heavy computational demands of advanced AI models against the need for power efficiency. Unlocking the full potential of AVs with advanced AI capabilities like those of Dolphins will require continued exploration and innovation.
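The article does not say how a distilled version would be trained. As one common illustration, the sketch below shows the classic soft-target distillation loss, in which a small student model is trained to match a large teacher’s temperature-softened output distribution. The function names, the logits, and the three action labels are assumptions made for this example and do not come from the Dolphins work.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher soft targets
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


# Hypothetical logits over three actions: brake, keep lane, accelerate.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 1.0]
loss = distillation_loss(teacher, student)
print(f"distillation loss: {loss:.4f}")
```

The loss is zero when the student reproduces the teacher’s distribution exactly and grows as the two diverge. In practice it is usually combined with an ordinary task loss on labeled data.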

Conclusion:

The introduction of Dolphins, a cutting-edge vision-language model, marks a pivotal moment in the autonomous driving industry. Its advanced capabilities and emphasis on interpretability promise to bridge the gap between existing AV systems and human-like driving, setting a new standard for intelligent and efficient autonomous vehicles. This development underscores the growing importance of AI in the AV market, driving innovation and pushing the boundaries of what is achievable in autonomous driving technology.