- SliCK framework introduced for evaluating new knowledge integration in LLMs.

- Categorizes knowledge into levels, aiding nuanced model training.

- Leveraged PaLM model fine-tuned on datasets with varying knowledge proportions.

- Study quantifies model performance across knowledge categories using EM metrics.

- Results show SliCK enhances fine-tuning process, optimizing model accuracy.

Main AI News:

Cutting-edge research in computational linguistics continuously seeks methods to enhance the performance of large language models (LLMs) while integrating new knowledge seamlessly. The challenge lies in preserving accuracy as these models expand their knowledge bases, crucial for various language processing applications.

Traditionally, supervised fine-tuning has been a popular method, involving incremental training on data aligned with or extending beyond pre-existing knowledge. However, its efficacy has been inconsistent. This process exposes the model to examples it may partially recognize or be unaware of, compelling it to refine its responses accordingly. The success of this approach is typically gauged by how well models maintain performance when encountering data that aligns with or extends their existing knowledge.

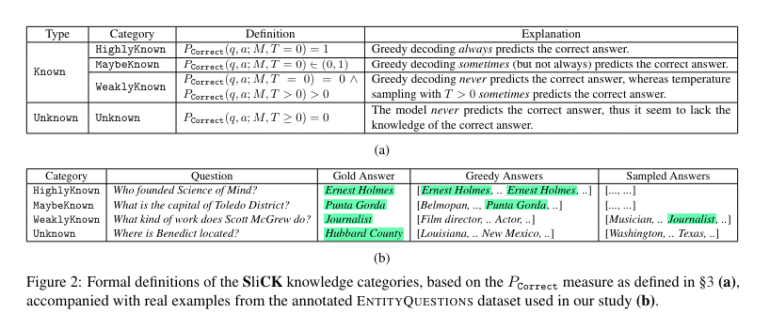

A collaborative effort between Technion – Israel Institute of Technology and Google Research introduces SliCK, a pioneering framework tailored to evaluating the integration of new knowledge within LLMs. What sets this methodology apart is its categorization of knowledge into distinct levels, ranging from HighlyKnown to Unknown. This granular approach offers insights into how different types of information impact model performance, underscoring the nuanced nature of model training.

Leveraging the PaLM model, a robust LLM developed by Google, the study fine-tuned datasets meticulously crafted to encompass varying proportions of knowledge categories: HighlyKnown, MaybeKnown, WeaklyKnown, and Unknown. These datasets, derived from a curated subset of factual questions sourced from Wikidata relations, enable a controlled exploration of the model’s learning dynamics. By quantifying the model’s performance across these categories using exact match (EM) metrics, the experiment assesses how effectively the model assimilates new information while mitigating the risk of hallucinations. This structured methodology offers a comprehensive understanding of the impact of fine-tuning with both familiar and novel data on model accuracy.

The study’s results underscore the efficacy of the SliCK categorization in refining the fine-tuning process. Models trained using this structured approach, particularly with a 50% Known and 50% Unknown mix, exhibit an optimized balance, achieving a 5% higher accuracy in generating correct responses compared to models trained predominantly on Unknown data. Conversely, when the proportion of Unknown data surpasses 70%, the models’ susceptibility to hallucinations escalates by approximately 12%. These findings underscore SliCK’s pivotal role in quantitatively assessing and mitigating the risk of errors as new information is integrated during LLM fine-tuning.

Conclusion:

The introduction of the SliCK framework marks a significant advancement in the field of language processing, offering a structured approach to evaluating and integrating new knowledge within LLMs. By categorizing knowledge levels and meticulously assessing model performance, SliCK enhances the fine-tuning process, leading to optimized accuracy. This development underscores the importance of nuanced model training methodologies in meeting the evolving demands of language processing applications.