TL;DR:

- FreeNoise is an AI method for generating longer videos driven by multiple text prompts.

- Developed by researchers, it addresses a limitation of existing video generation models, which are typically trained on a limited number of frames.

- FreeNoise works on top of pretrained video diffusion models without retraining them and keeps content consistent across frames.

- It combines noise rescheduling, which preserves long-range correlation between frames, with window-based temporal attention, which keeps computation efficient.

- A motion injection method maintains visual consistency across text prompts.

- Extensive experiments and user studies confirm its superiority in content consistency, video quality, and alignment.

- FreeNoise adds only about 17% extra time cost, compared with roughly 255% for the previous most effective method.

- Future research aims to refine techniques and explore broader applications.

Main AI News:

In the fast-moving field of artificial intelligence, a new method called FreeNoise extends what video generation models can do. FreeNoise generates longer videos, spanning up to 512 frames, driven by multiple text prompts.

The Brainchild of Visionaries

FreeNoise addresses a key limitation of existing video generation models: they are typically trained on a limited number of frames, which restricts how long the generated videos can be. FreeNoise extends pretrained video diffusion models to longer videos without additional training, while keeping the content consistent.

Unveiling the Technology

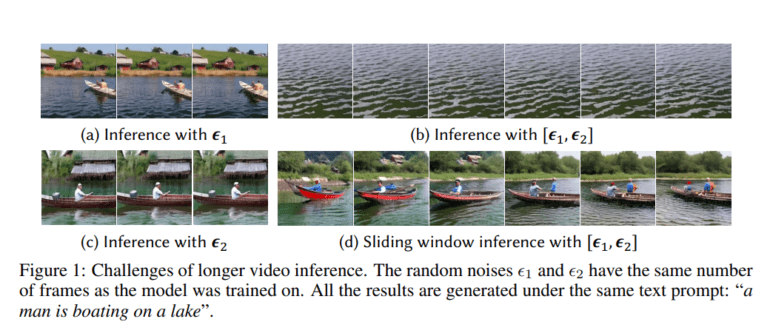

So, what sets FreeNoise apart? At its core, it combines noise rescheduling, which reuses and locally shuffles the initial noise so that distant frames stay correlated, with window-based temporal attention, which processes frames in overlapping fixed-length windows. Together, these let a pretrained model generate longer videos from multiple text prompts while adding little extra computation.
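To make the rescheduling idea more concrete, here is a minimal sketch, assuming a scheme in which the first `base_len` frames (the length the model was trained on) receive fresh Gaussian noise and every later window of `window` frames reuses a consecutive chunk of that base noise in a locally shuffled order. The function names `reschedule_noise_indices` and `rescheduled_noise` and their parameters are hypothetical and are not taken from the FreeNoise code; this illustrates the idea, not the authors' implementation.

```python
import random


def reschedule_noise_indices(num_frames, base_len, window, seed=0):
    """Map each output frame to the base noise frame it reuses.

    Hypothetical sketch: frames 0..base_len-1 get their own noise; each later
    window of `window` frames reuses a consecutive chunk of base frames,
    shuffled locally so the noise is correlated but not an exact repeat.
    """
    if not 0 < window <= base_len:
        raise ValueError("window must be in 1..base_len")
    rng = random.Random(seed)
    indices = list(range(base_len))
    while len(indices) < num_frames:
        # Offset into the base sequence, wrapping around once all base frames are reused.
        start = (len(indices) - base_len) % base_len
        chunk = [(start + k) % base_len for k in range(window)]
        rng.shuffle(chunk)  # local shuffle within the window
        indices.extend(chunk)
    return indices[:num_frames]


def rescheduled_noise(num_frames, base_len, window, dim, seed=0):
    """Build per-frame Gaussian noise vectors that follow the rescheduled indices."""
    rng = random.Random(seed)
    base = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(base_len)]
    # Copy each reused vector so frames do not alias the same list object.
    return [list(base[i]) for i in reschedule_noise_indices(num_frames, base_len, window, seed)]
```

For example, `reschedule_noise_indices(24, 16, 4)` keeps frames 0 to 15 as they are, and the next two windows are shuffled permutations of base frames `[0, 1, 2, 3]` and `[4, 5, 6, 7]`. Reusing base noise keeps long-range correlation; the local shuffle avoids simply looping the same clip.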

The Motion Magic

One of FreeNoise’s standout features is its motion injection method. When a video is generated from multiple text prompts, it keeps the layout and object appearance consistent across prompt changes, while each part of the video still follows its own prompt.
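One way to picture this is as a schedule that decides which prompt conditions each frame at each denoising step. The sketch below assumes a hypothetical rule, not the paper's exact one: early, high-noise steps (where coarse layout and appearance take shape) use a shared `base_prompt` for every frame, and once the timestep drops to `t_switch` or below, each frame switches to the prompt of its own segment. The names `prompt_for`, `segments`, `base_prompt`, and `t_switch` are illustrative assumptions.

```python
from bisect import bisect_right


def prompt_for(frame, t, segments, base_prompt, t_switch):
    """Return the prompt that conditions `frame` at denoising timestep `t`.

    Hypothetical schedule: denoising runs from large t (noisy) to small t.
    While t > t_switch, every frame shares `base_prompt`, so the layout formed
    in early steps is common to the whole video. Afterwards, each frame uses
    the prompt of the segment it belongs to, injecting that segment's motion.

    segments: (start_frame, prompt) pairs sorted by start_frame, first start 0.
    """
    if t > t_switch:
        return base_prompt
    starts = [start for start, _ in segments]
    # Last segment whose start is at or before this frame.
    return segments[bisect_right(starts, frame) - 1][1]
```

With `segments = [(0, "A"), (4, "B")]` and `t_switch = 600`, frame 5 is conditioned on `base_prompt` at `t = 900` and on `"B"` at `t = 500`.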

Proven Excellence

FreeNoise’s advantages are backed by extensive experiments and user studies. It outperforms baseline methods in content consistency, video quality, and video-text alignment, and participants in the user studies preferred FreeNoise-generated videos.

Efficiency at Its Core

FreeNoise is also efficient. It lets pretrained video diffusion models produce longer, multi-text-conditioned videos with only about 17% extra time cost, compared with roughly 255% for the previous most effective method.

The Future of FreeNoise

As we look ahead, the future of FreeNoise appears even more promising. Ongoing research aims to further refine the noise rescheduling technique and enhance the motion injection method, making multi-text video generation even more seamless. Advanced evaluation metrics for video quality and content consistency are also on the horizon, promising a more comprehensive model assessment.

Beyond Video Generation

FreeNoise’s ideas may also prove useful beyond video generation, for example in image generation tasks such as text-to-image synthesis. Scaling FreeNoise to longer videos and more complex text conditions opens up further avenues for research in text-driven video generation.

Conclusion:

FreeNoise is more than an incremental tweak. By generating longer videos, maintaining content consistency, and keeping time costs low, it could change how we approach video generation. Stay tuned for what comes next as FreeNoise continues to shape AI-driven creativity.