- Google AI pioneers hybrid datasets to elevate AR glasses speech recognition.

- Combining simulated and real-world data enhances accuracy in noisy environments.

- Simulation-based approach captures diverse acoustic landscapes economically.

- A controlled data synthesis pipeline yields rich, varied training datasets.

- Experimental validation confirms significant performance gains over traditional methods.

- Modeling microphone directivity in the simulation improves model performance and reduces reliance on real-world data.

Main AI News:

Advances in speech recognition for AR glasses are reshaping how people communicate. Google AI’s research pairs sound separation with automatic speech recognition (ASR) models, using hybrid datasets to improve recognition accuracy in noisy and reverberant settings. The work is particularly relevant for people with hearing impairments and for those navigating conversations in a foreign language.

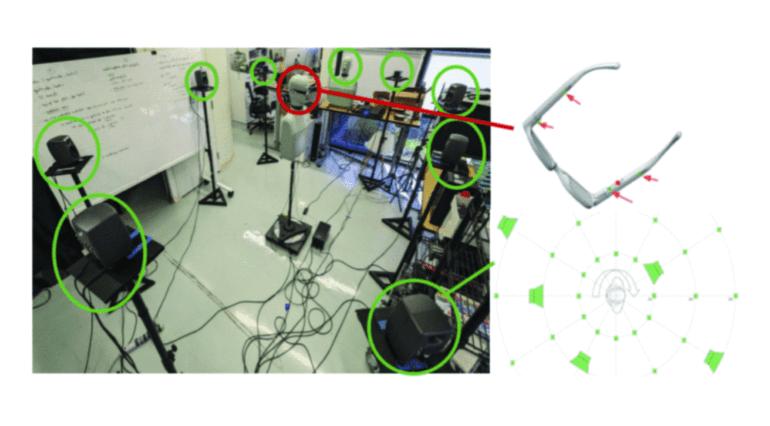

In the quest for better speech recognition on AR glasses, traditional methods struggle to isolate speech from background noise. Google AI’s approach blends simulated audio with real recordings to cover diverse acoustic environments quickly and economically.

The process unfolds in two stages. First, real-world impulse responses (IRs) collected from AR glasses in various contexts lay the foundation, capturing device-specific acoustic characteristics. Next, an extended room simulator generates simulated IRs that include frequency-dependent reflections and microphone directivity, making the dataset more realistic.
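
To make the idea of microphone directivity concrete, here is a minimal sketch of how a simulator might weight each reflection by the direction it arrives from. The first-order pattern, the function names, and the `alpha` parameter are illustrative assumptions, not details of Google AI’s extended room simulator.

```python
import math

def first_order_gain(angle_rad: float, alpha: float = 0.5) -> float:
    """Gain of a first-order directional microphone (assumed model).

    angle_rad is the arrival angle relative to the microphone axis.
    alpha = 1.0 is omnidirectional, 0.5 is cardioid, 0.0 is figure-eight.
    """
    return alpha + (1.0 - alpha) * math.cos(angle_rad)

def weight_reflections(amplitudes, angles_rad, alpha=0.5):
    """Scale each reflection amplitude by the gain for its arrival angle."""
    return [a * first_order_gain(t, alpha) for a, t in zip(amplitudes, angles_rad)]

# Hypothetical reflections: on-axis, from the side, and from behind.
weighted = weight_reflections([1.0, 0.6, 0.4], [0.0, math.pi / 2, math.pi])
```

With a cardioid pattern, sound arriving from behind the microphone is strongly attenuated, which is the kind of device-specific effect an omnidirectional simulation would miss.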

The crux lies in the synthesis: a data generation pipeline combines reverberant speech and noise sources under controlled conditions to produce rich, varied training datasets.
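
The synthesis step can be sketched as follows: convolve dry speech with an IR to add reverberation, then add noise at a chosen signal-to-noise ratio. This is a simplified illustration using NumPy; the function names and the SNR-based mixing are assumptions, not the actual pipeline.

```python
import numpy as np

def reverberate(dry: np.ndarray, ir: np.ndarray) -> np.ndarray:
    """Apply an impulse response to dry audio, trimmed to the input length."""
    return np.convolve(dry, ir)[: len(dry)]

def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float):
    """Scale noise so the speech-to-noise power ratio equals snr_db.

    Assumes noise is at least as long as speech and is not silent.
    Returns the mixture and the scaled noise.
    """
    noise = noise[: len(speech)]
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(noise ** 2)
    scale = np.sqrt(speech_power / (noise_power * 10 ** (snr_db / 10)))
    scaled_noise = scale * noise
    return speech + scaled_noise, scaled_noise

# Random signals stand in for real recordings in this sketch.
rng = np.random.default_rng(0)
dry_speech = rng.standard_normal(16000)
noise = rng.standard_normal(16000)
# Toy IR: exponentially decaying noise, a stand-in for a measured or simulated IR.
ir = rng.standard_normal(2000) * np.exp(-np.arange(2000) / 300.0)

reverberant = reverberate(dry_speech, ir)
mixture, scaled_noise = mix_at_snr(reverberant, noise, snr_db=5.0)
```

Varying the IR, the noise source, and the SNR per example is one way to keep the generated data under explicit control.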

Experimental validation supports the approach. The hybrid dataset, which combines real-world and simulated IRs, yields substantial gains in speech recognition accuracy. Models trained on it outperform those trained solely on real-world data or solely on simulated data. Moreover, modeling microphone directivity in the simulator improves model performance while reducing dependence on real-world data.
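
One simple way to build such a hybrid dataset is to draw each training example’s IR from either the real or the simulated pool. The sketch below assumes a fixed `real_fraction` mixing ratio; the article does not state how the two sources were actually balanced, so treat the ratio and the names as hypothetical.

```python
import random

def sample_ir(real_irs, simulated_irs, real_fraction, rng):
    """Pick an IR from the real pool with probability real_fraction,
    otherwise from the simulated pool (assumed sampling scheme)."""
    pool = real_irs if rng.random() < real_fraction else simulated_irs
    return rng.choice(pool)

# Placeholder labels stand in for actual IR arrays.
real_irs = ["real_0", "real_1"]
simulated_irs = ["sim_0", "sim_1", "sim_2"]
rng = random.Random(0)
batch = [sample_ir(real_irs, simulated_irs, 0.3, rng) for _ in range(8)]
```

Setting `real_fraction` to 1.0 or 0.0 reproduces the real-only and simulated-only baselines that the hybrid models are compared against.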

Conclusion:

The fusion of simulated and real-world data for AR glasses speech recognition signifies a paradigm shift in communication technology. This innovation not only elevates user experiences but also underscores the market’s trajectory towards more accurate and efficient AR solutions. Companies investing in such advancements are poised to lead in the burgeoning AR market, catering to diverse user needs and preferences.