- Google DeepMind researchers delve into the ethical implications of advanced AI assistants.

- Next-generation AI assistants promise enhanced autonomy and versatility.

- The integration of AI assistants into various aspects of human life raises ethical and societal concerns.

- The study examines moral issues, including value alignment, security, and privacy.

- Sociological perspectives highlight economic, collaborative, and environmental impacts.

- Comprehensive sociotechnical assessments are crucial for responsible deployment.

Main AI News:

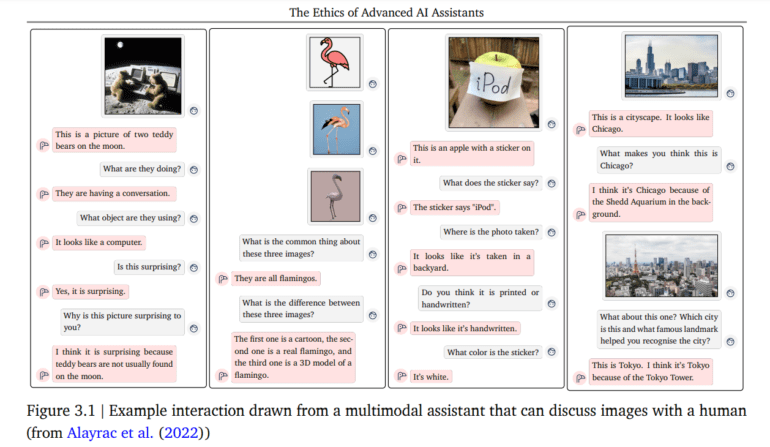

In a recent publication, researchers from Google DeepMind examined the ethical dimensions of advanced Artificial Intelligence (AI) assistants, weighing their potential benefits against the societal and ethical dilemmas they pose. These assistants, characterized by natural language interfaces, are designed to plan and execute user-defined tasks across diverse domains.

The paper begins with the moral questions raised by the evolution of advanced AI assistants. Expected to surpass predecessors such as Apple’s Siri or Amazon’s Alexa in autonomy, versatility, and operational scope, these next-generation assistants are poised to integrate into many facets of human life. The arrival of products and platforms such as Meta AI, Google’s Gemini models, Microsoft’s Copilot, Inflection’s Pi, and OpenAI’s Assistants API underscores their growing potential.

As these AI assistants proliferate, they raise a range of ethical and societal concerns. Human-AI interaction is opening a new era across social, political, economic, and personal life, and the assistants therefore need to be carefully aligned with the aspirations and values of both individuals and communities.

The paper opens with an overview of the technology behind AI assistants, covering both their theoretical foundations and their practical applications. It then turns to moral considerations such as value alignment, well-being, security, and malicious use. From there it examines the relationship between advanced AI assistants and individual users, addressing privacy, persuasion, anthropomorphism, manipulation, and trust.

The paper then takes a broader sociological view of the widespread adoption of advanced assistants, raising concerns about economic impact, cooperation, equitable access, misinformation, and environmental cost. It stresses that these assistants should be evaluated not only on their technical capabilities but also on the dynamics of human-AI interaction and their wider effects on society.

According to the research paper, advanced AI assistants could have considerable influence over both individual lives and societal structures. Their enhanced capabilities, natural language interaction, and personalized services make them highly useful, but they also carry risks such as manipulation and undue influence. Robust safeguards are therefore needed to mitigate those risks.

Large-scale deployment of AI assistants could have unforeseen effects on institutions and social dynamics, which may require legislative and technical changes to support cooperation and equitable outcomes. To support responsible decision-making and deployment, the paper emphasizes comprehensive sociotechnical assessments of AI assistants, calling for these systems to be evaluated on human-AI interaction and societal implications in addition to technical performance.

Conclusion:

The insights from Google DeepMind’s study underscore the need for businesses to work through the ethical complexities of advanced AI assistants. As these technologies evolve, companies should prioritize comprehensive assessments and robust security measures to deploy them responsibly and limit potential risks to society. Understanding the broader societal implications is essential for shaping ethical frameworks and building trust in AI-driven solutions.