- Google introduces the Infini-attention technique for LLMs, enabling them to retain and utilize more information during interactions.

- Infini-attention divides input sequences into segments and applies standard attention within each segment to identify the relevant tokens.

- It compresses earlier segments into a fixed-size memory that the model can query when processing new segments.

- LLMs with Infini-attention can draw on context from much earlier in the input, which could lead to more accurate responses and stronger logical reasoning.

- The adoption of Infini-attention promises enhanced accuracy, logical inference, and creativity in AI-generated content.

Main AI News:

In the realm of artificial intelligence (AI), large language models (LLMs) stand as remarkable creations, capable of generating text, translating languages, and providing informative responses to inquiries. However, these models face a bottleneck: they can only attend to a limited amount of text at once, known as their context window. This limitation is akin to conversing with someone who can only recall the most recent few sentences spoken. To address this challenge, Google researchers have unveiled Infini-attention, a technique that lets LLMs retain and use far more information from long inputs while keeping memory and computation bounded. This could substantially widen the scope of conversations with AI models, allowing richer and more nuanced exchanges.

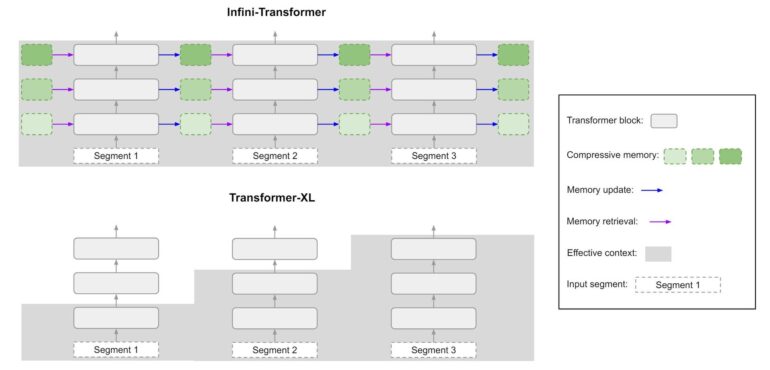

Understanding the mechanism behind Infini-attention shows what it changes. Conventional LLMs can attend only to tokens within a fixed-size context window, so anything earlier is effectively forgotten. Infini-attention instead processes the input in segments and carries information from earlier segments forward, combining standard attention with a compressive memory inside each attention layer. Here’s a closer look at its core functionalities:

- Chunking and Attention: Infini-attention splits the input sequence into fixed-length segments and applies standard causal dot-product attention within each segment. This local attention gives every token a weight for each earlier token in the same segment, reflecting how relevant it is in the current context.

- Memory Creation: Rather than discarding past segments once they fall outside the local attention window, Infini-attention reuses the key and value states the attention layer has already computed and folds them into a compressed memory that summarizes everything processed so far.

- Memory Storage: The compressed memory is a fixed-size matrix kept for each attention head, so its footprint does not grow with the length of the input. The research paper describes it as an associative memory in the style of linear attention, updated with each new segment, rather than an external key-value store or hierarchical index.

- Contextual Integration: When processing a new segment, the model uses that segment’s query states to retrieve information from the compressed memory, then blends the retrieved result with the local attention output through a learned gate. This lets each token draw on both nearby and long-range context, giving the model a more comprehensive view of the overall input sequence.
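The four steps above can be sketched in a few dozen lines. What follows is a minimal, single-head Python sketch based on our reading of the equations in Google’s Infini-attention paper; it is not Google’s implementation. It assumes the query, key, and value vectors for each segment are already computed (learned projections, multiple heads, and the output projection are omitted), uses the paper’s simpler "linear" memory update rather than its delta-rule variant, and fixes the gating parameter `beta` instead of learning it. All function names are hypothetical, and returning zeros from an empty memory on the first segment is our own simplification.

```python
import math


def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1: keeps memory keys/queries strictly positive
    return x + 1.0 if x > 0 else math.exp(x)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(v, M):
    # Row vector v (length d_k) times matrix M (d_k x d_v) -> length d_v
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]


def softmax(xs):
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    total = sum(es)
    return [e / total for e in es]


def local_attention(Q, K, V):
    # Step 1: causal scaled dot-product attention inside the current segment only
    d = len(Q[0])
    out = []
    for t, q in enumerate(Q):
        weights = softmax([dot(q, K[j]) / math.sqrt(d) for j in range(t + 1)])
        out.append([sum(weights[j] * V[j][c] for j in range(t + 1))
                    for c in range(len(V[0]))])
    return out


def retrieve(Q, M, z):
    # Step 4 (read): A_mem = sigma(Q) M / (sigma(Q) z), using earlier segments only
    out = []
    for q in Q:
        sq = [elu_plus_one(x) for x in q]
        denom = dot(sq, z)
        num = matvec(sq, M)
        # Simplification (hypothetical): an empty memory contributes zeros
        out.append([n / denom for n in num] if denom > 0 else [0.0] * len(num))
    return out


def update(K, V, M, z):
    # Steps 2-3 (write): M += sigma(K)^T V and z += sum_t sigma(K_t), in place
    for k, v in zip(K, V):
        sk = [elu_plus_one(x) for x in k]
        for i in range(len(sk)):
            z[i] += sk[i]
            for j in range(len(v)):
                M[i][j] += sk[i] * v[j]


def infini_attention(segments, beta):
    # segments: list of (Q, K, V) per segment; each is a list of token vectors
    d_k, d_v = len(segments[0][1][0]), len(segments[0][2][0])
    M = [[0.0] * d_v for _ in range(d_k)]  # fixed-size memory, never grows
    z = [0.0] * d_k                         # normalization term
    g = 1.0 / (1.0 + math.exp(-beta))       # gate = sigmoid(beta)
    outputs = []
    for Q, K, V in segments:
        a_mem = retrieve(Q, M, z)           # read before writing this segment
        a_dot = local_attention(Q, K, V)
        # Step 4 (combine): g * A_mem + (1 - g) * A_dot
        outputs.append([[g * m + (1.0 - g) * d for m, d in zip(rm, rd)]
                        for rm, rd in zip(a_mem, a_dot)])
        update(K, V, M, z)
    return outputs, M, z
```

However many segments are passed in, `M` stays a `d_k` x `d_v` matrix and `z` a length-`d_k` vector, which is what keeps memory use bounded. Calling `infini_attention(segments, beta=0.0)` weights memory and local attention equally; in the paper the gate is learned per head, letting each head decide how much to rely on long-range memory versus nearby tokens.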

Adopting Infini-attention could bring several potential benefits:

- Enhanced Accuracy and Informativeness: By incorporating a broader range of relevant information, LLMs can generate more precise and informative responses to complex queries.

- Logical Reasoning and Inference: Infini-attention enables LLMs to follow complex arguments by recalling past interactions, facilitating tasks requiring logical reasoning and inference.

- Creative Content Generation: With access to a broader context, LLMs can produce more coherent, context-aware text tailored to specific situations, from scripts to poems and emails.

As AI chatbots continue to proliferate, it’s important to strike a balance between context and clarity. While a broader context can enrich interactions for complex tasks, models should not be overwhelmed with irrelevant details. Achieving this balance helps AI companions evolve beyond mere repositories of information into intelligent and engaging conversational partners.

Conclusion:

Google’s introduction of Infini-attention marks a significant advancement in the capabilities of language models, particularly in their ability to handle long inputs such as extended, complex conversations. This innovation opens up opportunities for more accurate and engaging interactions with AI, potentially reshaping the market landscape by offering more sophisticated AI companions and content generation tools. As businesses integrate these advancements into their products and services, they can expect to deliver more personalized and insightful experiences to their users, driving increased engagement and satisfaction.