- The Hippocrates framework, introduced by researchers, offers an open-source solution for applying large language models (LLMs) in healthcare.

- Overcomes limitations of prior models, which relied on proprietary data, by openly releasing its resources, fostering innovation and collaboration in medical AI research.

- Integrates continual pre-training and reinforcement learning, enriched by insights from human experts, enhancing practical applicability in medical settings.

- The methodical approach includes continual pre-training on a comprehensive corpus of medical texts, fine-tuning with specialized datasets, and rigorous evaluation across diverse medical benchmarks.

- Hippo-7B models reach 59.9% 5-shot accuracy on MedQA, surpassing competing 70B-parameter models (58.5%) and consistently outperforming established medical LLMs across various benchmarks.

Main AI News:

Healthcare is undergoing a profound transformation driven by artificial intelligence (AI), which brings sophisticated computational methods to challenges ranging from diagnostics to treatment planning. Large language models (LLMs) are especially promising here: they can parse intricate medical data and offer insights that could reshape patient care and research.

A primary hurdle in healthcare is the complexity of medical data, combined with the exacting standards of accuracy and efficiency that medical diagnostics demand. To succeed in this domain, AI must not only handle vast volumes of data but also deliver precise, actionable insights in real-time clinical settings.

In prior research, models such as Meditron 70B and MedAlpaca have shown the potential of supervised fine-tuning and the LLaMA architecture for medical dialogue. BioGPT has demonstrated how transformers can be adapted to generate biomedical text, and PMC-LLaMA has improved performance through domain-specific pre-training. Nevertheless, these advances are constrained by limited access to proprietary datasets and by the difficulty of training models attuned to the nuances of medical terminology and patient data.

Enter “Hippocrates,” a groundbreaking open-source framework developed by researchers from Koç University, Hacettepe University, Yıldız Technical University, and Robert College. Unlike predecessors that rely on proprietary data, Hippocrates makes its resources openly available, fostering a culture of innovation and collaboration in medical AI research. What sets the framework apart is its integration of continual pre-training and reinforcement learning, informed by input from human experts, which improves its practical applicability in medical settings.

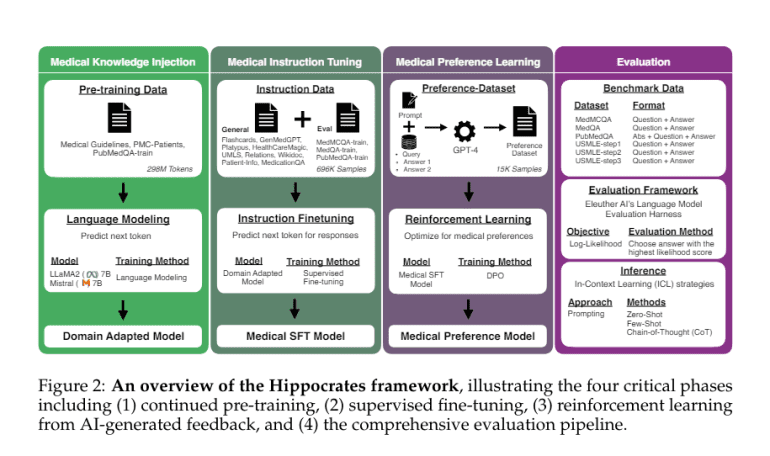

The Hippocrates framework follows a methodical approach that begins with continual pre-training on a large corpus of medical texts. The Hippo family of 7B-parameter models is then fine-tuned on specialized datasets such as MedQA and PMC-Patients. This iterative process uses instruction tuning and reinforcement learning to align model outputs with expert medical insights. Evaluation with EleutherAI's evaluation framework (the Language Model Evaluation Harness) tests the models across diverse medical benchmarks, validating their efficacy and reliability.
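To make the instruction-tuning step more concrete, here is a minimal Python sketch of how a MedQA-style multiple-choice record might be turned into an instruction/response pair for supervised fine-tuning. The `MCQItem` fields, the prompt wording, and the toy question are hypothetical illustrations; they are not the Hippocrates data format or templates.

```python
from dataclasses import dataclass


@dataclass
class MCQItem:
    """A MedQA-style multiple-choice question (hypothetical field names)."""
    question: str
    options: dict[str, str]  # option letter -> option text
    answer: str              # letter of the correct option


def to_instruction_pair(item: MCQItem) -> dict[str, str]:
    """Format one item as an instruction/response pair for supervised fine-tuning.

    The template text is an illustrative assumption, not the one used by Hippocrates.
    """
    # List options in letter order regardless of dict insertion order.
    option_lines = "\n".join(
        f"{letter}. {text}" for letter, text in sorted(item.options.items())
    )
    instruction = (
        "Answer the following medical question by choosing one option.\n\n"
        f"Question: {item.question}\n{option_lines}"
    )
    # The target response restates the correct letter and its text.
    response = f"{item.answer}. {item.options[item.answer]}"
    return {"instruction": instruction, "response": response}


if __name__ == "__main__":
    # Toy example, not taken from MedQA.
    item = MCQItem(
        question="Deficiency of which vitamin causes scurvy?",
        options={"A": "Vitamin A", "B": "Vitamin C", "C": "Vitamin D", "D": "Vitamin K"},
        answer="B",
    )
    pair = to_instruction_pair(item)
    print(pair["instruction"])
    print("->", pair["response"])
```

Restating the option text in the response, rather than emitting only the letter, is one design choice among several; a real pipeline would follow whatever template its authors specify.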

The results bear this out. The Hippo-7B models achieve a 5-shot accuracy of 59.9% on the MedQA dataset (that is, accuracy when five solved example questions are included in the prompt before each test question), surpassing the 58.5% of competing 70B-parameter models despite having one-tenth as many parameters. Moreover, these models consistently outperform established medical LLMs across various benchmarks, affirming the robustness of the training and fine-tuning methods employed. Such results underscore Hippocrates' capacity to improve the precision and reliability of AI applications in medicine.
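To clarify what a 5-shot score measures, the following Python sketch builds a prompt from five solved examples plus a test question, then counts how often the model's top-scoring option is correct. Harnesses such as EleutherAI's typically score multiple-choice questions by comparing the log-likelihood the model assigns to each answer option. Here that model call is a hypothetical `loglik` function, and the prompt format is an assumption rather than the exact MedQA template used in the Hippocrates evaluation.

```python
from typing import Callable, Sequence

# Hypothetical model interface: returns log P(continuation | context).
LogLikelihood = Callable[[str, str], float]
Example = tuple[str, Sequence[str], str]  # (question, options, correct letter)

LETTERS = "ABCDE"


def format_question(question: str, options: Sequence[str]) -> str:
    """Render a question and its lettered options, ending with an answer cue."""
    lines = [f"Question: {question}"]
    lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(options)]
    lines.append("Answer:")
    return "\n".join(lines)


def build_k_shot_prompt(shots: Sequence[Example], question: str, options: Sequence[str]) -> str:
    """Prepend k solved examples (k = 5 for a 5-shot setting) to the test question."""
    blocks = [format_question(q, opts) + f" {ans}" for q, opts, ans in shots]
    blocks.append(format_question(question, options))
    return "\n\n".join(blocks)


def predict(loglik: LogLikelihood, prompt: str, n_options: int) -> str:
    """Pick the option letter the model finds most likely after the prompt."""
    return max(LETTERS[:n_options], key=lambda letter: loglik(prompt, " " + letter))


def k_shot_accuracy(loglik: LogLikelihood, shots: Sequence[Example], test_items: Sequence[Example]) -> float:
    """Fraction of test questions whose highest-likelihood option is the correct one."""
    correct = sum(
        predict(loglik, build_k_shot_prompt(shots, q, opts), len(opts)) == ans
        for q, opts, ans in test_items
    )
    return correct / len(test_items)


if __name__ == "__main__":
    # Toy scorer that always prefers "A"; a real one would query the model.
    always_a: LogLikelihood = lambda ctx, cont: 0.0 if cont.strip() == "A" else -1.0
    shots = [("Toy shot question?", ["yes", "no"], "A")] * 5
    tests = [("Toy test 1?", ["yes", "no"], "A"), ("Toy test 2?", ["yes", "no"], "B")]
    print(k_shot_accuracy(always_a, shots, tests))  # → 0.5
```

Scoring each option by likelihood, rather than parsing free-form generated text, avoids answer-extraction errors and makes scores easier to compare across models.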

Conclusion:

The introduction of the Hippocrates framework marks a significant leap forward in integrating AI technologies into healthcare. By addressing key limitations of previous models and offering an open-source solution, Hippocrates not only improves the precision and reliability of AI applications in medical contexts but also fosters a culture of collaboration and innovation. This advance has the potential to reshape the market landscape, driving further adoption of AI in healthcare and catalyzing breakthroughs in patient care and medical research.