- IBM introduces a hybrid AI pattern for smaller language models (SLMs).

- Large language models (LLMs) face limitations in edge and constrained environments.

- SLMs tailored to specialized domains offer efficiency and performance benefits.

- Mistral AI’s model and IBM’s Granite 7B model, both offered on IBM’s Watson platform, exemplify resource-efficient alternatives.

- Embracing hybrid AI enables enterprises to balance data privacy, performance, and scalability.

Main AI News:

Large language models (LLMs) have changed how people interact with AI-driven applications. They can generate, summarize, and translate text, and even hold conversations. Because of their heavy computational requirements and data dependencies, however, LLMs have mostly run in cloud environments.

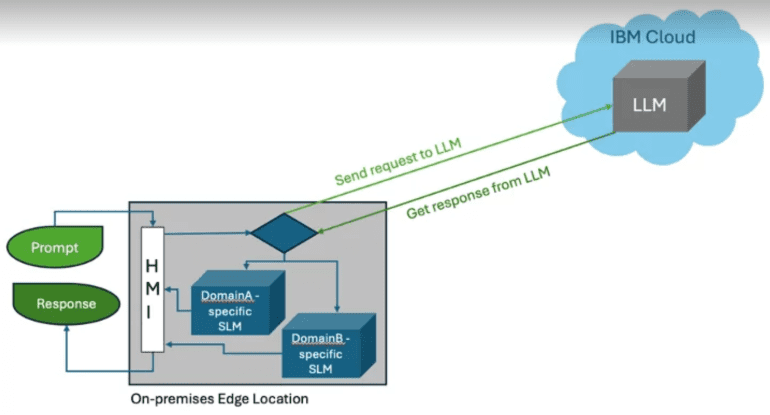

IBM has recently challenged the notion that “bigger is always better” in AI. In its latest press release, titled “Bigger isn’t always better: How hybrid AI pattern enables smaller language models,” IBM describes a hybrid AI pattern designed to let smaller language models (SLMs) deliver better performance and efficiency, particularly in resource-constrained environments and on mobile devices.

The starting point is the limitations of traditional LLMs. While these models excel at processing vast amounts of data from diverse sources, they often run into data privacy, latency, and scalability problems when deployed in edge and constrained environments. To address these shortcomings, IBM advocates hybrid AI models that combine the strengths of both LLMs and SLMs.

The press release reviews the fundamentals of LLMs, explaining their role in natural language processing (NLP) and the large computational resources required to train and deploy them. For all their capabilities, LLMs know only the data on which they were trained, which limits their accuracy on current or real-time information. This constraint motivates alternative approaches such as hybrid AI models.

Enterprises in specialized domains, such as telecommunications, healthcare, and oil and gas, stand to benefit substantially from SLMs tailored to their needs. Unlike their larger counterparts, SLMs are trained on domain-specific data and have fewer parameters, which allows efficient deployment in enterprise data centers or even on mobile devices with minimal computational overhead.

IBM backs this position with concrete offerings: the Mistral AI Model, developed by Mistral AI, and IBM’s own Granite 7B model are both available on its Watson platform. These compact models illustrate IBM’s aim of offering resource-efficient alternatives to traditional LLMs.

As part of a multi-model AI strategy, IBM emphasizes domain-specific models built on internal enterprise data as a way to drive differentiation and surface actionable insights. With hybrid AI, enterprises can balance data privacy, performance, and scalability, and so get more value from their AI investments.
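One way to picture the balance described above is a small router that decides, per request, whether a local domain-specific SLM or a cloud-hosted LLM should answer. The sketch below is illustrative only and is not taken from IBM's release: the names `Route`, `DOMAIN_TERMS`, `choose_route`, and `answer`, the telecom vocabulary, and the two routing rules are all assumptions, and the models are passed in as plain callables rather than real API clients.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Route:
    target: str  # "slm" (local, domain-specific) or "llm" (cloud, general)
    reason: str


# Hypothetical vocabulary for a telecom-focused SLM (an assumption for this sketch).
DOMAIN_TERMS = {"5g", "ran", "handover", "sim", "roaming"}


def choose_route(prompt: str, contains_private_data: bool) -> Route:
    """Pick a model for one request using two simple, assumed rules."""
    # Rule 1: requests carrying private data never leave the local model.
    if contains_private_data:
        return Route("slm", "private data stays on premises")
    # Rule 2: in-domain requests go to the smaller, specialized model.
    words = {w.strip(".,?!").lower() for w in prompt.split()}
    if words & DOMAIN_TERMS:
        return Route("slm", "in-domain request")
    # Everything else falls back to the general-purpose cloud model.
    return Route("llm", "general request")


def answer(
    prompt: str,
    contains_private_data: bool,
    slm: Callable[[str], str],
    llm: Callable[[str], str],
) -> str:
    """Send the prompt to whichever model the router selects."""
    route = choose_route(prompt, contains_private_data)
    model = slm if route.target == "slm" else llm
    return model(prompt)
```

In practice the keyword check would likely be replaced by a classifier or a policy engine, and the privacy flag would come from a data-classification step; the point here is only that the routing decision sits in front of both models.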

Ultimately, IBM’s press release urges enterprises across industries to reconsider the conventional wisdom that “bigger is better” in AI. By adopting hybrid AI models and developing domain-specific SLMs, enterprises can open new opportunities for innovation, differentiation, and value creation.

Conclusion:

IBM’s hybrid AI pattern marks a notable shift in how language models are deployed. By promoting smaller, domain-specific models alongside traditional LLMs, IBM addresses the limitations of large-scale AI deployments. The approach aims to improve the efficiency and performance of AI applications while giving enterprises new avenues for innovation and differentiation. As the market evolves, organizations that adopt hybrid AI may gain a competitive edge by getting more value from their AI investments.