- Mora, a new multi-agent framework, aims to advance open video generation technology.

- Existing models struggle to produce long videos, while closed-source models such as Sora hinder replication and innovation.

- Mora aims to replicate and extend the capabilities demonstrated by closed-source models like Sora.

- By coordinating specialized AI agents, Mora decomposes video generation into distinct subtasks.

- Experimental results show Mora’s competitive performance in generating high-quality videos.

- Despite a performance gap compared to Sora, Mora’s open-source nature and multi-agent architecture offer significant advantages in accessibility and innovation potential.

Main AI News:

In a collaboration between Lehigh University and Microsoft, researchers have introduced Mora, a multi-agent framework intended to push video generation forward. Although image and text synthesis have advanced substantially, video generation has lagged behind. Existing models have struggled to produce videos longer than about 10 seconds, which limits their practical use. Moreover, the dominance of closed-source models such as OpenAI’s Sora has slowed innovation and made replication difficult for academic researchers.

The accompanying research paper aims to replicate and extend the capabilities demonstrated by Sora, opening the way to a range of video generation tasks. While models such as Pika and Gen-2 have shown commendable performance, they remain limited in generating longer videos and fall short of what Sora has demonstrated. Mora addresses this by coordinating several advanced visual AI agents to achieve general-purpose video generation.

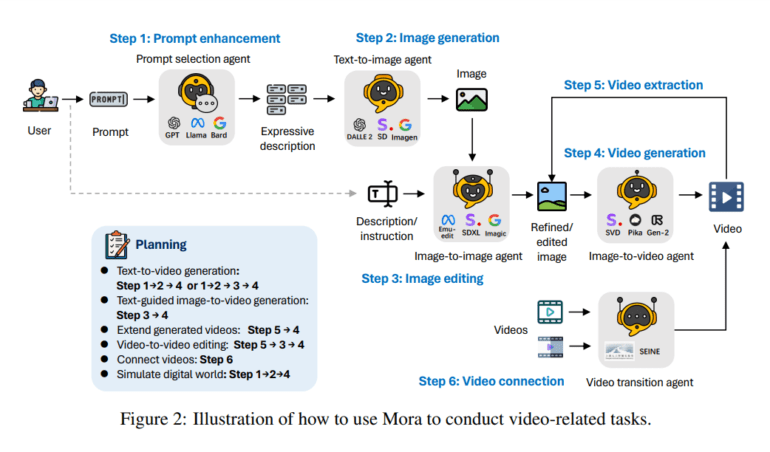

Unlike its predecessors, Mora takes a modular approach, decomposing video generation into discrete subtasks and assigning each to a specialized agent. These subtasks include prompt selection, text-to-image generation, image-to-video generation, and video-to-video editing. By orchestrating collaboration among these agents, Mora aims to replicate, and ultimately surpass, the video generation capabilities demonstrated by Sora.
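
The four-stage decomposition described above can be sketched as a simple sequential pipeline in which each agent consumes the previous agent’s output. This is a minimal Python illustration under stated assumptions, not Mora’s actual code: the `Agent` class, the stage function names, and the string-tagging stand-ins are all hypothetical, and in a real system each stage would call a generative model instead of wrapping a string.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical agent wrapper: a name plus a function from one artifact to the next.
@dataclass
class Agent:
    name: str
    run: Callable[[str], str]


# Hypothetical stand-ins for the four agent roles named in the article.
# Each one only tags a string so the data flow through the pipeline is visible.
def prompt_selection(prompt: str) -> str:
    # Placeholder for a model that refines or selects the user's prompt.
    return f"refined({prompt})"


def text_to_image(prompt: str) -> str:
    # Placeholder for a text-to-image model.
    return f"image({prompt})"


def image_to_video(image: str) -> str:
    # Placeholder for an image-to-video model.
    return f"video({image})"


def video_to_video(video: str) -> str:
    # Placeholder for a video-to-video editing model.
    return f"edited({video})"


PIPELINE: List[Agent] = [
    Agent("prompt_selection", prompt_selection),
    Agent("text_to_image", text_to_image),
    Agent("image_to_video", image_to_video),
    Agent("video_to_video", video_to_video),
]


def generate(prompt: str, pipeline: List[Agent] = PIPELINE) -> str:
    """Run each agent in order, feeding every output into the next agent."""
    artifact = prompt
    for agent in pipeline:
        artifact = agent.run(artifact)
    return artifact


if __name__ == "__main__":
    print(generate("a cat surfing at sunset"))
```

Keeping each stage behind the same small interface is what makes the design modular: swapping in a different text-to-image model, for instance, only means replacing one entry in `PIPELINE`.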

Mora’s multi-agent architecture offers a structured yet adaptable approach to video generation. Because each part of the pipeline is handled by a specialized agent, the same framework can address a range of tasks, from text-to-video synthesis to extending generated videos and even simulating digital environments. Each agent handles a specific input-output transformation, such as text to image or image to video, which helps keep the generated videos coherent and of high quality.
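
One way to read the point about agents handling specific input-output transformations is as typed contracts between stages. The hypothetical sketch below, which is not drawn from Mora’s code, tags each agent with the modality it consumes and produces, then checks whether a proposed chain of agents can turn a given input into the desired output. The `Modality` enum, `AgentSpec`, `validate_chain`, and the task recipes in `TASKS` are illustrative assumptions; in particular, which agents a given task actually uses in Mora is not stated in the article.

```python
from enum import Enum
from typing import Dict, List, NamedTuple


class Modality(Enum):
    TEXT = "text"
    IMAGE = "image"
    VIDEO = "video"


class AgentSpec(NamedTuple):
    name: str
    consumes: Modality
    produces: Modality


# Hypothetical input/output contracts for the four agent roles named in the article.
AGENTS: Dict[str, AgentSpec] = {
    "prompt_selection": AgentSpec("prompt_selection", Modality.TEXT, Modality.TEXT),
    "text_to_image": AgentSpec("text_to_image", Modality.TEXT, Modality.IMAGE),
    "image_to_video": AgentSpec("image_to_video", Modality.IMAGE, Modality.VIDEO),
    "video_to_video": AgentSpec("video_to_video", Modality.VIDEO, Modality.VIDEO),
}


def validate_chain(names: List[str], start: Modality, goal: Modality) -> bool:
    """Return True if the named agents can be chained from `start` to `goal`."""
    current = start
    for name in names:
        spec = AGENTS[name]
        # Reject the chain as soon as an agent cannot accept the current modality.
        if spec.consumes is not current:
            return False
        current = spec.produces
    # The chain is valid only if it ends in the requested output modality.
    return current is goal


# Hypothetical task recipes: an assumption for illustration, not Mora's configuration.
TASKS: Dict[str, List[str]] = {
    "text_to_video": ["prompt_selection", "text_to_image", "image_to_video"],
    "edit_video": ["video_to_video"],
}
```

For example, `validate_chain(TASKS["text_to_video"], Modality.TEXT, Modality.VIDEO)` returns `True`, while a chain that starts with `image_to_video` from a text prompt is rejected. Checking contracts like these before running any model is a cheap way to catch mis-ordered stages.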

Experimental evaluations show Mora performing competitively, with metrics indicating that its videos come close to those produced by Sora. Although a performance gap remains, particularly in holistic evaluations, Mora’s open-source design and multi-agent framework offer clear advantages in accessibility, extensibility, and potential for further innovation. As an open alternative, Mora gives creators and researchers a foundation they can inspect, modify, and build on.

Conclusion:

The Mora framework marks a significant step forward in video generation. By addressing limitations of existing models and coordinating specialized AI agents, Mora opens new possibilities for creators and innovators. Its open-source architecture improves accessibility and invites further innovation across the field, and it may help shape the future of video generation.