TL;DR:

- VIS4ML aims to enhance human-in-the-loop machine learning with data visualization tools.

- Researchers have raised concerns about the validity of claims within the field.

- Issues include inadequate testing, narrow evaluations, and unsupported generalizability claims.

- Transparency and acknowledgment of tool limitations are advocated.

- Collaboration and rigorous testing within specific domains are essential.

Main AI News:

In the realm of machine learning, the significance of data visualization tools for unraveling the intricacies of these systems cannot be overstated. Over the past few years, a new field has emerged, known as Visualization for Machine Learning (VIS4ML), which seeks to empower domain experts engaged in human-in-the-loop processes with a suite of visualizations. These visual aids are intended to facilitate a broad spectrum of tasks, including model design, training, engineering, interpretation, assessment, and debugging.

However, a critical examination of 52 recent VIS4ML research publications by Hariharan Subramonyam, an assistant professor at Stanford Graduate School of Education, and Jessica Hullman of Northwestern University, raises concerns about the claims being made within this domain. Subramonyam asserts that researchers in the VIS4ML space are falling short in several key aspects.

One glaring issue is the lack of ecologically valid testing of VIS4ML tools. Researchers are making grandiose claims about the applicability of their tools without subjecting them to real-world scenarios. Subramonyam and Hullman’s analysis, which has gained acceptance for publication at IEEE VIS, is available for scrutiny on ArXiv.org.

VIS4ML’s Noble Ambitions

VIS4ML researchers aspire to bridge the gap between humans and machine learning models to enhance the latter’s performance. While this aspiration is commendable, it presents a formidable challenge. Many machine learning models operate as complex black boxes, shrouding their internal mechanisms in secrecy. To shed light on these enigmatic models, novel data visualization tools are imperative.

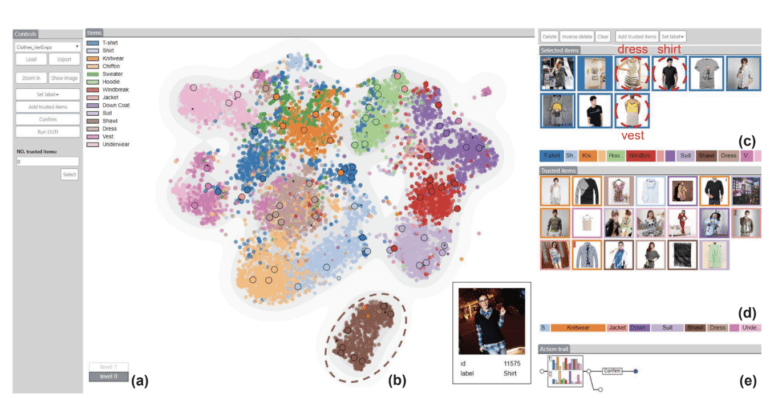

Certain VIS4ML researchers have taken commendable strides in creating innovative visualization tools. For example, they have developed tools that generate scatter plots illustrating clusters in high-dimensional data, making it easier to identify mislabeled items. Other tools provide insights into convolutional network layers or visualize potential features of an ML model to aid decision-making.

The Generalizability Challenge

Despite the progress made in developing VIS4ML tools, Subramonyam and Hullman’s analysis reveals some disconcerting patterns. These tools are frequently tested by a limited group of experts, often those involved in their design. Furthermore, they are typically evaluated using only standard, popular datasets, resulting in a narrow assessment of their utility. As Subramonyam points out, the criteria for measuring these tools’ usefulness are overly restricted.

In addition, only a third of the 52 reviewed VIS4ML papers went beyond seeking expert opinions on tool utility to report whether the tool actually improved ML model performance. The remaining papers relied on hypothetical claims, asserting that the tools would enhance model performance across all types and datasets without providing empirical evidence.

A Call for Transparency

To address these shortcomings, Subramonyam advocates for greater transparency among VIS4ML researchers. He emphasizes the need to acknowledge and communicate the limitations of their tools, curbing unsupported claims of generalizability.

For those committed to advancing human-in-the-loop ML, Subramonyam insists on a more rigorous evaluation of VIS4ML tools, grounded in real-world contexts. To facilitate this, he and Hullman outline concrete guidelines for transparency in their research.

Furthermore, Subramonyam underscores the importance of collaboration between visualization solution developers and the communities they aim to serve. Human-centered AI, he argues, requires a multidisciplinary approach and rigorous testing within specific domains and workflows to ensure practical effectiveness. Tackling this challenge demands a broader perspective and a commitment to real-world impact.

Conclusion:

The challenges and lack of transparency in the Visualization for Machine Learning (VIS4ML) field could impact its credibility. To thrive in the market, VIS4ML researchers should prioritize rigorous testing, transparency, and collaboration with the end-users to ensure practical effectiveness in real-world applications.