- AI’s growth demands hardware that fits within power constraints; in-memory computing (IMC) emerges as a promising solution.

- Hardware-aware Neural Architecture Search (HW-NAS) optimizes neural networks for IMC hardware, balancing efficiency and performance.

- Challenges persist, including the absence of unified frameworks and benchmark standards for IMC hardware.

- Recent HW-NAS frameworks integrate hardware parameters, automating optimization for energy, latency, and memory.

- IMC departs from traditional von Neumann architectures by processing data within memory, reducing data movement and enhancing efficiency.

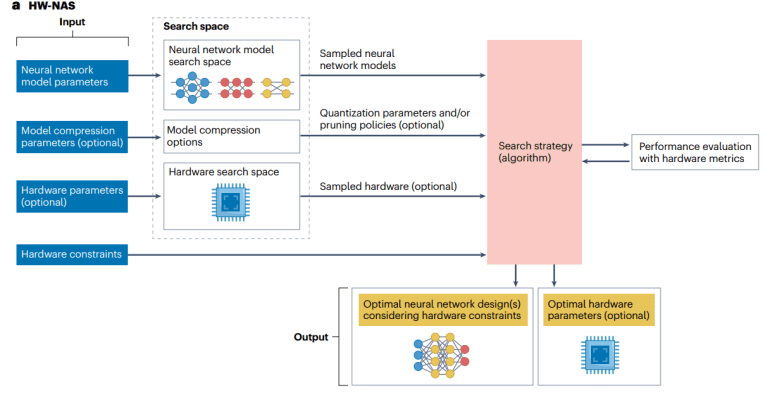

- HW-NAS integrates model compression, neural network search, hyperparameter exploration, and hardware optimization.

- The search space spans neural network operations and hardware design, aiming for optimal performance within hardware constraints.

Main AI News:

In today’s landscape, the rapid advancement of AI and its increasingly complex neural networks demands hardware that fits within tight power and resource budgets. In-memory computing (IMC) has emerged as a promising answer, spawning a wide range of IMC devices and architectures. But the journey from concept to deployment requires a robust hardware-software co-design toolchain, one that spans devices, circuits, and algorithms. The rise of the Internet of Things (IoT) further complicates matters, ushering in an era of heightened data generation and raising the need for advanced AI processing capabilities. Efficient deep learning accelerators are especially crucial for edge processing, and IMC-based designs cut data movement costs, improve energy efficiency, and reduce latency. Achieving these gains, however, requires automated optimization across a large number of design parameters.

Enter the realm of hardware-aware neural architecture search (HW-NAS), where researchers from King Abdullah University of Science and Technology, Rain Neuromorphics, and IBM Research are charting a path toward efficient neural networks tailored for IMC hardware. HW-NAS searches for neural network models optimized around the specific features and constraints of IMC hardware. Rather than tuning hardware and software in isolation, it co-optimizes both, balancing performance against computational demands. Yet challenges loom large, from the absence of a unified framework to the lack of consistent benchmarks across diverse neural network models and IMC architectures.

HW-NAS goes beyond traditional neural architecture search by incorporating hardware parameters into the search itself, automating the optimization of neural networks under hardware constraints such as energy consumption, latency, and memory size. Recent frameworks, emerging since the early 2020s, enable joint optimization of neural networks and IMC hardware parameters, from crossbar size to ADC/DAC resolution. Yet existing NAS surveys often overlook the specific characteristics of IMC hardware. The researchers’ review aims to close that gap by examining HW-NAS methods tailored specifically for IMC, critically comparing current frameworks, and outlining a roadmap for future work. It stresses the need to build IMC design optimizations into HW-NAS frameworks and offers practical recommendations for applying HW-NAS in IMC hardware-software co-design.
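Crossbar size is one of the hardware parameters these frameworks tune. As a rough illustration of why it matters, the sketch below counts how many square crossbar tiles a fully connected layer occupies and what fraction of their cells actually hold weights. It is a minimal sketch under simplifying assumptions (one weight per cell, inputs on rows and outputs on columns, no weight replication or bit-slicing); the function names and the 784-by-100 example layer are hypothetical and not taken from any specific framework.

```python
import math


def crossbars_needed(in_features: int, out_features: int, xbar_rows: int, xbar_cols: int) -> int:
    """Tiles needed to hold an (in_features x out_features) weight matrix.

    Assumes inputs drive crossbar rows, outputs are read from columns,
    one weight per cell, and no replication or bit-slicing (hypothetical mapping).
    """
    row_tiles = math.ceil(in_features / xbar_rows)   # tiles stacked along the input dimension
    col_tiles = math.ceil(out_features / xbar_cols)  # tiles side by side along the output dimension
    return row_tiles * col_tiles


def utilization(in_features: int, out_features: int, xbar_rows: int, xbar_cols: int) -> float:
    """Fraction of allocated crossbar cells that actually store a weight."""
    tiles = crossbars_needed(in_features, out_features, xbar_rows, xbar_cols)
    return (in_features * out_features) / (tiles * xbar_rows * xbar_cols)


# Hypothetical 784-input, 100-output fully connected layer mapped onto square crossbars.
for size in (64, 128, 256):
    n = crossbars_needed(784, 100, size, size)
    print(f"{size}x{size}: {n} crossbars, utilization {utilization(784, 100, size, size):.3f}")
```

Under these assumptions, 64x64 tiles need 26 crossbars at about 74% utilization, 128x128 tiles need 7 at about 68%, and 256x256 tiles need only 4 but leave roughly 70% of their cells unused. This is a small instance of the tile-count versus utilization trade-off that a HW-NAS framework has to weigh when crossbar size is part of the search.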

In traditional von Neumann architectures, the high energy cost of moving data between memory and computing units remains a persistent challenge, despite gains in processor parallelism. IMC addresses this by processing data inside the memory itself, cutting data movement costs while reducing latency and improving energy efficiency. IMC systems draw on diverse memory technologies such as SRAM, RRAM, and PCM, organized into crossbar arrays that carry out matrix-vector multiplications directly where the weights are stored. Optimization spans devices, circuits, and architectures, and HW-NAS is often used to co-optimize models and hardware for deep learning accelerators, balancing performance, computation demands, and scalability.
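IMC crossbars of this kind typically compute matrix-vector products in the analog domain: weights are stored as device conductances G, inputs are applied as row voltages V, and by Ohm's law and Kirchhoff's current law each column current is I_j = Σ_i V_i G_ij. The NumPy sketch below models that idealized product followed by a uniform ADC. It assumes non-negative inputs and conductances, ignores device noise, wire resistance, and nonlinearity, and uses a hypothetical `adc_bits` parameter whose full-scale range is set to the worst-case column current; it is an illustration, not a model of any specific IMC chip.

```python
import numpy as np


def crossbar_mvm(voltages, conductances, adc_bits=8):
    """Idealized analog crossbar matrix-vector multiply followed by a uniform ADC.

    voltages: shape (rows,), non-negative input voltages on the crossbar rows.
    conductances: shape (rows, cols), non-negative device conductances (the weights).
    Column current I_j = sum_i V_i * G_ij (Ohm's law plus Kirchhoff's current law).
    """
    voltages = np.asarray(voltages, dtype=float)
    conductances = np.asarray(conductances, dtype=float)
    currents = voltages @ conductances  # analog accumulation along each column

    # Hypothetical ADC: full scale equals the largest current any column could produce.
    full_scale = voltages.max() * conductances.sum(axis=0).max()
    if full_scale == 0:
        return currents  # nothing to quantize
    step = full_scale / (2 ** adc_bits - 1)
    return np.round(currents / step) * step  # snap each column current to the nearest ADC level


# Usage: compare quantized outputs against the exact product for several ADC resolutions.
rng = np.random.default_rng(0)
V = rng.uniform(0.0, 0.2, size=64)          # row voltages (arbitrary illustrative range)
G = rng.uniform(1e-6, 1e-4, size=(64, 32))  # conductances (arbitrary illustrative range)
exact = V @ G
for bits in (4, 6, 8):
    err = np.abs(crossbar_mvm(V, G, adc_bits=bits) - exact).max()
    print(f"{bits}-bit ADC: max abs error {err:.3e}")
```

Because the ADC rounds each column current to the nearest level, the worst-case error per output is half a quantization step. Raising `adc_bits` therefore trades converter cost for accuracy, which is one reason ADC resolution shows up as a tunable parameter in HW-NAS for IMC.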

HW-NAS for IMC integrates four deep learning techniques: model compression, neural network model search, hyperparameter exploration, and hardware optimization. Together, these methods explore large design spaces in search of the best pairing of neural network architecture and hardware configuration. Model compression techniques such as quantization and pruning reduce a model's numerical precision and size, while neural network model search selects layers, operations, and connections. Hyperparameter exploration tunes hyperparameters for a fixed network architecture, whereas hardware optimization adjusts components such as crossbar size and precision. The resulting search space spans both neural network operations and hardware design, with the goal of achieving efficient performance within predefined hardware constraints.
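To make the joint search space concrete, here is a toy sketch that combines network choices (width, depth), compression (weight bit-width), and hardware knobs (crossbar size, ADC bits) into a single space and searches it under a cost budget. Everything in it is assumed for illustration: the option lists, `proxy_accuracy`, and `proxy_cost` are hypothetical stand-ins, not trained-model accuracies or measured hardware figures, and plain random search is used in place of the more sophisticated strategies (for example evolutionary or gradient-based search) that NAS methods commonly employ.

```python
import itertools
import math
import random

# Hypothetical joint search space mixing network, compression, and IMC hardware choices.
SEARCH_SPACE = {
    "width": [64, 128, 256],      # neurons per hidden layer (network model search)
    "depth": [2, 3, 4],           # number of hidden layers (network model search)
    "weight_bits": [2, 4, 8],     # weight quantization (model compression)
    "xbar_size": [64, 128, 256],  # square crossbar dimension (hardware optimization)
    "adc_bits": [4, 6, 8],        # ADC resolution (hardware optimization)
}


def proxy_accuracy(cfg):
    """Toy accuracy proxy: larger and more precise models score higher. Not a real predictor."""
    capacity = math.log2(cfg["width"] * cfg["depth"])
    precision = min(cfg["weight_bits"], cfg["adc_bits"])
    return capacity + 0.5 * precision


def proxy_cost(cfg, in_features=784):
    """Toy hardware cost in arbitrary units (hypothetical model, not measured data)."""
    dims = [in_features] + [cfg["width"]] * cfg["depth"]
    s = cfg["xbar_size"]
    # Crossbar tiles needed to map every layer's weight matrix.
    tiles = sum(math.ceil(a / s) * math.ceil(b / s) for a, b in zip(dims, dims[1:]))
    # Assumed: cost grows with tile count, weight precision, and ADC resolution.
    return tiles * cfg["weight_bits"] * 2 ** cfg["adc_bits"]


def random_search(n_trials, budget, seed=0):
    """Sample configurations and keep the best-scoring one that fits the cost budget."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        cfg = {k: rng.choice(v) for k, v in SEARCH_SPACE.items()}
        if proxy_cost(cfg) > budget:
            continue  # violates the hardware constraint
        score = proxy_accuracy(cfg)
        if best is None or score > best[0]:
            best = (score, cfg)
    return best


def exhaustive_search(budget):
    """Enumerate the whole (small) space; a reference answer for random_search."""
    keys = list(SEARCH_SPACE)
    best = None
    for values in itertools.product(*(SEARCH_SPACE[k] for k in keys)):
        cfg = dict(zip(keys, values))
        if proxy_cost(cfg) <= budget:
            score = proxy_accuracy(cfg)
            if best is None or score > best[0]:
                best = (score, cfg)
    return best


budget = 20_000  # hypothetical cost budget in the same arbitrary units
print("random:    ", random_search(100, budget))
print("exhaustive:", exhaustive_search(budget))
```

Because this toy space has only 243 configurations, `exhaustive_search` can serve as a ground-truth check on `random_search`. Realistic HW-NAS spaces are far too large to enumerate, which is why search strategy and fast hardware cost estimation matter so much in practice.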

Conclusion:

The convergence of hardware and software optimization, facilitated by techniques like HW-NAS for IMC, marks a significant step forward in maximizing AI efficiency. This integration promises not only better performance but also greater energy efficiency and scalability, opening opportunities for the market to capitalize on advanced AI processing capabilities. Companies that invest in these technologies stand to gain a competitive edge in an increasingly AI-driven landscape.