- KIVI is a plug-and-play quantization algorithm tailored for key-value (KV) caches in large language models (LLMs).

- Unlike quantization methods that depend on extensive fine-tuning, KIVI requires none, which makes it easy for researchers and developers to integrate.

- Reported tests show KIVI reducing peak memory usage by up to 2.6 times relative to a full-precision baseline.

- On real inference workloads, throughput improves by up to 3.47 times while accuracy stays comparable to full-precision baselines.

- These memory savings could improve performance and scalability across a range of LLM applications.

Main AI News:

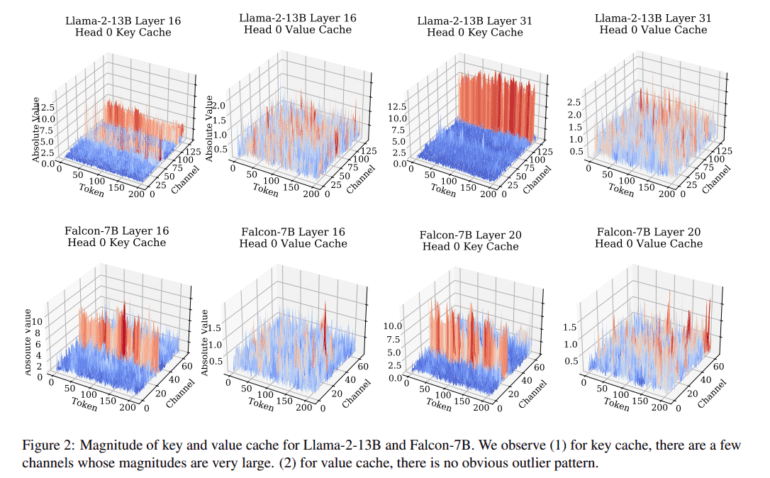

Efficiency is a central concern for large language models (LLMs). As demand grows for faster text generation and more accurate responses, the challenge is to cut memory usage without degrading performance. KIVI is a plug-and-play quantization algorithm designed specifically for the key-value (KV) caches in LLMs.

Reducing memory footprint through quantization has traditionally been tedious, often requiring extensive fine-tuning to reach satisfactory results. That overhead has kept many researchers and developers from using quantization methods effectively.

KIVI takes a different approach: it quantizes the KV cache without any fine-tuning. Researchers and developers can therefore integrate it into their LLMs without investing significant time and resources in optimization.
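To make "quantization without fine-tuning" concrete, here is a minimal sketch of asymmetric min-max quantization, where the scale and zero point come directly from the data and nothing is trained. The KIVI paper describes grouping keys per channel and values per token; the toy below only illustrates why the choice of grouping matters and is not the authors' implementation. The function names, the 2-bit width, and the sample matrix are all assumptions made for illustration.

```python
def quantize_group(values, bits=2):
    """Asymmetric min-max quantization of one group of floats.

    Returns integer codes in [0, 2**bits - 1] plus the scale and zero point
    needed to reconstruct approximate values. No training is involved.
    """
    levels = (1 << bits) - 1
    zero = min(values)
    span = max(values) - zero
    scale = span / levels if span > 0 else 1.0  # constant group: any scale works
    codes = [min(levels, max(0, round((v - zero) / scale))) for v in values]
    return codes, scale, zero


def dequantize_group(codes, scale, zero):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale + zero for c in codes]


def roundtrip_per_token(matrix, bits=2):
    """Quantize each row (one token's vector) as its own group, then reconstruct."""
    return [dequantize_group(*quantize_group(row, bits)) for row in matrix]


def roundtrip_per_channel(matrix, bits=2):
    """Quantize each column (one channel across tokens) as its own group."""
    columns = [list(col) for col in zip(*matrix)]
    restored = [dequantize_group(*quantize_group(col, bits)) for col in columns]
    return [list(row) for row in zip(*restored)]  # transpose back to tokens x channels


def max_abs_error(original, restored):
    """Largest absolute element-wise difference between two matrices."""
    return max(abs(a - b) for ro, rr in zip(original, restored) for a, b in zip(ro, rr))


# Hypothetical "key" matrix: 3 tokens x 3 channels, channel 1 holds much larger values.
keys = [[0.1, 8.0, -0.2],
        [0.0, 7.5, 0.3],
        [-0.1, 9.0, 0.1]]

err_token = max_abs_error(keys, roundtrip_per_token(keys))
err_channel = max_abs_error(keys, roundtrip_per_channel(keys))
print(f"per-token error:   {err_token:.3f}")
print(f"per-channel error: {err_channel:.3f}")
```

In this toy data, one channel with large values stretches the range of every per-token group, so grouping by channel keeps the small-valued channels precise. Real KV caches are far larger and are usually split into fixed-size groups, which this sketch omits.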

The reported results support this. In testing, KIVI reduced peak memory usage by up to 2.6 times relative to a full-precision baseline while preserving model quality. A smaller KV cache leaves room for larger batch sizes, which in turn raised throughput by up to 3.47 times on real inference workloads.

For instance, in a test with Mistral-v0.2, KIVI used 5.3 times less memory for the KV cache while maintaining accuracy comparable to the full-precision baseline. The result suggests that KV cache quantization can substantially improve memory efficiency in LLMs, with benefits for performance and scalability across applications.
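As a rough back-of-the-envelope aid, the sketch below estimates KV cache size and shows why a low-bit cache compresses by less than the nominal bit ratio: each quantization group also stores a scale and a zero point. Every number here is an assumption chosen for illustration (the model shape, sequence length, 2-bit codes, group size of 32, and 16-bit metadata); it does not reproduce the measurement reported above.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch, bits_per_value):
    """Approximate KV cache size: keys and values for every layer, head, and token."""
    elements = 2 * layers * kv_heads * head_dim * seq_len * batch  # 2 = keys + values
    return elements * bits_per_value / 8


def effective_bits(code_bits, group_size, metadata_bits=32):
    """Bits per value once per-group metadata (scale + zero point) is amortized."""
    return code_bits + metadata_bits / group_size


# Hypothetical model shape and workload, assumed for illustration only.
shape = dict(layers=32, kv_heads=8, head_dim=128, seq_len=4096, batch=1)

full = kv_cache_bytes(**shape, bits_per_value=16)
quant = kv_cache_bytes(**shape, bits_per_value=effective_bits(code_bits=2, group_size=32))

print(f"full precision: {full / 2**20:.0f} MiB")
print(f"quantized:      {quant / 2**20:.0f} MiB")
print(f"ratio:          {full / quant:.2f}x (nominal 16/2 = 8x)")
```

Under these assumptions the metadata adds one bit per value, so the ratio is 16/3 rather than 8. A real deployment may also keep some recent tokens in full precision, which lowers the ratio further.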

Conclusion:

KIVI is a notable step forward for large language models. Its plug-and-play approach addresses the pressing problem of memory usage and opens the way to faster LLM inference. If the reported efficiency holds up in practice, it could help businesses deploy advanced language capabilities with better speed and scalability.