TL;DR:

- Meta, Microsoft, and OpenAI plan to use AMD’s Instinct MI300X AI chip, signaling a shift away from Nvidia.

- AMD’s MI300X pairs a new architecture with 192GB of HBM3 memory, making it a strong alternative to Nvidia’s H100.

- The key challenge for AMD is convincing companies that depend on Nvidia to adopt its GPUs.

- Pricing is critical: AMD’s chip must be cheaper than Nvidia’s to buy and to operate.

- Major players such as Meta, Microsoft, and Oracle have committed to using the MI300X for AI workloads.

- OpenAI will support AMD GPUs in its Triton software for AI research.

- While AMD’s initial sales projections are modest, the AI GPU market is expected to reach $400 billion in the next four years.

Main AI News:

Meta, Microsoft, and OpenAI have announced plans to use AMD’s newest AI chip, the Instinct MI300X, signaling a shift away from the costly Nvidia graphics processors that have long dominated artificial intelligence. The move could disrupt the AI chip market, challenge Nvidia’s dominant position, and give companies a potentially cheaper option for developing AI models.

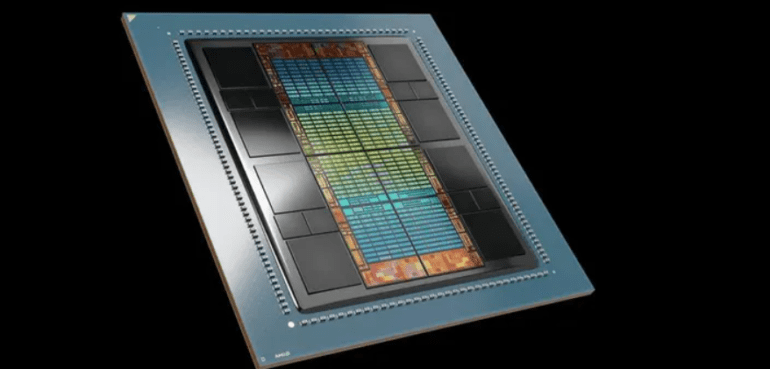

AMD’s new high-end chip, slated to ship early next year, is built on a new architecture whose standout feature is 192GB of high-performance HBM3 memory. The larger, faster memory speeds up data transfer and lets the chip hold larger AI models. AMD CEO Lisa Su compared the MI300X and the systems built around it directly with Nvidia’s flagship AI GPU, the H100, arguing that the MI300X’s performance gains translate into a better user experience.

The pivotal question for AMD, however, is whether companies that have built on Nvidia will invest the resources needed to add another GPU supplier. Su acknowledged that such a transition takes effort and said AMD is committed to addressing that challenge.

Price is also crucial. AMD has not disclosed MI300X pricing, but Nvidia’s chips can cost around $40,000 each. Su said AMD’s chip will need to be cheaper than Nvidia’s both to buy and to operate if it is to win customers away.

AMD has already secured commitments from some of the biggest GPU buyers, including Meta and Microsoft, which were the largest purchasers of Nvidia H100 GPUs in 2023. Meta plans to use MI300X GPUs for AI inference workloads such as AI stickers, image editing, and running its assistant. Microsoft CTO Kevin Scott said the company will offer access to MI300X chips through its Azure cloud service, and Oracle’s cloud platform will also use the chips.

OpenAI has also pledged to support AMD GPUs in Triton, its open-source software used in AI research to access chip features. AMD’s own sales projections for the MI300X are conservative: it expects about $2 billion in total data center GPU revenue in 2024. Still, the broader AI GPU market is expected to grow to $400 billion over the next four years, which shows how much is at stake in high-end AI chips.

Su remains pragmatic. She acknowledges Nvidia’s current market dominance but is confident that AMD can win a substantial share of the expanding AI chip market. As demand for advanced AI capabilities grows, AMD is positioning itself as a viable contender, aiming to capture a meaningful piece of the projected $400 billion market by 2027.

Conclusion:

Tech giants’ adoption of AMD’s AI chip marks a significant shift in the market and challenges Nvidia’s dominance. AMD still has to persuade established Nvidia customers to switch, but strong performance and potentially lower pricing could reshape the AI chip landscape. The projected growth of the AI GPU market shows how large, and how contested, this opportunity has become.