- Meta Research unveils the Large Language Model Transparency Tool (LLM-TT), an open-source toolkit.

- LLM-TT dissects Transformer-based language models, offering insights into information flow and component contributions.

- Key functionalities include model selection, graph browsing, token representation adjustment, and interactive exploration.

- LLM-TT enhances understanding, fairness, and accountability in AI deployments.

Main AI News:

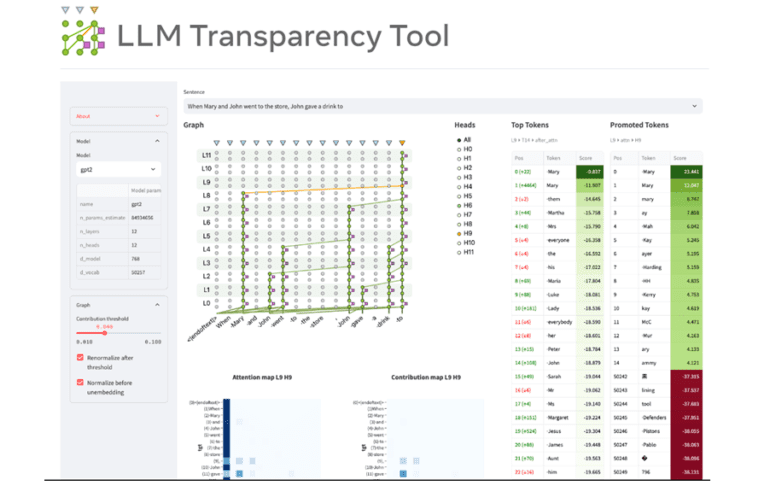

As AI systems move into production, understanding how language models work internally is central to trusting their output. Meta Research has introduced the Large Language Model Transparency Tool (LLM-TT), an open-source interactive toolkit for analyzing Transformer-based language models.

LLM-TT is aimed at analysts and developers who want to understand how Transformer-based language models arrive at their outputs. It traces how information flows from input to output and lets users inspect the contributions of individual attention heads and neurons.

LLM-TT is built on TransformerLens hooks, which make it compatible with a wide range of models from platforms like Hugging Face. The hooks let users observe how information moves through the network during inference and examine how specific components influence the model's outputs.
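To make the idea of a hook concrete, here is a minimal sketch in plain Python. It is not the TransformerLens or LLM-TT API: `ToyLayer`, `ToyModel`, and `add_hook` are hypothetical names invented for illustration, and the "layers" are simple residual-style updates on a list of floats. The point is only to show how a callback registered on a model can record intermediate activations while inference runs.

```python
# Toy illustration of forward hooks; NOT the TransformerLens or LLM-TT API.
from typing import Callable, Dict, List

Vector = List[float]
Hook = Callable[[str, Vector], None]


class ToyLayer:
    """Hypothetical layer that adds a scaled copy of its input (a residual-style update)."""

    def __init__(self, name: str, scale: float) -> None:
        self.name = name
        self.scale = scale

    def forward(self, x: Vector) -> Vector:
        return [v + self.scale * v for v in x]


class ToyModel:
    """Runs layers in order and calls every registered hook after each layer."""

    def __init__(self, layers: List[ToyLayer]) -> None:
        self.layers = layers
        self.hooks: List[Hook] = []

    def add_hook(self, hook: Hook) -> None:
        self.hooks.append(hook)

    def run(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer.forward(x)
            for hook in self.hooks:
                hook(layer.name, list(x))  # pass a copy so hooks cannot alter the run
        return x


# Record the activation after each layer, keyed by layer name.
cache: Dict[str, Vector] = {}


def record(name: str, activation: Vector) -> None:
    cache[name] = activation


model = ToyModel([ToyLayer("block0", 0.5), ToyLayer("block1", 1.0)])
model.add_hook(record)
output = model.run([1.0, 2.0])
print(cache)
```

After the run, `cache` holds one entry per layer, which is the kind of per-component record an analysis tool needs in order to show what each part of the network contributed.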

As demand for transparency and accountability in AI grows, the need for tools like LLM-TT becomes clearer. Language models now drive decision-making and content generation across many applications, which makes it important to understand and monitor how they behave.

By exposing how a language model arrives at its output, LLM-TT addresses a gap in AI transparency. It helps users verify model behavior, uncover biases, and check alignment with ethical standards.

Key functionalities of LLM-TT include:

- Selection of a model and prompt to initiate inference.

- Browsing the contribution graph, with selection of the token to build the graph from.

- Adjustment of contribution threshold and selection of token representations.

- Visualization of token projection to the output vocabulary, showcasing promoted or suppressed tokens.

- Interactive elements: clicking an edge reveals details about the contributing attention head, and selecting a head shows which tokens it promotes or suppresses.

- Interactive exploration of Feedforward Network (FFN) blocks and neurons within these blocks, enabling detailed inspection.
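Two of the functionalities listed above, the contribution threshold and the projection to the output vocabulary, can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not LLM-TT's implementation: the article does not describe how the tool computes contributions, and the edge weights, the three-word vocabulary, the unembedding vectors, and the function names `prune_edges` and `project_to_vocab` are all made up for illustration.

```python
# Toy sketch; names, numbers, and the tiny vocabulary are illustrative assumptions.
from typing import Dict, List, Tuple

Edge = Tuple[str, str, float]  # (source node, target node, contribution weight)


def prune_edges(edges: List[Edge], threshold: float) -> List[Edge]:
    """Keep only edges whose contribution reaches the threshold."""
    return [e for e in edges if e[2] >= threshold]


def project_to_vocab(update: List[float], unembed: Dict[str, List[float]]) -> Dict[str, float]:
    """Dot a component's output vector with each token's unembedding vector."""
    return {tok: sum(u * w for u, w in zip(update, vec)) for tok, vec in unembed.items()}


# Raising the threshold hides weak edges and leaves a smaller graph to browse.
edges = [("head_0", "resid_1", 0.50), ("head_1", "resid_1", 0.02), ("ffn_0", "resid_1", 0.30)]
kept = prune_edges(edges, threshold=0.05)

# A positive score means the component pushes the token up; negative pushes it down.
unembed = {"cat": [1.0, 0.0], "dog": [0.0, 1.0], "car": [-1.0, -1.0]}
scores = project_to_vocab([2.0, -1.5], unembed)
promoted = sorted((t for t, s in scores.items() if s > 0), key=lambda t: -scores[t])
suppressed = sorted((t for t, s in scores.items() if s < 0), key=lambda t: scores[t])

print("kept edges:", kept)
print("promoted:", promoted, "suppressed:", suppressed)
```

In this toy, the weak `head_1` edge drops out of the graph, and the example component promotes "cat" while suppressing "dog" and "car".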

Conclusion:

The introduction of Meta Research’s LLM Transparency Tool marks a notable advance in AI transparency and accountability. As organizations work to adopt AI ethically and with a clear view of its behavior, tools like LLM-TT offer practical support. By giving detailed insight into the inner workings of Transformer-based language models, LLM-TT promotes transparency, fairness, and accountability, and contributes to a more responsible and trustworthy AI market.