- Evolution from Large Language Models (LLMs) to Small Language Models (SLMs) is reshaping language model architectures.

- Transformers, fundamental to both LLMs and SLMs, face challenges such as low inductive bias and complexity that grows quadratically with input sequence length.

- State Space Models (SSMs) like S4 aim to handle longer sequences efficiently but encounter limitations in processing information-dense data.

- Mamba, a selective state space sequence modeling technique, addresses some of the limitations but faces stability issues when scaling to large networks.

- Microsoft’s SiMBA architecture combines Mamba for sequence modeling with Einstein FFT (EinFFT) for channel modeling.

- EinFFT enhances global visibility and energy concentration, helping extract crucial patterns from the data.

- SiMBA’s Channel Mixing component integrates Spectral Transformation, Spectral Gating Network, and Inverse Spectral Transformation.

- Performance evaluations show SiMBA outperforming state-of-the-art models across various metrics, including Mean Squared Error (MSE) and Mean Absolute Error (MAE) on time series forecasting.

- SiMBA achieves remarkable performance on the ImageNet 1K dataset with an 84.0% top-1 accuracy, surpassing prominent convolutional networks and leading transformers.

Main AI News:

In the realm of language models, the evolution from Large Language Models (LLMs) to Small Language Models (SLMs) marks a significant shift. Both LLMs and SLMs rely on transformers as their fundamental components, and the attention networks inside transformers have proven their strength across many domains. However, attention networks come with their share of challenges, including low inductive bias and computational cost that grows quadratically with input sequence length.
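
To make the quadratic cost concrete, here is a minimal pure-Python sketch of generic scaled dot-product attention. It is illustrative only, not code from any particular LLM or from SiMBA, and the toy input `X` is arbitrary. Every query is scored against every key, so a sequence of n tokens yields an n × n score matrix: doubling the sequence length quadruples the number of scores.

```python
import math


def softmax(row):
    # Subtract the row maximum before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, scores). `scores` holds one entry per (query, key)
    pair, i.e. an n x n matrix for n tokens: this is the quadratic cost.
    """
    d = len(Q[0])
    scores = [[sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
              for q in Q]
    weights = [softmax(row) for row in scores]
    # Each output is a weighted sum of the value vectors.
    outputs = [[sum(w * v[j] for w, v in zip(w_row, V)) for j in range(len(V[0]))]
               for w_row in weights]
    return outputs, scores


n, d = 6, 4
X = [[math.sin(i + j) for j in range(d)] for i in range(n)]  # toy token embeddings
out, scores = attention(X, X, X)
print(len(scores) * len(scores[0]))  # 36 pairwise scores for n = 6
```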

To tackle these challenges, State Space Models (SSMs) such as S4 have emerged, aiming to handle long sequences efficiently. Despite their efficacy, S4 and similar models struggle with information-dense data, particularly in domains such as computer vision, and with discrete data such as genomic sequences.
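
The core of an SSM is a linear recurrence that updates a hidden state once per input step. The sketch below is a simplified illustration under stated assumptions: a single input channel, an already-discretized diagonal state matrix, and hypothetical parameter values. S4's structured parameterization and its convolution-based training mode are deliberately omitted. Because the state is updated once per step, cost grows linearly with sequence length, and because the parameters are fixed, the impulse response is a fixed convolution kernel.

```python
def ssm_scan(u, A_bar, B_bar, C):
    """Run a discretized, time-invariant diagonal SSM over a 1-D input sequence.

    State update: x_k[i] = A_bar[i] * x_{k-1}[i] + B_bar[i] * u_k
    Output:       y_k    = sum_i C[i] * x_k[i]
    One pass over the input, so cost is linear in len(u).
    """
    N = len(A_bar)
    x = [0.0] * N
    ys = []
    for u_k in u:
        x = [A_bar[i] * x[i] + B_bar[i] * u_k for i in range(N)]
        ys.append(sum(C[i] * x[i] for i in range(N)))
    return ys


def impulse_kernel(A_bar, B_bar, C, length):
    """Equivalent convolution kernel K_k = sum_i C[i] * A_bar[i]**k * B_bar[i]."""
    return [sum(c * a ** k * b for a, b, c in zip(A_bar, B_bar, C)) for k in range(length)]


# Hypothetical 2-dimensional state with fixed (time-invariant) parameters.
A_bar, B_bar, C = [0.9, 0.5], [1.0, 1.0], [1.0, -1.0]
y = ssm_scan([1.0, 0.0, 0.0], A_bar, B_bar, C)  # response to a unit impulse
print(y)
```

Since the parameters never depend on the input, the scan and the kernel give the same impulse response; this fixed behavior is exactly what the selective approach in the next paragraph relaxes.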

Addressing these limitations, Mamba, a selective state space sequence modeling technique, was introduced. However, Mamba faces stability issues, especially when scaling to large networks for computer vision datasets.
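
Mamba's key change is selectivity: the step size Δ and the projections B and C are computed from the current input rather than being fixed. The following single-channel sketch is a simplification under stated assumptions: scalar hypothetical weights (`w_delta`, `w_B`, `w_C`) stand in for learned linear layers, A is discretized with a zero-order hold while B uses a simpler Euler-style step, and a plain sequential loop replaces Mamba's hardware-aware parallel scan.

```python
import math


def softplus(z):
    # log(1 + e^z), written to avoid overflow for large z.
    return z if z > 20 else math.log1p(math.exp(z))


def selective_scan(u, A, w_delta, w_B, w_C):
    """Simplified Mamba-style selective scan over one input channel.

    A:       negative diagonal entries of the continuous-time state matrix.
    w_delta, w_B, w_C: hypothetical weights standing in for learned projections.
    Unlike a time-invariant SSM, delta, B and C change with each input u_k.
    """
    N = len(A)
    x = [0.0] * N
    ys = []
    for u_k in u:
        delta = softplus(w_delta * u_k)      # input-dependent step size, always > 0
        B_k = [wb * u_k for wb in w_B]       # input-dependent input projection
        C_k = [wc * u_k for wc in w_C]       # input-dependent output projection
        # Zero-order hold for A (exp(delta * A)); Euler-style step for B.
        x = [math.exp(delta * A[i]) * x[i] + delta * B_k[i] * u_k for i in range(N)]
        ys.append(sum(C_k[i] * x[i] for i in range(N)))
    return ys


y = selective_scan([1.0, 0.0, 3.0], A=[-1.0, -2.0], w_delta=1.0, w_B=[1.0, 0.5], w_C=[1.0, 1.0])
print(y)
```

A larger input produces a larger Δ, so the state decays faster and the current input dominates; a zero input writes nothing new into the state. This input-dependent gating is what lets the model choose what to remember.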

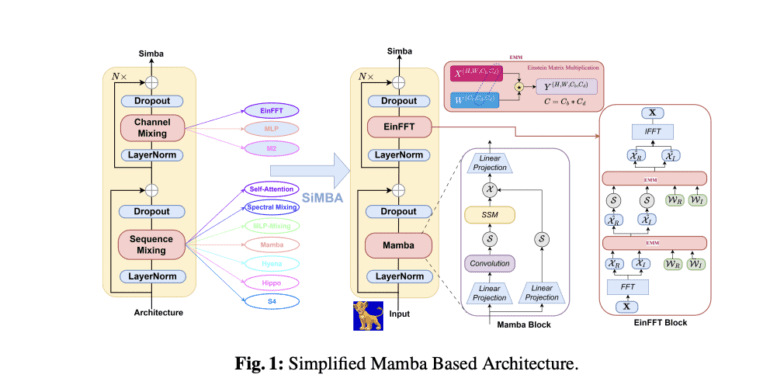

Enter SiMBA, the latest architectural innovation from Microsoft. SiMBA combines the power of Mamba for sequence modeling with Einstein FFT (EinFFT) for channel modeling. By incorporating EinFFT, SiMBA overcomes the instability issues observed in Mamba when scaling to large networks, providing a robust solution for both small-scale and large-scale networks.

SiMBA is benchmarked against a broad range of architecture families, including convolutional models, transformers, MLP-mixers, spectral mixers, and hybrid models that combine different approaches. Its Channel Mixing component integrates three stages: Spectral Transformation, a Spectral Gating Network that uses Einstein Matrix multiplication, and Inverse Spectral Transformation. By mixing channels in the frequency domain, EinFFT enhances global visibility and energy concentration, helping the model extract crucial data patterns.
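
The three-stage channel mixing described above can be sketched for a single token as follows. This is an illustrative reconstruction, not Microsoft's implementation: it uses a naive O(C²) DFT in place of a real FFT, a single block-diagonal complex weight `W` as the Einstein-style gating step (the loop computes what `einsum('bi,bio->bo')` would), and it omits any biases or nonlinearities the actual spectral gating network may use. Keeping only the real part after the inverse transform is also an assumption, and the weight values are hypothetical.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (stands in for an FFT)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def block_diag_matmul(X, W):
    """Multiply spectrum X by block-diagonal complex weights W.

    W[b] is a (size x size) matrix for block b; this loop computes what
    einsum('bi,bio->bo', X.reshape(blocks, size), W) would.
    """
    size = len(X) // len(W)
    out = []
    for b, Wb in enumerate(W):
        chunk = X[b * size:(b + 1) * size]
        out.extend(sum(chunk[i] * Wb[i][o] for i in range(size)) for o in range(size))
    return out


def einfft_channel_mix(token, W):
    """Sketch of EinFFT-style channel mixing for one token's channel vector."""
    spectrum = dft(token)                   # 1. spectral transformation over channels
    gated = block_diag_matmul(spectrum, W)  # 2. spectral gating with complex weights
    return [v.real for v in idft(gated)]    # 3. inverse transformation (real part kept)


# Hypothetical setup: 8 channels split into 2 blocks of 4.
channels, blocks = 8, 2
size = channels // blocks
W = [[[complex(1.0 if i == o else 0.1) for o in range(size)] for i in range(size)]
     for _ in range(blocks)]
token = [float(c) for c in range(channels)]
print(einfft_channel_mix(token, W))
```

With identity blocks the round trip returns the input unchanged, which is a useful sanity check; the block-diagonal structure keeps the weight count at blocks × size² instead of channels².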

Pairing Mamba with an MLP for channel mixing already bridges the performance gap for small-scale networks, but adding EinFFT ensures stability even for large networks, making SiMBA a comprehensive solution for a wide range of applications.
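
This pairing can be pictured as a residual block that first mixes information across tokens and then across channels. The sketch below assumes a pre-norm layout (layer norm before each mixer, residual connection after); SiMBA's exact normalization and ordering may differ. `token_mixer` and `channel_mixer` are hypothetical callables standing in for a Mamba layer and for EinFFT or an MLP, which makes it easy to see how one channel mixer can be swapped for another.

```python
import math


def layer_norm(v, eps=1e-5):
    # Normalize one channel vector to zero mean and (almost) unit variance.
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def simba_style_block(tokens, token_mixer, channel_mixer):
    """One pre-norm residual block: token mixing, then channel mixing.

    tokens:        list of per-token channel vectors (sequence length x channels).
    token_mixer:   maps the normalized sequence to a new sequence (a Mamba layer in SiMBA).
    channel_mixer: maps one normalized channel vector to another (EinFFT or an MLP).
    """
    mixed = token_mixer([layer_norm(t) for t in tokens])
    h = [[a + b for a, b in zip(t, m)] for t, m in zip(tokens, mixed)]  # residual 1
    return [[a + b for a, b in zip(t, channel_mixer(layer_norm(t)))]   # residual 2
            for t in h]


# Hypothetical stand-ins: a causal running mean over tokens and a channel-reversing mixer.
def toy_token_mixer(seq):
    out, acc = [], [0.0] * len(seq[0])
    for k, t in enumerate(seq, start=1):
        acc = [a + b for a, b in zip(acc, t)]
        out.append([a / k for a in acc])
    return out


def toy_channel_mixer(v):
    return v[::-1]


tokens = [[1.0, 3.0, 2.0], [0.0, 1.0, 5.0]]
print(simba_style_block(tokens, toy_token_mixer, toy_channel_mixer))
```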

Performance evaluations show SiMBA surpassing state-of-the-art models across various metrics, including Mean Squared Error (MSE) and Mean Absolute Error (MAE) on time series forecasting. On the ImageNet 1K dataset, SiMBA reaches 84.0% top-1 accuracy, outperforming prominent convolutional networks and leading transformers.
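
For reference, the metrics named above are simple to compute. This small sketch uses plain Python lists; the function names are illustrative and not taken from any benchmark suite. For MSE and MAE lower is better, while for top-1 accuracy higher is better.

```python
def _check(a, b):
    if not a or len(a) != len(b):
        raise ValueError("inputs must be non-empty and of equal length")


def mse(y_true, y_pred):
    """Mean squared error: average of squared differences (lower is better)."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: average of absolute differences (lower is better)."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the true label."""
    _check(scores, labels)
    hits = sum(max(range(len(s)), key=s.__getitem__) == y for s, y in zip(scores, labels))
    return hits / len(labels)


print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # 4/3
print(mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # 2/3
print(top1_accuracy([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]], [1, 0, 0]))  # 2/3
```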

Microsoft’s SiMBA stands out as an architecture that performs strongly across modalities, from image classification to time series forecasting. These results position SiMBA as a frontrunner in vision and time series analysis.

Conclusion:

The introduction of Microsoft’s SiMBA architecture marks a significant advancement in vision and time series analysis. By resolving Mamba’s stability issues at scale while delivering strong results on both image classification and time series forecasting benchmarks, SiMBA positions itself as a leading option for complex data tasks. Its success underscores the importance of innovation and adaptability in meeting the evolving needs of the market. Businesses operating in these domains should take note of SiMBA’s capabilities and consider integrating them into their workflows to gain a competitive edge.