- MIT researchers introduce Cross-Layer Attention (CLA), a modification to the Transformer architecture.

- CLA reduces the memory footprint of the key-value (KV) cache, which is crucial for large language models (LLMs).

- It shares key and value heads across adjacent layers, so fewer layers need to compute and store their own keys and values.

- CLA works independently or in tandem with Multi-Query Attention (MQA) and Grouped-Query Attention (GQA).

- CLA experiments show favorable accuracy/memory tradeoffs compared to MQA or GQA alone.

- The CLA2 configuration proved most effective in the experiments, consistently reducing KV cache storage.

- CLA models benefit from higher learning rates, and the gains hold across different parameter scales.

Main AI News:

Managing the key-value (KV) cache efficiently is a challenge for large language models (LLMs) because its size scales with both sequence length and batch size. This overhead restricts batch sizes for long sequences and forces costly techniques such as offloading when memory is scarce. It is also desirable to store and retrieve KV caches over extended periods to avoid redundant computation, yet the size of the KV cache directly determines storage costs and whether retrieval is feasible. As LLMs tackle ever longer input sequences, reducing KV cache memory becomes pivotal to building efficient transformer-based models.

Existing techniques such as Multi-Query Attention (MQA) and Grouped-Query Attention (GQA) reduce KV cache size by sharing key/value heads among query heads. The original transformer architecture uses Multi-Head Attention (MHA), in which each query head attends to its own distinct key/value head. MQA has all query heads in a layer share a single key/value head, reducing storage overhead during decoding. GQA generalizes this by dividing the query heads into groups of configurable size, with each group sharing one key/value head. Because KV cache size scales with the number of distinct key/value heads, both MQA and GQA lower storage requirements. However, their memory savings are limited: once a layer is down to a single key/value head, as in MQA, no further reduction is possible within that layer.
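The scaling described above can be made concrete with a little arithmetic. The following is a minimal sketch, not taken from the paper: the model shape (20 layers, 16 query heads, head dimension 128), the sequence length, the batch size, and the 2-byte element size are all hypothetical values chosen for illustration.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_elem=2):
    """Bytes needed to cache keys and values for every layer and token."""
    # The leading 2 accounts for storing one key and one value tensor.
    return (2 * n_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Hypothetical model and workload, for illustration only.
N_LAYERS, N_QUERY_HEADS, HEAD_DIM = 20, 16, 128
SEQ_LEN, BATCH_SIZE = 4096, 8

kv_heads_by_scheme = {
    "MHA": N_QUERY_HEADS,  # one key/value head per query head
    "GQA-4": 4,            # 16 query heads split into 4 groups
    "MQA": 1,              # all query heads share one key/value head
}

cache_bytes = {
    name: kv_cache_bytes(N_LAYERS, n_kv, HEAD_DIM, SEQ_LEN, BATCH_SIZE)
    for name, n_kv in kv_heads_by_scheme.items()
}

for name, size in cache_bytes.items():
    print(f"{name}: {size / 2**20:.0f} MiB")
```

Under these assumptions the cache shrinks in direct proportion to the number of key/value heads, which is why MQA marks the floor for head sharing within a layer.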

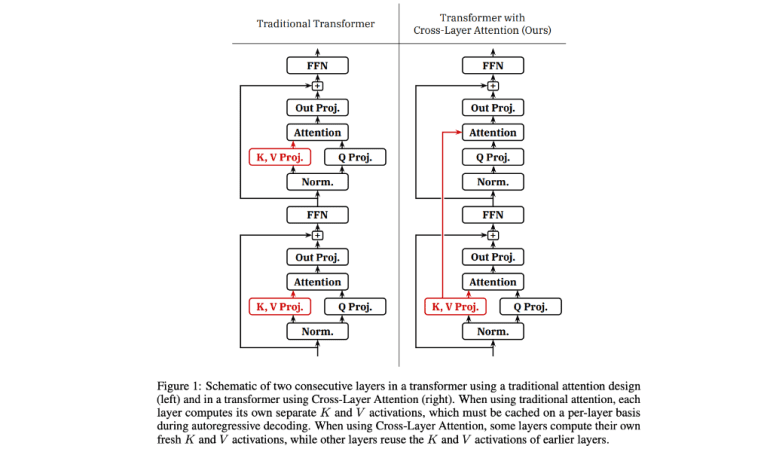

In a recent paper, MIT researchers propose Cross-Layer Attention (CLA), which extends the idea of key/value head sharing. CLA shares key and value heads not only within a layer but also across adjacent layers. By computing key/value projections for only a subset of layers and having the remaining layers reuse KV activations from earlier layers, CLA significantly reduces the KV cache memory footprint. The cache shrinks by a factor equal to the sharing factor, or slightly less when the sharing factor does not evenly divide the number of layers.
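The reduction factor can be worked out with a short calculation. This is a rough sketch under one assumption that the text does not spell out: when the sharing factor does not divide the layer count, the leftover layers form one smaller final group. The function names and the 20-layer depth are hypothetical.

```python
import math


def layers_with_kv(n_layers: int, sharing_factor: int) -> int:
    """Layers that still compute and cache their own keys and values."""
    # Assumption: leftover layers form one smaller final group.
    return math.ceil(n_layers / sharing_factor)


def kv_reduction(n_layers: int, sharing_factor: int) -> float:
    """Factor by which the KV cache shrinks versus no cross-layer sharing."""
    return n_layers / layers_with_kv(n_layers, sharing_factor)


N_LAYERS = 20  # hypothetical model depth
for factor in (2, 3, 4):
    print(f"sharing factor {factor}: "
          f"{layers_with_kv(N_LAYERS, factor)} KV layers, "
          f"{kv_reduction(N_LAYERS, factor):.2f}x smaller cache")
```

With 20 layers, sharing factors of 2 and 4 divide evenly and give exactly 2x and 4x, while a sharing factor of 3 leaves 7 layers with their own KV projections and so falls slightly short of 3x.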

CLA is orthogonal to MQA and GQA and can be combined with either technique. The sharing factor sets how many layers share the output of each KV projection, which yields a family of CLA configurations. For instance, CLA2 shares each KV projection between a pair of adjacent layers, while CLA3 shares it across a group of three layers.
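The layer grouping can be sketched as a toy decoding step. This is an illustrative outline rather than the authors' implementation: it assumes the first layer of each group computes the KV projection and later layers in the group reuse it, and `kv_source_layer`, `decode_step`, and `toy_project_kv` are hypothetical names. Real layers would each still apply their own query projection and attention computation, which the sketch omits.

```python
def kv_source_layer(layer: int, sharing_factor: int) -> int:
    """Index of the layer whose KV projection output `layer` attends over."""
    # Assumption: the first layer of each group computes the projection.
    return (layer // sharing_factor) * sharing_factor


def decode_step(n_layers, sharing_factor, project_kv):
    """Run one toy step; return the KV cache and the KV pair each layer used."""
    cache = {}  # source layer index -> cached (key, value)
    used = []
    for layer in range(n_layers):
        src = kv_source_layer(layer, sharing_factor)
        if src == layer:
            # Only producer layers run a KV projection and store the result.
            cache[src] = project_kv(layer)
        # Every layer, producer or not, reads from its group's cache entry.
        used.append(cache[src])
    return cache, used


def toy_project_kv(layer):
    """Stand-in for a real KV projection; returns labels instead of tensors."""
    return (f"K{layer}", f"V{layer}")


cache, used = decode_step(n_layers=6, sharing_factor=2,
                          project_kv=toy_project_kv)
print(sorted(cache))          # layers that own a cache entry
print([k for k, _ in used])   # which keys each of the 6 layers attends over
```

In this CLA2 example, six layers keep only three cache entries. Combining it with MQA or GQA would additionally shrink each entry by reducing the number of key/value heads per layer.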

Now, let’s delve into CLA’s benefits:

- Reduces memory footprint of intermediate KV activation tensors during training.

- Fully compatible with standard tensor parallelism techniques.

- Slightly reduces the model's parameter count and the FLOPs required during forward and backward passes.

- Enables larger batch sizes and longer KV cache persistence, which can improve inference latency. However, unlike MQA and GQA, CLA does not directly affect memory bandwidth or core attention computation latency during decoding.

To evaluate CLA, the researchers trained transformer-based models from scratch at the 1 billion and 3 billion parameter scales. The experiments were designed to examine the accuracy/memory tradeoffs CLA makes possible, how CLA compares with GQA or MQA, which CLA configurations work best, and whether the results hold across scales.

Key findings include:

- CLA enables favorable accuracy/memory tradeoffs compared to plain GQA or MQA.

- CLA2 was most effective in the experimental regime.

- CLA combined with MQA consistently decreased KV cache storage.

- CLA models benefited from higher learning rates.

- Benefits were consistent across 1B- and 3B-parameter scales. MQA-CLA2 achieved the lowest validation perplexity with a 2× KV cache reduction and minimal perplexity degradation.

Researchers anticipate CLA’s greatest impact on LLMs handling extremely long sequences, leaving end-to-end efficiency evaluations for future work.

Conclusion:

MIT's Cross-Layer Attention (CLA) proposal is a notable step in optimizing Transformer-based language models. By shrinking the key-value cache, CLA lets LLMs handle longer sequences and larger batches within the same memory budget. This gives businesses an opportunity to deploy more efficient language models, potentially boosting productivity and innovation in sectors that rely on natural language processing technologies.