TL;DR:

- Nomic AI introduces nomic-embed-text-v1, an open-source, long-context text embedding model.

- The model handles sequences of up to 8,192 tokens, far beyond the 512-token limit of many earlier open models.

- It combines open weights, open data, and a 137M-parameter design under an Apache 2.0 license.

- Its development followed carefully staged data preparation and model training.

- Innovations in architecture include rotary positional embeddings, SwiGLU activation, and Flash Attention integration.

- Evaluations on benchmarks such as GLUE and MTEB show strong performance.

- The model’s transparency and openness set a new standard in the AI community.

Main AI News:

In today’s dynamic business landscape, natural language processing (NLP) faces growing demand for models that can handle long textual contexts. Recent work by Lewis et al. (2021), Izacard et al. (2022), and Ram et al. (2023) has markedly advanced language models, notably through the evolution of text embeddings. Text embedding models map sentences or documents to low-dimensional vectors that capture their semantic content, and these vectors form the backbone of applications such as retrieval-augmented generation for large language models (LLMs) and semantic search, as well as clustering, classification, and information retrieval.
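As a minimal illustration of how such vectors support semantic search, the sketch below ranks documents by cosine similarity to a query. The three-dimensional vectors are hand-made stand-ins rather than output from any real model, and `cosine_similarity` and `rank_documents` are hypothetical helpers; a production system would use a trained embedding model and, typically, a vector index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: list[list[float]]) -> list[int]:
    """Return document indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


# Toy 3-dimensional "embeddings"; real models emit hundreds of dimensions.
docs = [
    [0.9, 0.1, 0.0],  # e.g. a document about finance
    [0.1, 0.8, 0.3],  # e.g. a document about sports
    [0.0, 0.2, 0.9],  # e.g. a document about cooking
]
query = [0.2, 0.7, 0.4]
print(rank_documents(query, docs))  # → [1, 2, 0]
```

The same ranking step underlies retrieval-augmented generation: the top-ranked documents are passed to the LLM as context.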

Nonetheless, a glaring constraint persists: the limited context length of existing models. Notable open-source models on the MTEB benchmark, including E5 by Wang et al. (2022), GTE by Li et al. (2023), and BGE by Xiao et al. (2023), are confined to a 512-token context length, which limits their effectiveness when a task requires understanding a broader document context. Meanwhile, models with context lengths beyond 2,048 tokens, such as Voyage-lite-01-instruct by Voyage (2023) and text-embedding-ada-002 by Neelakantan et al. (2022), remain proprietary.
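To make the limitation concrete, here is a minimal sketch of what a fixed context window forces on a long document: the text must be split into windows that are embedded separately, so no single vector sees the whole document. The `chunk_tokens` helper is hypothetical, and the integer list stands in for real tokenizer output.

```python
def chunk_tokens(tokens, max_len, stride=None):
    """Split a token sequence into windows of at most max_len tokens.

    stride defaults to max_len (non-overlapping windows); a smaller stride
    yields overlapping windows that keep some context across boundaries.
    """
    stride = stride or max_len
    chunks = []
    for start in range(0, len(tokens), stride):
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


doc = list(range(6000))  # integers standing in for a 6,000-token document
print(len(chunk_tokens(doc, 512)))   # → 12 separate windows for a 512-token model
print(len(chunk_tokens(doc, 8192)))  # → 1 window for an 8,192-token model
```

Overlapping windows (a `stride` smaller than `max_len`) soften the boundary problem but multiply the number of embeddings to compute and store.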

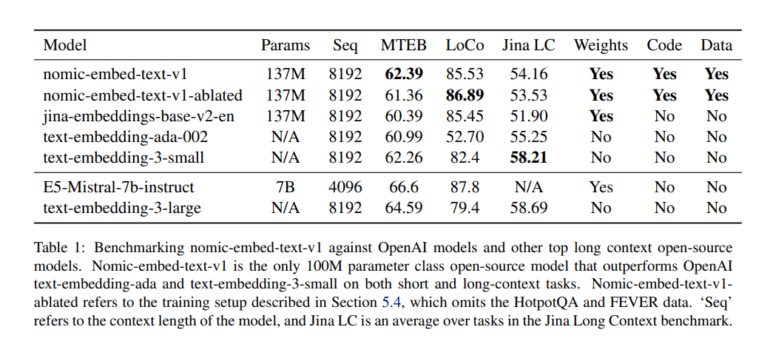

In this context, the release of nomic-embed-text-v1 marks a notable breakthrough. The model is open source, supports sequences of up to 8,192 tokens, and outperforms existing models, including OpenAI’s text-embedding-ada-002, on both short- and long-context evaluations. What distinguishes it is its holistic approach: it combines open weights, open data, and a 137M-parameter design under an Apache 2.0 license, ensuring accessibility and transparency.

Reaching this milestone required carefully staged data preparation and model training. First, a masked language modeling (MLM) pretraining phase used BooksCorpus and a 2023 Wikipedia dump, tokenized with the bert-base-uncased tokenizer and split into chunks suitable for long-context training. Next, unsupervised contrastive pretraining on roughly 470 million pairs drawn from diverse datasets refined the model’s representations, with consistency filtering, which uses embedding similarity to discard pairs whose query and document do not match well, removing noisy pairs.
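The article does not spell out the contrastive objective or the filtering rule, so the following is only a sketch under common assumptions from the contrastive-embedding literature: a pair is kept if its own document ranks among the top-k most similar documents to its query, and training minimizes an in-batch InfoNCE-style loss. The helper names, `k=2`, `temperature=0.05`, and the toy vectors are illustrative assumptions, not Nomic’s settings; in practice the vectors would come from an existing embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def consistency_filter(pairs, k=2):
    """Keep (query_vec, doc_vec) pairs whose own document ranks in the query's top-k.

    All documents in `pairs` act as the candidate pool for every query.
    """
    docs = [d for _, d in pairs]
    kept = []
    for i, (q, d) in enumerate(pairs):
        scores = [cosine(q, other) for other in docs]
        top_k = sorted(range(len(docs)), key=lambda j: scores[j], reverse=True)[:k]
        if i in top_k:
            kept.append((q, d))
    return kept


def info_nce_loss(query_vecs, doc_vecs, temperature=0.05):
    """Mean in-batch contrastive loss: query i should score doc i above all other docs."""
    total = 0.0
    for i, q in enumerate(query_vecs):
        logits = [cosine(q, d) / temperature for d in doc_vecs]
        m = max(logits)  # subtract the max before exponentiating for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with target index i
    return total / len(query_vecs)


# Toy (query, document) embedding pairs; the last pair is deliberately mismatched.
pairs = [
    ([1.0, 0.0, 0.0], [0.9, 0.1, 0.0]),
    ([0.0, 1.0, 0.0], [0.1, 0.9, 0.0]),
    ([0.0, 0.0, 1.0], [0.0, 0.1, 0.9]),
    ([1.0, 0.0, 0.0], [0.0, 0.2, 0.8]),
]
clean = consistency_filter(pairs, k=2)
print(len(clean))  # → 3 (the mismatched pair is dropped)
queries = [q for q, _ in clean]
docs = [d for _, d in clean]
print(info_nce_loss(queries, docs))
```

Pairing each query with the wrong document (for example, reversing `docs`) raises the loss sharply; that gap is the signal the contrastive objective trains against.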

The architecture of nomic-embed-text-v1 adapts BERT to accommodate the extended sequence length. Changes such as rotary positional embeddings, SwiGLU activation, and the integration of Flash Attention amount to a strategic overhaul aimed at improving both performance and efficiency. The training regimen, which used a 30% masking rate during MLM pretraining along with other tuned settings, reflects the same rigorous pursuit of strong results.
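The article names these components without defining them. As a rough sketch of their textbook formulations (not Nomic’s implementation), the pure-Python code below applies rotary positional embeddings (RoPE) with the commonly used base of 10000, computes the SwiGLU gated activation, and picks 30% of token positions for masking. All function names are hypothetical, and `base=10000.0` and the random seed are assumptions. Real MLM recipes also decide what each selected token is replaced with, which is omitted here, and Flash Attention, an optimized attention kernel, is not shown.

```python
import math
import random


def rope(vec, position, base=10000.0):
    """Rotary positional embedding: rotate each pair (x[i], x[i+1]), i even,
    by angle position * base**(-i / d), where d = len(vec) must be even."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def silu(x):
    """SiLU / Swish activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def swiglu(x, w_gate, w_up):
    """SwiGLU feed-forward core: SiLU(x @ w_gate) * (x @ w_up), elementwise.

    Weights are row-major lists of shape (d_in, d_hidden); the output
    projection back to d_in that follows in a transformer block is omitted.
    """
    gate = [silu(sum(xi * w for xi, w in zip(x, col))) for col in zip(*w_gate)]
    up = [sum(xi * w for xi, w in zip(x, col)) for col in zip(*w_up)]
    return [g * u for g, u in zip(gate, up)]


def choose_mask_positions(num_tokens, mask_rate=0.30, seed=0):
    """Select round(mask_rate * num_tokens) distinct positions to mask for MLM."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(num_tokens), round(mask_rate * num_tokens)))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q, k = [0.3, -1.2, 0.5, 0.8], [1.0, 0.4, -0.7, 0.2]
# The score depends only on the offset between positions (2 in both cases).
print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)))
print(len(choose_mask_positions(2048)))  # → 614 positions (30% of 2,048)
```

Because RoPE encodes position as a rotation, the dot product between a rotated query and key depends only on how far apart the two tokens are, so both printed scores match up to floating-point error. This relative-position behaviour is often cited as a reason to prefer RoPE over learned absolute position embeddings when extending context length.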

On benchmarks such as GLUE, MTEB, and dedicated long-context evaluations, nomic-embed-text-v1 performed strongly. Its results on the JinaAI Long Context Benchmark and the LoCo Benchmark are particularly noteworthy, underscoring its strength on long texts, a domain where many earlier models struggled.

However, the significance of nomic-embed-text-v1 goes beyond performance metrics. Its development process, which prioritizes end-to-end auditability and reproducibility, sets a new bar for transparency and openness in the AI community. By releasing the model weights, the codebase, and a curated training dataset, the team behind nomic-embed-text-v1 invites continued innovation and scrutiny.

Conclusion:

The introduction of nomic-embed-text-v1 by Nomic AI marks a significant advancement in the NLP landscape. Its strong performance, coupled with its open-source nature and comprehensive design, is poised to drive innovation and transparency in the market. Businesses should take note, as it opens new opportunities to apply advanced NLP capabilities across a range of applications.