- NVIDIA introduces ScaleFold, an optimized training method for AlphaFold, aimed at enhancing efficiency and scalability in protein structure prediction.

- ScaleFold addresses key challenges in AlphaFold’s training process, including memory consumption, computation time, and communication inefficiencies.

- NVIDIA researchers propose several optimizations, such as non-blocking data pipelines, CUDA Graphs, and specialized Triton kernels, to improve training performance.

- Comprehensive evaluations demonstrate ScaleFold’s superior training performance compared to existing solutions, achieving up to 6.2X speedup on NVIDIA H100 GPUs.

- Benchmarking on the Eos platform confirms ScaleFold’s efficiency, reducing training time to 8 minutes on 2,080 NVIDIA H100 GPUs.

- With ScaleFold, AlphaFold pretraining can be completed in about 10 hours.

Main AI News:

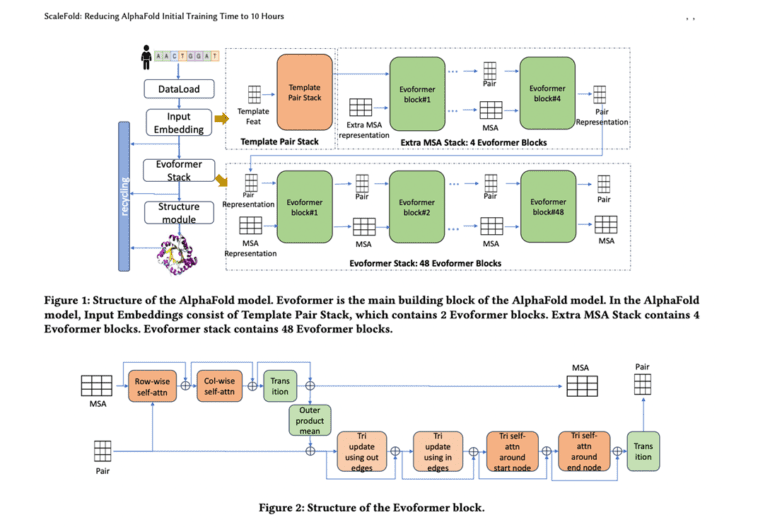

In high-performance computing (HPC), the integration of deep learning has proven immensely impactful. Deep-learning surrogate models have steadily improved and, for some problems, now rival or surpass traditional physics-based simulations in both accuracy and practicality. This shift is particularly evident in protein folding, where models such as RoseTTAFold, AlphaFold2, OpenFold, and FastFold have democratized protein structure prediction and accelerated drug discovery. Notably, AlphaFold, developed by DeepMind, has achieved accuracy comparable to experimental methods, effectively addressing a long-standing challenge in biology. Despite this success, AlphaFold’s training process relies heavily on a sequence attention mechanism, which consumes significant time and resources and slows the pace of research.

In response to these challenges, several efforts have sought to improve training efficiency. OpenFold, DeepSpeed4Science, and FastFold have focused on scalability, a critical factor in accelerating AlphaFold training. The inclusion of AlphaFold training in the MLPerf HPC v3.0 benchmark underscores its significance within the HPC community. Yet despite its relatively modest parameter count, AlphaFold’s unique attention mechanism demands substantial memory, which poses a challenge to scalability. In addition, the training process is dominated by memory-bound kernels and by critical operations such as Multi-Head Attention and Layer Normalization, which significantly affect computation time and limit the effectiveness of data parallelism.
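To make "memory-bound" concrete, the following is a rough sketch of the standard arithmetic-intensity test: a kernel is memory-bound when its FLOPs per byte moved fall below the ratio of peak compute to peak memory bandwidth. The cost models (about 8 FLOPs per element for Layer Normalization, one read and one write per element) and the hardware figures used in the usage note are illustrative assumptions, not measurements from the study or published H100 specifications.

```python
def arithmetic_intensity(flops, bytes_moved):
    """FLOPs performed per byte of memory traffic."""
    return flops / bytes_moved


def is_memory_bound(flops, bytes_moved, peak_flops, peak_bandwidth):
    """True when the kernel sits below the roofline ridge point.

    peak_flops is in FLOP/s and peak_bandwidth in bytes/s; both are
    caller-supplied assumptions about the hardware.
    """
    ridge = peak_flops / peak_bandwidth
    return arithmetic_intensity(flops, bytes_moved) < ridge


def layer_norm_cost(rows, hidden, bytes_per_elem=2):
    """Rough cost model (assumption): ~8 FLOPs per element, input read once,
    output written once, 2-byte elements by default."""
    elems = rows * hidden
    return 8 * elems, 2 * elems * bytes_per_elem


def square_matmul_cost(n, bytes_per_elem=2):
    """Rough cost model (assumption) for an n x n by n x n matrix multiply:
    2*n^3 FLOPs, two inputs read and one output written."""
    return 2 * n**3, 3 * n * n * bytes_per_elem
```

With placeholder values of `peak_flops=1e15` and `peak_bandwidth=3e12`, `is_memory_bound(*layer_norm_cost(1024, 256), 1e15, 3e12)` is true under this model, while `is_memory_bound(*square_matmul_cost(4096), 1e15, 3e12)` is false. This is why operations like Layer Normalization gain more from fusion and reduced memory traffic than from additional raw compute.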

A recent study by NVIDIA researchers offers a comprehensive analysis of AlphaFold’s training process and identifies the key obstacles to scalability, including inefficient distributed communication and underutilization of compute resources. To address these issues, the researchers propose a series of optimizations: a non-blocking data pipeline to mitigate slow-worker issues, CUDA Graphs to reduce overhead, and specialized Triton kernels for critical computation patterns. They also fuse fragmented computations and tune kernel configurations to further improve performance.
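The slow-worker problem and the non-blocking remedy can be illustrated with a toy example. The sketch below is not ScaleFold’s implementation. It assumes that "non-blocking" means consuming whichever batch is ready first rather than waiting on batches in a fixed order, and `load_batch` with its sleep-based delays is a hypothetical stand-in for real data preprocessing.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def load_batch(index, delay):
    """Hypothetical loader: sleeps to mimic preprocessing cost, then returns a batch."""
    time.sleep(delay)
    return {"index": index}


def fixed_order(delays):
    """Blocking pipeline: batches are consumed strictly in submission order,
    so one slow worker stalls every batch queued behind it."""
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        futures = [pool.submit(load_batch, i, d) for i, d in enumerate(delays)]
        return [f.result()["index"] for f in futures]


def ready_first_order(delays):
    """Non-blocking pipeline: batches are consumed in the order they finish,
    so a slow worker only delays its own batch."""
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        futures = [pool.submit(load_batch, i, d) for i, d in enumerate(delays)]
        return [f.result()["index"] for f in as_completed(futures)]
```

For example, with `delays = [0.6, 0.05, 0.2, 0.35]`, batch 0 is the slow one. `fixed_order(delays)` cannot hand over any batch until batch 0 is ready, whereas `ready_first_order(delays)` hands over batches 1, 2, and 3 first and batch 0 last. The trade-off is that the consumption order is no longer fixed in advance.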

The optimized training method, dubbed ScaleFold, represents a significant advance in AlphaFold training. By systematically addressing barriers to scalability, ScaleFold aims to deliver better training performance than existing solutions such as OpenFold and FastFold. Comprehensive evaluations show that ScaleFold trains up to 6.2X faster on NVIDIA H100 GPUs than reference models. Benchmarking on the Eos platform confirms this efficiency: training time drops to 8 minutes on 2,080 NVIDIA H100 GPUs, a 6X improvement over reference models. With ScaleFold, AlphaFold pretraining can now be completed in about 10 hours.

Conclusion:

NVIDIA’s ScaleFold represents a significant advance in protein structure prediction within high-performance computing. By addressing key challenges in training efficiency and scalability, ScaleFold substantially accelerates AlphaFold training, which could speed up drug discovery and further advance computational biology research. The work underscores NVIDIA’s commitment to AI-driven solutions in scientific computing.