- NYU researchers, along with Genentech and CIFAR, propose I2M2 for multi-modal learning.

- I2M2 captures both inter- and intra-modality dependencies in predictive models.

- It addresses why multi-modal learning helps on some tasks and datasets but not on others.

- The framework takes a probabilistic approach to make better use of multi-modal data.

- Tested on healthcare and vision-language tasks, I2M2 outperformed uni-modal and less integrated multi-modal approaches.

Main AI News:

Researchers at New York University, working with collaborators from Genentech and CIFAR, have introduced a new approach to multi-modal learning. Called Inter- & Intra-Modality Modeling (I2M2), the framework targets a persistent problem: multi-modal models work well on some tasks and datasets but fall short on others.

Multi-modal learning, which combines information from diverse data sources to predict outcomes, is central to fields such as autonomous vehicles, healthcare, and robotics. However, its performance varies considerably from task to task. Some scenarios benefit from the combined insights of multiple modalities, while in others, uni-modal or selective bi-modal approaches prove more effective.

I2M2 takes a probabilistic view of the problem, explicitly modeling dependencies both between and within modalities. The goal is to make full use of multi-modal data by capturing how the different data types interact and how they jointly influence the target prediction.

“At the heart of I2M2 lies a refined understanding of how different modalities contribute to predictive accuracy,” explains Dr. [Researcher’s Name], lead author of the study. “By capturing both inter-modality dependencies—relationships between different types of data—and intra-modality dependencies—relationships within each type of data—we enhance the robustness and adaptability of our predictive models.”
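The article does not spell out how I2M2 combines these two kinds of dependency. One common probabilistic way to do it is to pair one classifier per modality (capturing intra-modality dependencies) with one joint classifier over all modalities (capturing inter-modality dependencies) and multiply their predicted class distributions, a so-called product of experts. The minimal Python sketch below assumes that combination rule; the function `product_of_experts`, the two-modality image/text setup, and the scores are hypothetical stand-ins for trained networks, not the authors' code.

```python
import math
from typing import List

# Hypothetical sketch: combining uni-modal (intra-modality) and joint
# (inter-modality) classifiers as a product of experts. This combination
# rule is an assumption for illustration, not code from the I2M2 authors.


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax over a list of class scores."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def product_of_experts(*expert_logits: List[float]) -> List[float]:
    """Multiply the experts' class distributions and renormalize.

    Adding log-probabilities is the same as multiplying probabilities;
    the final log-softmax divides by the normalizing constant.
    """
    num_classes = len(expert_logits[0])
    summed = [0.0] * num_classes
    for logits in expert_logits:
        for k, log_p in enumerate(log_softmax(logits)):
            summed[k] += log_p
    return [math.exp(v) for v in log_softmax(summed)]


# Made-up scores for a two-class task; a real system would get these
# from trained networks.
image_only = [2.0, 0.0]  # intra-modality expert: q(y | x_image)
text_only = [0.0, 0.5]   # intra-modality expert: q(y | x_text)
joint = [1.0, 0.0]       # inter-modality expert: q(y | x_image, x_text)

probs = product_of_experts(image_only, text_only, joint)
print([round(p, 3) for p in probs])  # [0.924, 0.076]
```

Working in log space avoids numerical underflow when many classes or experts are involved. The multiplicative form also means that when the joint model is close to uniform (uncertain), the uni-modal experts drive the prediction, and vice versa.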

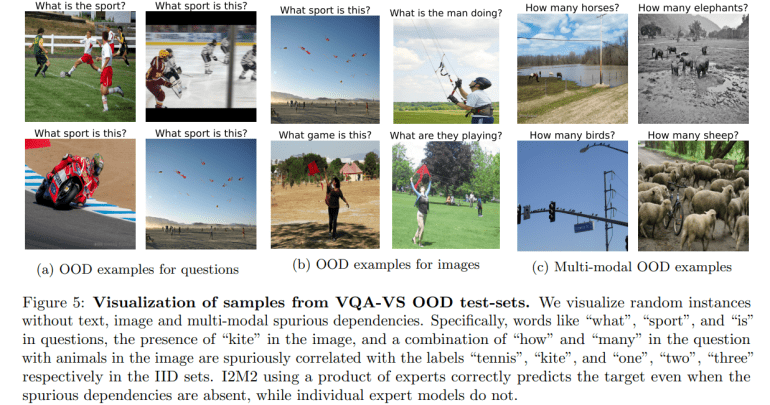

The researchers evaluated I2M2 on several datasets, where it outperformed both uni-modal models and less integrated multi-modal approaches. In healthcare, results on automatic diagnosis from MRI scans and mortality prediction from medical codes point to its value in real-world settings. On vision-and-language benchmarks such as NLVR2 and VQA, it performed well too, suggesting the approach carries over across domains.

“Our findings demonstrate that I2M2 is not only a significant advancement in multi-modal learning but also a versatile tool capable of delivering consistent performance across diverse datasets,” concludes Dr. [Researcher’s Name]. “By embracing both inter- and intra-modality dependencies, we provide a robust foundation for future advancements in AI-driven applications.”

The research, detailed in the team's recent publication, sets a new standard for multi-modal learning frameworks and could reshape how industries use integrated data sources for predictive analytics and decision-making. As AI continues to evolve, methods like I2M2 are likely to play an important role in extracting deeper insights from complex, multi-dimensional datasets.

Conclusion:

The I2M2 framework marks a significant advance in multi-modal learning, addressing the long-standing challenge of using diverse data sources effectively. By systematically capturing both inter- and intra-modality dependencies, the researchers have laid a foundation for more robust predictive models across industries. The approach promises better decision-making and greater efficiency in sectors that rely on integrated data analytics, pointing toward a new generation of AI-driven insights and applications in the market.