TL;DR:

- OpenBA is a groundbreaking 15B bilingual model developed by Soochow University.

- It addresses the gap in the Encoder-Decoder framework for NLP tasks.

- OpenBA offers model checkpoints, data collection insights, and a bilingual Flan collection.

- The model employs a unique shallow-encoder deep decoder structure.

- Three-stage training enhances generation capabilities and model effectiveness.

- Extensive testing demonstrates OpenBA’s exceptional performance.

- OpenBA outperforms other models while emitting significantly lower carbon emissions.

- All implementation details are publicly available, encouraging collaboration.

Main AI News:

The era of language models has ushered in a new wave of breakthroughs, with these colossal linguistic giants showcasing unparalleled capabilities while eclipsing their predecessors across various domains when immersed in vast volumes of textual data. However, even as these massive models continue to evolve and flex their linguistic muscles, the quest for their real-world perfection remains an ongoing challenge. In response, the open-source community has been hard at work, striving to provide robust and accessible Large Language Models (LLMs) encompassing a wide spectrum of data sources, architectural nuances, language modeling objectives, training methodologies, scale variants, and linguistic proficiencies.

Enter the illustrious roster of models like BLOOM, LLaMA, FlanT5, and AlexaTM, representing a cornucopia of linguistic prowess in the realm of open-source LLMs. Among them, Chinese-LLaMA, MOSS, Huatuo, Luotuo, and Phoenix stand tall as exemplars of large language models generously shared with the world by dedicated researchers and developers. These models, born from the crucible of either meticulous pre-training or rigorous fine-tuning of existing multilingual models, offer powerful tools to fuel innovation in NLP. While they provide robust general language models and decoder-only variations, the Encoder-Decoder framework, a versatile powerhouse capable of a multitude of tasks—from language comprehension and common sense reasoning to question-answering, information retrieval, and multi-turn chit-chat conversations—remains an underexplored frontier.

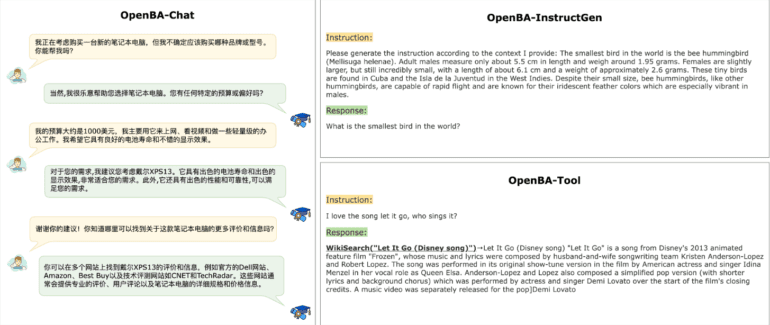

In a remarkable stride towards bridging this gap, a team of researchers from Soochow University presents OpenBA—an open-sourced 15B bilingual asymmetric seq2seq model. OpenBA emerges from the crucible of pre-training from scratch, offering not only model checkpoints but also the invaluable data collection and processing insights required to craft the pre-training data and a bilingual Flan collection sourced from freely available data fountains like Common Crawl, the Pile corpus, and C-Book. Their contribution extends to shedding light on the motivations and empirical insights that underpin the model’s architecture design, as well as vital information about other enhanced models in their arsenal.

A distinctive feature of OpenBA is its unique asymmetric model structure, characterized by a shallow-encoder deep decoder paradigm, setting it apart from the vanilla Flan-T5 with its balanced encoder-decoder configuration or the asymmetric deep-encoder shallow-decoder paradigm seen in AlexaTM. The model’s journey unfolds through three stages: UL2 pre-training, length-adaptation, and Flan training, each carefully orchestrated to enhance generation capabilities. Moreover, OpenBA adopts a slew of enhancement tactics, both in terms of architecture and training methodologies, aiming to bolster model capacity, stability, and overall effectiveness.

To validate the prowess of OpenBA, extensive testing was conducted across various benchmarks and tasks, encompassing domains such as understanding, reasoning, and generation. These tests spanned zero-shot, few-shot, held-in, and held-out scenarios, revealing OpenBA’s exceptional performance, outshining numerous typical models. For instance, OpenBA-15B emerges as a standout contender, surpassing benchmarks set by LLaMA-70B in BELEBELE, BLOOM-176B in MMLU, ChatGLM-6B in CMMLU, and C-Eval, all while having been trained on a relatively modest 380B tokens. Impressively, OpenBA-15B achieves this feat while significantly reducing carbon emissions, emitting just about 6.5 tCO2eq overall, compared to the 14 tCO2eq generated during the training of LLaMA-7B.

Conclusion:

OpenBA’s introduction marks a significant milestone in the field of Chinese-centric NLP. Its unique model structure and impressive performance metrics position it as a valuable asset for researchers and developers. Additionally, its commitment to transparency and reduced carbon emissions aligns with the growing demand for eco-conscious solutions in the market, making OpenBA a compelling choice for those seeking advanced language models.