- OpenRLHF introduces a groundbreaking framework for Reinforcement Learning from Human Feedback (RLHF) in AI.

- Challenges with existing RLHF approaches include memory fragmentation and communication bottlenecks.

- OpenRLHF leverages Ray, a distributed task scheduler, and vLLM, a distributed inference engine, to optimize training.

- It achieves faster training convergence and reduces overall training time compared to established frameworks.

- OpenRLHF’s advancements pave the way for more efficient use of large language models (LLMs) across AI applications.

Main AI News:

In artificial intelligence (AI), a major shift is underway in how large language models (LLMs) are trained, particularly models with more than 70 billion parameters. These LLMs play a pivotal role across many domains, powering tasks such as creative writing, translation, and content creation. Realizing their full potential, however, requires aligning them with human preferences through Reinforcement Learning from Human Feedback (RLHF), and existing frameworks struggle to handle the substantial memory that RLHF demands at this scale.
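To put that memory requirement in numbers, the following Python sketch does back-of-the-envelope arithmetic for a 70-billion-parameter model. It assumes 2 bytes per parameter for a frozen bf16 copy, 16 bytes per parameter for a model trained with mixed-precision Adam (the standard accounting used, for example, in the ZeRO paper), 80 GB GPUs, and a PPO-style RLHF setup with four models of the same size: a trained actor and critic plus a frozen reward model and reference model. It ignores activations, KV cache, and framework overhead, so real requirements are higher. The helper names are illustrative.

```python
import math

# Assumed per-parameter costs (weights and optimizer state only):
BF16_BYTES_PER_PARAM = 2   # frozen inference copy stored in bf16
ADAM_BYTES_PER_PARAM = 16  # fp16 weights + grads (2 + 2), fp32 master weights + Adam m + v (4 + 4 + 4)


def gigabytes(num_params: float, bytes_per_param: int) -> float:
    """Memory in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(total_gb: float, gpu_gb: float = 80.0) -> int:
    """Fewest GPUs whose combined memory could hold total_gb, ignoring all overheads."""
    return math.ceil(total_gb / gpu_gb)


if __name__ == "__main__":
    params = 70e9
    frozen = gigabytes(params, BF16_BYTES_PER_PARAM)     # e.g. reward or reference model
    trainable = gigabytes(params, ADAM_BYTES_PER_PARAM)  # e.g. actor or critic
    # Assumption: all four RLHF models are 70B; actor and critic are trained.
    rlhf_total = 2 * trainable + 2 * frozen
    print(f"bf16 weights: {frozen:.0f} GB -> at least {min_gpus(frozen)} x 80 GB GPUs")
    print(f"Adam state:   {trainable:.0f} GB -> at least {min_gpus(trainable)} GPUs")
    print(f"4-model RLHF: {rlhf_total:.0f} GB -> at least {min_gpus(rlhf_total)} GPUs")
```

Even under these optimistic assumptions, a single 70B model does not fit on one GPU, which is why RLHF frameworks must spread the work across many devices.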

Conventional RLHF frameworks partition the LLM across multiple GPUs for training, but this approach has limits. Excessive partitioning fragments memory on individual GPUs, which shrinks the effective batch size and slows training. On top of that, the fragmented components must constantly communicate with one another, creating bottlenecks, much like a team that spends more time exchanging messages than getting work done.
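Memory fragmentation can be illustrated with a toy model. In the hypothetical Python sketch below, a batch's activations must fit in a single contiguous free block, so the largest free block, not the total free memory, caps the batch size. Real GPU allocators (such as PyTorch's caching allocator) are more sophisticated, and the 2 GB-per-sample figure is an assumption chosen for round numbers.

```python
from typing import Sequence


def max_batch_size(free_blocks_gb: Sequence[float], per_sample_gb: float) -> int:
    """Largest batch whose activation buffer fits in one free block.

    Toy assumption: the batch needs one contiguous allocation of
    batch_size * per_sample_gb, so only the largest free block matters.
    """
    if per_sample_gb <= 0:
        raise ValueError("per_sample_gb must be positive")
    largest = max(free_blocks_gb, default=0.0)
    return int(largest // per_sample_gb)


if __name__ == "__main__":
    per_sample = 2.0               # hypothetical GB of activations per sample
    contiguous = [20.0]            # 20 GB free in one piece
    fragmented = [6.0, 5.0, 9.0]   # the same 20 GB, split into three holes
    print("contiguous:", max_batch_size(contiguous, per_sample))  # 10
    print("fragmented:", max_batch_size(fragmented, per_sample))  # 4
```

Both layouts have 20 GB free, yet the fragmented one admits less than half the batch.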

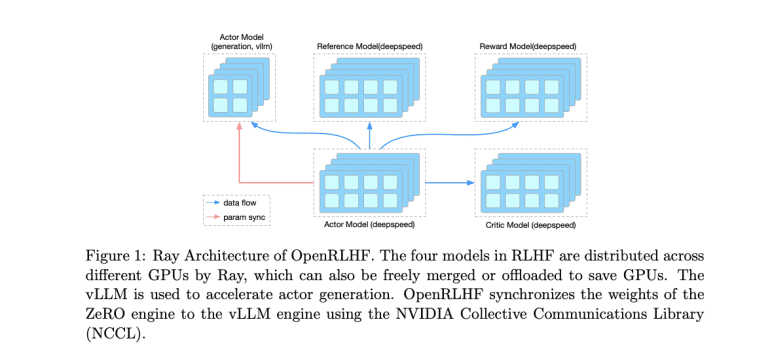

In response to these challenges, researchers have introduced a new RLHF framework: OpenRLHF. It builds on two key technologies: Ray, a distributed task scheduler, and vLLM, a distributed inference engine. Ray acts like a project manager, placing the models involved in RLHF across GPUs without excessive partitioning, which improves memory utilization and speeds up training by allowing larger batch sizes per GPU. Meanwhile, vLLM accelerates the generation stage, in which the model produces responses to be scored, by running inference in parallel across multiple GPUs, much like a network of high-performance computers working together on a complex problem.
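The article does not describe OpenRLHF's scheduling code, so the following is only a hypothetical, standard-library Python sketch of the underlying idea: giving each model in the RLHF pipeline its own disjoint set of GPUs rather than packing pieces of every model onto every device. The role names (including a pool of vLLM generation engines) and GPU counts are illustrative assumptions, not OpenRLHF defaults. In Ray itself, such requests are expressed through resource annotations such as `@ray.remote(num_gpus=1)`; this sketch does not call Ray.

```python
from typing import Dict, List


def place_models(gpu_counts: Dict[str, int], total_gpus: int) -> Dict[str, List[int]]:
    """Assign each role a contiguous, non-overlapping range of GPU ids.

    Hypothetical helper: roles are placed in insertion order and no GPU is
    shared between roles, so no device holds shards of several models.
    """
    if any(count < 0 for count in gpu_counts.values()):
        raise ValueError("GPU counts must be non-negative")
    needed = sum(gpu_counts.values())
    if needed > total_gpus:
        raise ValueError(f"need {needed} GPUs, but only {total_gpus} are available")
    placement: Dict[str, List[int]] = {}
    next_gpu = 0
    for role, count in gpu_counts.items():
        placement[role] = list(range(next_gpu, next_gpu + count))
        next_gpu += count
    return placement


if __name__ == "__main__":
    # Illustrative split of 16 GPUs; not OpenRLHF's actual configuration.
    plan = place_models(
        {"vllm_engines": 4, "actor": 4, "critic": 4, "reward": 2, "reference": 2},
        total_gpus=16,
    )
    for role, gpus in plan.items():
        print(f"{role:>12}: GPUs {gpus}")
```

A real scheduler would also need to respect node boundaries and copy updated actor weights to the generation engines; the sketch keeps only the disjoint-assignment step.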

A comparative evaluation against established frameworks such as DSChat (DeepSpeed-Chat), using a 7B-parameter LLaMA2 model, showed significant improvements. OpenRLHF converged faster during training, much like a student who grasps a concept quickly thanks to an efficient learning approach. vLLM’s fast generation substantially reduced overall training time, much as a streamlined assembly line raises a plant’s output. Ray’s scheduling also minimized memory fragmentation, allowing larger batch sizes and further speeding up training.

Conclusion:

The introduction of OpenRLHF marks a significant step forward for AI training methodologies. By addressing key challenges of existing RLHF frameworks, such as memory fragmentation and communication bottlenecks, OpenRLHF speeds up training and makes more efficient use of large language models (LLMs). This points to a promising future for AI development, in which organizations can apply advanced AI more effectively across domains ranging from creative writing to content creation. Businesses that adopt OpenRLHF stand to gain a competitive edge by making fuller use of cutting-edge AI capabilities.