- Large Language Models (LLMs) show promise in approaching Artificial General Intelligence (AGI) but suffer from errors like biases and factual inaccuracies.

- Current methods for updating LLMs face challenges in cost-effectiveness and sustainability due to the constant evolution of knowledge.

- The WISE approach, developed by researchers from Zhejiang University and Alibaba Group, introduces a dual parametric memory scheme comprising a main memory for pretrained knowledge and a side memory for edits, with a routing mechanism for efficient memory access.

- WISE employs knowledge sharding and merging to support continuous editing while mitigating conflicts between edits and enhancing stability.

- Comparative evaluations demonstrate WISE’s superior performance over existing methods in both QA and Hallucination settings, showcasing improvements in stability and management of sequential edits.

- WISE strikes a balance between reliability, generalization, and locality, outperforming competitors across various tasks and exhibiting excellent generalization performance in out-of-distribution evaluations.

Main AI News:

Large Language Models (LLMs) show emergent intelligence as their parameters, compute, and training data scale up, which some read as progress towards Artificial General Intelligence (AGI). However, deployed LLMs still grapple with persistent issues such as hallucinations, biases, and factual inaccuracies. Moreover, world knowledge keeps evolving after initial training, so what a model learned gradually goes stale. Correcting these errors promptly after deployment is paramount, yet retraining or fine-tuning is often prohibitively expensive, which raises sustainability concerns as knowledge keeps expanding over a model’s lifetime.

The long-term memory of LLMs, that is, the model parameters, can be updated through (re)pretraining, fine-tuning, and model editing, while working memory, which is consulted at inference time, is the route taken by retrieval-based methods like GRACE. Yet debate continues over whether fine-tuning or retrieval is more effective. Current methods of injecting knowledge face hurdles such as computational overhead and susceptibility to overfitting. Model editing techniques, including constrained fine-tuning and meta-learning, aim to make modifying LLMs more efficient. Recent work homes in on lifelong editing, but it often demands extensive domain-specific training, which is difficult because upcoming edits are hard to anticipate and the relevant data is hard to access.

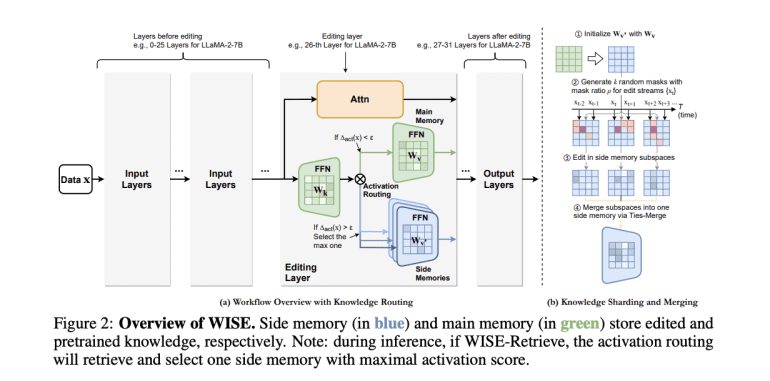

Following an examination of these challenges and methodologies, researchers from Zhejiang University and Alibaba Group propose WISE, a dual parametric memory architecture comprising a main memory for pretrained knowledge and a side memory for edited knowledge. Only the side memory is modified, and a routing mechanism determines which memory to consult for a given query. For continuous editing, WISE adds a knowledge-sharding mechanism that places different sets of edits in distinct parameter subspaces to avoid conflicts, then merges them into a shared memory.
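To make the routing idea concrete, here is a minimal sketch in plain Python. It assumes, purely for illustration, that the router scores a query by how far the side memory's output departs from the main memory's output and sends the query to the side memory when that score exceeds a threshold. The function names, the toy weight matrices, and the threshold value are all hypothetical, not WISE's actual implementation.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def routing_score(x: Vector, main_w: Matrix, side_w: Matrix) -> float:
    """L2 distance between side-memory and main-memory outputs (assumed score)."""
    main_out = matvec(main_w, x)
    side_out = matvec(side_w, x)
    return sum((s - m) ** 2 for s, m in zip(side_out, main_out)) ** 0.5


def route(x: Vector, main_w: Matrix, side_w: Matrix,
          threshold: float) -> Tuple[str, Vector]:
    """Use the side memory only when the query looks like an edited one."""
    if routing_score(x, main_w, side_w) > threshold:
        return "side", matvec(side_w, x)
    return "main", matvec(main_w, x)


# Toy weights: the side memory starts as a copy of the main memory and
# has been changed only along the second input dimension (hypothetical).
main_w = [[1.0, 0.0], [0.0, 1.0]]
side_w = [[1.0, 0.0], [0.0, 3.0]]

print(route([1.0, 0.0], main_w, side_w, threshold=0.5))  # unaffected query
print(route([0.0, 1.0], main_w, side_w, threshold=0.5))  # edited query
```

In this toy setup, a query that the edit never touched produces identical outputs from both memories, scores zero, and stays on the main memory, which is the behavior that protects pretrained knowledge.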

WISE comprises two principal facets: Side Memory Design and Knowledge Sharding and Merging. The former entails the establishment of a secondary memory initialized as a replica of a designated Feedforward Neural Network (FFN) layer of the LLM, tasked with storing edits, alongside a routing mechanism facilitating memory selection during inference. The latter leverages knowledge sharding to segment edits into randomized subspaces for modification and employs knowledge merging techniques to amalgamate these subspaces into a cohesive side memory. Additionally, WISE introduces WISE-Retrieve, enabling retrieval across multiple side memories based on activation scores, thereby enriching lifelong editing scenarios.
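The sharding and merging steps can be sketched as follows, under simplifying assumptions. Each shard is a copy of the side memory that is updated only at positions selected by a random mask, and the shards are then merged by averaging the changes at every position. The averaging rule is a stand-in chosen for brevity, not necessarily the merging technique WISE uses, and all names and values are hypothetical.

```python
import random
from typing import List


def random_mask(size: int, keep_ratio: float, rng: random.Random) -> List[bool]:
    """Pick a random subspace: True marks parameters this shard may edit."""
    k = max(1, int(size * keep_ratio))
    chosen = set(rng.sample(range(size), k))
    return [i in chosen for i in range(size)]


def apply_masked_update(base: List[float], update: List[float],
                        mask: List[bool]) -> List[float]:
    """Apply an edit's update only inside the shard's subspace."""
    return [b + u if m else b for b, u, m in zip(base, update, mask)]


def merge_shards(base: List[float], shards: List[List[float]]) -> List[float]:
    """Merge shards by averaging the changes made at each position.

    Positions that no shard changed keep their base value.
    """
    merged = []
    for i, b in enumerate(base):
        deltas = [s[i] - b for s in shards if s[i] != b]
        merged.append(b + sum(deltas) / len(deltas) if deltas else b)
    return merged


# Toy example with fixed masks so the result is easy to follow.
base = [0.0, 0.0, 0.0, 0.0]
shard_a = apply_masked_update(base, [1.0] * 4, [True, True, False, False])
shard_b = apply_masked_update(base, [3.0] * 4, [False, True, True, False])
print(merge_shards(base, [shard_a, shard_b]))

# In practice the masks would be drawn at random, for example:
mask = random_mask(size=10, keep_ratio=0.5, rng=random.Random(0))
```

Only the position where the two masks overlap needs any conflict resolution. Everywhere else a single shard's edit carries over unchanged, which is the intuition behind editing in separate subspaces before merging.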

In comparative evaluations, WISE outperforms existing methods in both Question-Answering (QA) and Hallucination settings. Particularly over long editing sequences, WISE shows significantly better stability and handles sequential edits well. While competitors like MEND and ROME are competitive initially, they falter as edit sequences grow longer. Directly altering long-term memory causes substantial declines in locality, thereby impeding generalization. Although GRACE excels in locality, it compromises generalization in continuous editing scenarios. WISE strikes a balance between reliability, generalization, and locality, surpassing baselines across diverse tasks. In out-of-distribution evaluations, WISE also generalizes better than its counterparts.
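For readers unfamiliar with the three metrics, the sketch below shows how they are commonly computed in model-editing evaluations: reliability is the success rate on the edited prompts themselves, generalization is the success rate on rephrasings of those prompts, and locality is the fraction of unrelated prompts whose answers are unchanged by the edit. The dictionary-backed "models" and the prompts are hypothetical toy stand-ins, not data from the evaluations described here.

```python
from typing import Callable, List, Optional, Tuple

Model = Callable[[str], Optional[str]]


def success_rate(model: Model, cases: List[Tuple[str, str]]) -> float:
    """Fraction of (prompt, target) pairs the model answers with the target."""
    return sum(model(prompt) == target for prompt, target in cases) / len(cases)


def locality(pre_edit: Model, post_edit: Model, prompts: List[str]) -> float:
    """Fraction of unrelated prompts whose answer is unchanged after editing."""
    return sum(pre_edit(p) == post_edit(p) for p in prompts) / len(prompts)


# Toy lookup tables standing in for a model before and after one edit.
pre = {"Capital of X?": "A", "What is X's capital?": "A", "2 + 2?": "4"}
post = {"Capital of X?": "B", "What is X's capital?": "A", "2 + 2?": "4"}

reliability = success_rate(post.get, [("Capital of X?", "B")])
generalization = success_rate(post.get, [("What is X's capital?", "B")])
unchanged = locality(pre.get, post.get, ["2 + 2?"])
print(reliability, generalization, unchanged)
```

The toy edit here is reliable and local but fails to generalize to the paraphrase, the kind of trade-off the comparison above attributes to GRACE.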

This research underscores how hard it is for current lifelong model editing approaches to achieve reliability, generalization, and locality at the same time, and attributes the difficulty to the gap between working memory and long-term memory. To bridge that gap, WISE combines a side memory with model merging techniques. The findings indicate that WISE can score highly on all three metrics at once across a range of datasets and LLMs.

Conclusion:

The introduction of the WISE approach represents a significant advancement in lifelong model editing for LLMs. Its ability to improve reliability, generalization, and locality at the same time holds substantial implications for the market, potentially driving wider adoption of continually updated LLMs across industries seeking efficient and adaptable AI solutions.