- Princeton University researchers introduce USACO, a rigorous coding benchmark of 307 challenging problems drawn from past USA Computing Olympiad contests.

- USACO emphasizes problem diversity, detailed analyses, and comprehensive test suites to evaluate the algorithmic reasoning of language models.

- Rooted in competitive programming, USACO requires models to devise a novel solution tailored to each problem.

- USACO exposes clear limitations in current language models: even GPT-4 achieves only an 8.7% zero-shot pass@1.

- USACO provides official analyses, reference solutions, and instructional materials, fostering the exploration of novel inference techniques.

- Combining self-reflection with retrieval significantly improves performance, but no method solves problems beyond the bronze difficulty tier.

- In a human-in-the-loop study, tailored hints help GPT-4 solve 13 of 15 problems that no previous model or method could solve.


Code generation is a critical domain for evaluating and deploying Large Language Models (LLMs). Widely used coding benchmarks such as HumanEval and MBPP are nearing saturation, with top solve rates above 90%, so continued progress in language models and inference techniques calls for more rigorous evaluation. What is needed are benchmarks that not only stress-test existing models but also offer ways to improve their algorithmic reasoning.

Competitive programming is a promising option, as it has long been used to objectively assess algorithmic skill and human reasoning under time pressure. However, existing evaluations in this area have often lacked the problem diversity, in-depth analyses, and comprehensive test suites needed to assess algorithmic reasoning effectively.

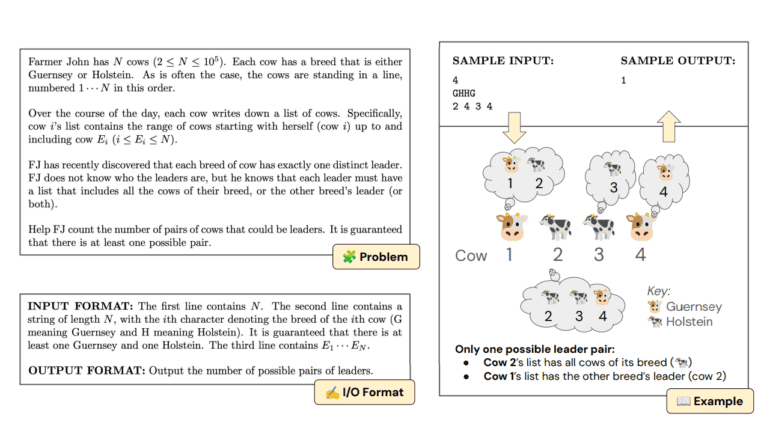

To address these shortcomings, a team of researchers introduced USACO, a carefully curated coding benchmark of 307 challenging problems drawn from past USA Computing Olympiad contests. Each problem includes a problem statement set in a fictional scenario, an example input-output pair, and an explanation of that example. Solving these problems requires a blend of algorithmic knowledge, mathematical skill, and common-sense reasoning, along with creative yet well-grounded approaches.
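Because each problem comes with input-output examples, a candidate program is typically judged by running it on each input and comparing what it prints with the expected output. The sketch below is a minimal, hypothetical harness, not USACO's actual judge: the function name `passes_all_tests` is illustrative, and it assumes Python solutions that read from stdin and write to stdout, compares whitespace-separated tokens, and counts a crash or timeout as a failure.

```python
import subprocess
import sys


def passes_all_tests(source: str, tests: list[tuple[str, str]], timeout: float = 2.0) -> bool:
    """Run a Python solution on each (stdin, expected stdout) pair.

    Hypothetical judge: the solution counts as solved only if every test
    passes. Output is compared token by token, ignoring whitespace layout.
    """
    for stdin_text, expected in tests:
        try:
            proc = subprocess.run(
                [sys.executable, "-c", source],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout,  # treat slow solutions as failures
            )
        except subprocess.TimeoutExpired:
            return False
        if proc.returncode != 0 or proc.stdout.split() != expected.split():
            return False
    return True


# Usage: a toy "add two numbers" task with two test cases.
tests = [("1 2\n", "3\n"), ("5 7\n", "12\n")]
print(passes_all_tests("a, b = map(int, input().split())\nprint(a + b)", tests))
```

Requiring every test to pass, rather than awarding partial credit, mirrors how a solve is usually counted when reporting pass rates.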

Unlike earlier benchmarks, which focus primarily on program synthesis, USACO requires models to reason across diverse scenarios and devise a bespoke algorithm for each problem. Even GPT-4, the most advanced language model tested, reaches only 8.7% zero-shot pass@1 on USACO.
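The 8.7% figure is a pass@1 score: the fraction of problems solved when the model gets a single attempt per problem. As an illustration only, the sketch below implements the unbiased pass@k estimator introduced with the HumanEval benchmark (Chen et al., 2021); whether USACO computes its scores exactly this way is an assumption, and the function names `pass_at_k` and `benchmark_score` are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of sampled solutions, c: how many passed all tests,
    k: attempt budget. Returns 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems, given an (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# One sample per problem: pass@1 is simply the fraction of problems solved.
print(benchmark_score([(1, 1), (1, 0), (1, 0), (1, 0)]))  # 0.25
```

With a single sample per problem the estimator reduces to "solved or not", so a zero-shot pass@1 of 8.7% means roughly 27 of the 307 problems were solved.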

USACO also provides official analyses, reference solutions, and thorough unit tests for each problem, along with instructional materials similar to competitive programming textbooks. These resources are meant to encourage the exploration of new inference techniques, and the researchers use them to build a range of baseline strategies, from self-reflection to retrieval-based methods. Notably, combining retrieval with self-reflection substantially boosts performance, tripling GPT-4’s zero-shot solve rate. Nonetheless, every approach still fails on problems beyond the bronze difficulty tier.
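The retrieval-plus-self-reflection strategy can be pictured as a loop: retrieve similar solved problems to include in the prompt, generate code, run the tests, and feed any failure report back to the model. This is a hypothetical illustration, not the authors' implementation: `generate` and `check` are placeholders for a model call and a test harness, and simple bag-of-words cosine similarity stands in for whatever retriever the paper actually uses.

```python
from collections import Counter
from math import sqrt
from typing import Callable, Optional


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[tuple[str, str]], k: int = 1) -> list[tuple[str, str]]:
    """Return the k (problem, solution) pairs whose problem text best matches the query."""
    q = Counter(query.lower().split())
    ranked = sorted(corpus, key=lambda item: cosine(q, Counter(item[0].lower().split())), reverse=True)
    return ranked[:k]


def solve_with_reflection(
    problem: str,
    corpus: list[tuple[str, str]],
    generate: Callable[[str], str],            # hypothetical model call: prompt -> code
    check: Callable[[str], tuple[bool, str]],  # hypothetical judge: code -> (passed, report)
    max_attempts: int = 3,
) -> Optional[str]:
    # Retrieval: prepend similar solved problems as worked examples.
    examples = "\n\n".join(f"Problem: {p}\nSolution:\n{s}" for p, s in retrieve(problem, corpus))
    prompt = f"{examples}\n\nNow solve:\n{problem}"
    feedback = ""
    for _ in range(max_attempts):
        code = generate(prompt + feedback)
        passed, report = check(code)
        if passed:
            return code
        # Self-reflection: show the model its failure and ask it to revise.
        feedback = f"\n\nYour previous attempt failed: {report}\nReflect on the error and try again."
    return None
```

Keeping `generate` and `check` as plain callables lets the same loop be tried with any model client or judge; in practice `check` could wrap a test harness that runs the candidate against the problem's unit tests.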

A human-in-the-loop study adds further insight. When given tailored hints, GPT-4 solves 13 of 15 problems that were previously unsolvable by any model or method, outperforming all earlier approaches.

Conclusion:

Princeton’s USACO benchmark marks a significant shift in how code language models are evaluated, underscoring the need for more rigorous assessment as models evolve. It also highlights the demand for stronger algorithmic reasoning and for new inference techniques, both of which will shape how language models are developed and deployed.