- Microsoft introduces Recall, an AI tool for Windows computers, enabling users to retrieve past on-screen content.

- Privacy experts express concerns over potential misuse and lack of safeguards against unauthorized access.

- Regulators, including the UK’s ICO, investigate Recall’s privacy protections.

- Microsoft's CEO assures users that data remains local and under their control, but critics emphasize potential vulnerabilities.

- Workplace implications and past privacy concerns with biometric security measures are highlighted.

- Advocates stress Recall’s utility, while skeptics view it as a privacy regression, citing potential exploitation by threat actors.

Main AI News:

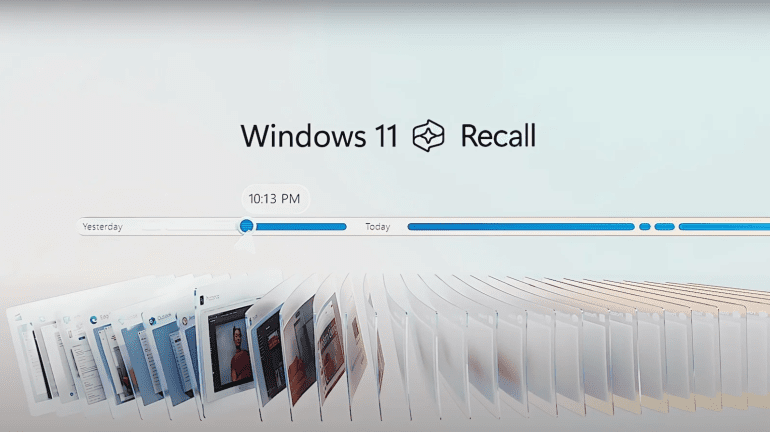

Microsoft's latest AI innovation is ringing privacy alarm bells. This week, the tech giant unveiled Recall, a new tool for Windows computers described as a personal "time machine" that can retrieve any content previously displayed on screen, from documents to images and websites. Unlike traditional keyword search, Recall continuously captures screenshots and saves them locally, then uses AI to process them and make them searchable.

Although Recall is an advance in semantic search, the rapid pace of technological development, combined with still-evolving regulatory frameworks, raises red flags about responsible use. Jen Golbeck, an AI professor at the University of Maryland who focuses on privacy, warns that the tool could become a nightmare if it falls into the wrong hands. She stresses that even though the data is stored locally, user protection remains uncertain: there are no safeguards against unauthorized access, even when privacy measures such as incognito mode are used.

Regulators, including the UK's Information Commissioner's Office (ICO), are scrutinizing Recall's privacy safeguards. Microsoft's CEO, Satya Nadella, reassures users that the data stays on the device, limiting external exposure. Geoff Blaber, CEO of CCS Insight, echoes this view, pointing to user control over whether the feature is enabled and who can access it as mitigating factors.

However, Golbeck points to scenarios where these protections may fail, particularly in sensitive contexts such as oppressive regimes or abusive relationships. Workplace implications also loom large, since personal activity on work devices could become subject to scrutiny. Whatever the tool's utility, Golbeck warns that its privacy risks are pervasive and often overlooked in technology development, echoing earlier concerns about biometric security measures.

While advocates like Blaber emphasize the feature's potential benefits, Michela Menting of ABI Research sees it as a privacy regression. She argues that relying on the requirement for physical access to the device underestimates the ingenuity of threat actors, who may find ways to exploit Recall given enough time and effort. Addressing these concerns will require tangible proof of value, security, and privacy in real-world settings, a challenge Microsoft and its partners must confront head-on.

Conclusion:

The introduction of Microsoft's Recall AI tool marks a pivotal moment at the intersection of technology and privacy. While it offers enhanced search capabilities, concerns over potential misuse and inadequate safeguards demand rigorous scrutiny. Regulatory investigations and industry responses will shape the trajectory of AI development in the market, which will require a delicate balance between innovation and privacy protection.