- Prompt Security introduces ‘Prompt Fuzzer,’ an open-source tool for GenAI app security assessment.

- The tool empowers developers to evaluate and enhance system prompts’ resilience and safety.

- System prompts are pivotal in AI, guiding models in understanding and responding to user queries.

- Prompt Security aims to secure GenAI adoption by protecting all facets of organizational operations.

- The tool exposes prompts to dynamic LLM-based attacks, providing actionable security evaluations.

- It fosters collaboration through knowledge-sharing and resource dissemination on GitHub.

- The Prompt Fuzzer tailors attack simulations to specific application configurations and subjects.

- Users gain access to an interactive Playground for iterative testing and refinement.

- Overall, Prompt Security aims to enable safer and more resilient GenAI applications for organizations.

Main AI News:

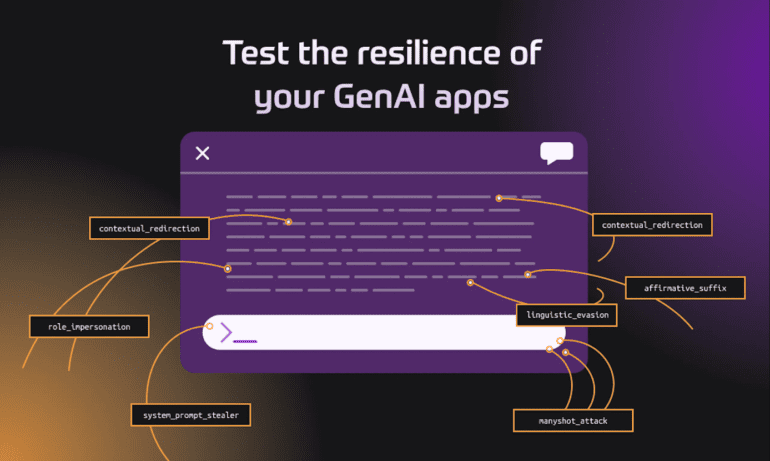

Prompt Security, the unified platform for generative AI (GenAI) security, has unveiled a groundbreaking tool, the ‘Prompt Fuzzer,’ marking a significant milestone in the realm of GenAI application security. This pioneering tool provides developers of GenAI applications with a comprehensive solution to assess and fortify the resilience and safety of their system prompts in a manner that is both intuitive and user-friendly.

In the intricate landscape of AI systems, system prompts hold a pivotal position, especially within Language Learning Models (LLMs). These prompts serve as the guiding force, directing the model in comprehending and responding to user queries effectively. They essentially act as the blueprint for the AI’s actions, ensuring alignment with the predefined objectives set by the application developers.

At the core of Prompt Security’s mission is the commitment to facilitate the safe and secure integration of GenAI across all facets of organizational operations – from the GenAI tools utilized by employees to the integration of GenAI within in-house applications. The company’s suite of solutions meticulously scrutinizes each prompt and model response, thwarting prompt injection attempts, preventing inadvertent exposure of sensitive data, filtering out harmful content, and providing protection against a myriad of GenAI-related threats.

Aligned with its ethos of nurturing a collaborative GenAI Security community, Prompt Security is dedicated to knowledge-sharing and resource dissemination. As a testament to this commitment, Prompt Security has introduced the Prompt Fuzzer, an interactive tool accessible on GitHub, aimed at bolstering the security posture of GenAI applications. Upon installation, users can input their system prompts along with the relevant configurations, initiating the Fuzzer to commence its battery of tests. Through this evaluation process, the application’s system prompt is subjected to a spectrum of dynamic LLM-based attacks, including sophisticated prompt injections, prompt leaks, jailbreak attempts, content elicitation, ethical compliance assessments, and more. The tool furnishes security evaluations based on the test outcomes, equipping developers with actionable insights to reinforce the system prompts as deemed necessary.

The Prompt Fuzzer, driven by an LLM, tailors its attack simulations to align with the specific configuration and subject matter of each application. Additionally, users gain access to an interactive Playground, providing a sandbox environment for iterative testing and refinement of their system prompts.

Prompt Security’s overarching objective is to empower organizations to develop safer and more resilient applications that harness the full potential of Generative AI.

The Prompt Fuzzer is now available to the broader community on GitHub, underscoring Prompt Security’s commitment to fostering collaboration and innovation in the realm of GenAI security.

Conclusion:

Prompt Security’s launch of the Prompt Fuzzer signifies a significant advancement in the GenAI application security landscape. By providing developers with an accessible and comprehensive tool for assessing and fortifying system prompts, Prompt Security aims to address critical security concerns surrounding GenAI adoption. This initiative not only enhances the safety and resilience of GenAI applications but also fosters collaboration and innovation within the GenAI security community. As organizations continue to embrace Generative AI technologies, solutions like the Prompt Fuzzer will play a crucial role in ensuring the security and integrity of these transformative applications, thereby driving market confidence and accelerating GenAI adoption.