- PyramidInfer optimizes LLM inference by compressing the KV cache while considering inter-layer dependencies and pre-computation memory demands.

- Experimental results demonstrate a 2.2x improvement in throughput and a 54% reduction in KV cache memory compared to existing methods.

- Strategies for serving chatbot queries efficiently aim to maximize GPU parallelism, either by increasing the available GPU memory (pipeline parallelism, KV cache offload) or by reducing the KV cache footprint.

- PyramidInfer stands out by incorporating layer-specific compression in both prefill and generation phases.

- Fundamental hypotheses, including Inference Context Redundancy (ICR) and Recent Attention Consistency (RAC), underpin PyramidInfer’s design and effectiveness.

- Rigorous evaluations across various tasks and models confirm PyramidInfer’s significant reductions in GPU memory usage and increased throughput while maintaining generation quality.

Main AI News:

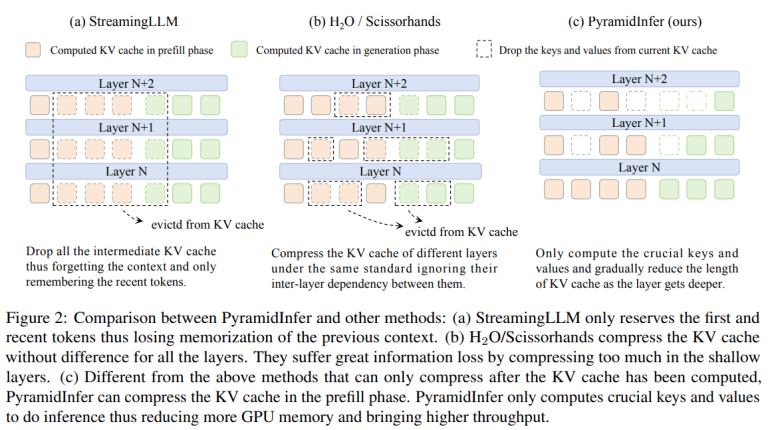

In the realm of natural language processing, the advent of large language models (LLMs) like GPT-4 has undoubtedly heralded a new era of language comprehension. However, despite their remarkable capabilities, these models grapple with the challenge of high GPU memory consumption during inference, thus impeding their scalability for real-time applications such as chatbots. While existing methods have attempted to mitigate this issue by compressing the key-value (KV) cache, they often fail to account for critical factors like inter-layer dependencies and pre-computation memory demands, leaving much room for improvement.

Enter PyramidInfer, a method developed collaboratively by researchers from Shanghai Jiao Tong University, Xiaohongshu Inc., and South China University of Technology. Unlike its predecessors, PyramidInfer compresses the KV cache layer by layer while accounting for inter-layer dependencies and the memory demands of pre-computation. Drawing inspiration from the consistency of attention weights among recent tokens, PyramidInfer significantly reduces GPU memory usage during LLM inference.

Experiments show a 2.2x improvement in throughput and a 54% reduction in KV cache memory compared to existing methods. These results underscore the effectiveness of PyramidInfer across diverse tasks and models.
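To put the 54% figure in perspective, the sketch below estimates how large a full KV cache can get and what a 54% saving would leave. It is a back-of-the-envelope calculation, not a reproduction of the reported experiment: the model shape (40 layers, hidden size 5120, 16-bit values) is the commonly cited LLaMA 2-13B configuration, while the sequence length, batch size, and the function name `kv_cache_bytes` are illustrative assumptions.

```python
def kv_cache_bytes(num_layers, hidden_size, seq_len, batch_size, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer of a decoder-only model."""
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * hidden_size * seq_len * batch_size * bytes_per_value


GIB = 1024 ** 3

# Assumed shape: 40 layers, hidden size 5120, fp16 (2 bytes per value).
# Assumed workload: 4096-token sequences, batch of 8 (hypothetical numbers).
full = kv_cache_bytes(num_layers=40, hidden_size=5120, seq_len=4096, batch_size=8)

# Apply the article's reported 54% reduction purely as an illustration.
compressed = full * (1 - 0.54)

print(f"full KV cache:        {full / GIB:.1f} GiB")
print(f"after a 54% saving:   {compressed / GIB:.1f} GiB")
```

Under these assumptions the full cache alone occupies about 25 GiB, which is why KV cache size, rather than model weights, often limits the batch size a single GPU can serve.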

As demand for chatbot queries continues to surge, serving them efficiently depends on maximizing GPU parallelism. One line of work increases the GPU memory available for inference through pipeline parallelism and KV cache offload, while another reduces the KV cache footprint itself. Techniques such as FlashAttention 2 and PagedAttention minimize memory waste through optimized CUDA operations. PyramidInfer stands out by addressing gaps in these approaches, notably by applying layer-specific compression in both the prefill and generation phases.

At the heart of PyramidInfer’s design lie two hypotheses: Inference Context Redundancy (ICR) and Recent Attention Consistency (RAC). Experiments with the 40-layer LLaMA 2-13B model supported the ICR hypothesis, showing that deeper layers exhibit higher redundancy in context keys and values, so their KV cache can be reduced substantially without compromising output quality. The experiments also supported the RAC hypothesis: certain keys and values consistently attract attention from recent tokens, which makes it possible to identify the pivotal contexts (PVCs) that matter most for inference. Building on these insights, PyramidInfer compresses the KV cache in both the prefill and generation phases.
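The two hypotheses can be illustrated with a small sketch. This is a simplified toy under stated assumptions, not the authors' implementation: the function names, the linear retention schedule, and the 1.0 to 0.4 ratios are hypothetical. `select_pivotal_contexts` follows the RAC idea by scoring each context position with the average attention it receives from recent tokens, and `layer_keep_ratios` follows the ICR idea by keeping less context in deeper layers, which gives the cache its pyramid shape.

```python
def select_pivotal_contexts(attn_rows, num_recent, keep_ratio):
    """Return the positions to keep in one layer's KV cache.

    attn_rows[i][j] is the attention weight query token i places on key j
    (assumed to be already averaged over heads). The last `num_recent`
    rows belong to the recent tokens.
    """
    seq_len = len(attn_rows[0])
    context_len = seq_len - num_recent
    recent = attn_rows[-num_recent:]
    # RAC: score each context position by the mean attention from recent tokens.
    scores = [sum(row[j] for row in recent) / num_recent for j in range(context_len)]
    # Keep only the highest-scoring fraction of the context (at least one position).
    budget = max(1, int(context_len * keep_ratio))
    top = sorted(range(context_len), key=lambda j: scores[j], reverse=True)[:budget]
    # Recent tokens are always kept; indices are returned in original order.
    return sorted(top) + list(range(context_len, seq_len))


def layer_keep_ratios(num_layers, first=1.0, last=0.4):
    """ICR: deeper layers are assumed more redundant, so they retain less.

    The linear schedule and its endpoints are illustrative assumptions.
    """
    if num_layers == 1:
        return [first]
    step = (first - last) / (num_layers - 1)
    return [first - step * i for i in range(num_layers)]


# Toy causal attention map: 3 context tokens followed by 2 recent tokens.
attn = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0, 0.0],
    [0.2, 0.3, 0.5, 0.0, 0.0],
    [0.6, 0.1, 0.1, 0.2, 0.0],
    [0.5, 0.05, 0.15, 0.1, 0.2],
]
kept = select_pivotal_contexts(attn, num_recent=2, keep_ratio=0.7)
print(kept)  # positions 0 and 2 are the pivotal contexts; 3 and 4 are recent
print(layer_keep_ratios(4))
```

In this toy example the recent tokens attend mostly to positions 0 and 2, so position 1 is dropped from the cache. In a real model the same selection would run per layer with that layer's own retention ratio.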

PyramidInfer’s gains are not merely theoretical, as evidenced by rigorous evaluations across a spectrum of tasks and models. From language modeling on wikitext-v2 to mathematical reasoning with GSM8K, PyramidInfer consistently delivers substantial reductions in GPU memory usage and higher throughput without sacrificing generation quality. Its versatility is further underscored by successful tests on various models of different sizes, including LLaMA 2, LLaMA 2-Chat, Vicuna 1.5-16k, and CodeLLaMA. Compared with full cache methods and local strategies, PyramidInfer emerges as the frontrunner in these evaluations.

Conclusion:

The introduction of PyramidInfer marks a significant advance in LLM inference efficiency. Its ability to reduce GPU memory usage and raise throughput while maintaining generation quality makes it a strong option for serving LLMs at scale. With its layer-aware approach to KV cache compression, validated through rigorous experimentation, PyramidInfer addresses the growing demand for efficient and scalable natural language processing technologies. Businesses adopting it may see better performance, lower infrastructure costs, and a competitive edge in delivering real-time language-based applications.