TL;DR:

- Perseus, a groundbreaking framework, slashes energy consumption in large-scale AI model training by up to 30%.

- Large language models like GPT-3 demand substantial energy, equivalent to multiple households’ electricity consumption over weeks.

- Researchers from the University of Michigan and the University of Washington introduced Perseus to reduce energy use without compromising training efficiency.

- Energy challenge breakdown: Intrinsic (computation imbalance) and Extrinsic (parallel pipelines) energy bloat.

- Perseus pre-characterizes the trade-off between iteration time and energy, minimizing intrinsic energy bloat while strategically reducing energy in non-straggler pipelines.

- Simulations support Perseus’ efficacy, showing substantial energy savings.

- Implications for the future: Perseus enhances sustainability in distributed training for Large Language Models and GenAI.

Main AI News:

In the realm of artificial intelligence, energy consumption has emerged as a critical concern, particularly in the context of training large language models like GPT-3. These computational behemoths demand substantial amounts of energy during their training and inference phases, and the environmental impact is undeniable. In this era of heightened environmental consciousness, addressing this issue is paramount.

A Paradigm Shift in Energy Optimization

Researchers from the University of Michigan and the University of Washington have focused on optimizing energy consumption without compromising model efficiency. Their work has given birth to Perseus, a pioneering framework that promises to slash the energy consumption of large-scale machine learning and AI model training by up to 30% by removing energy bloat.

Understanding the Energy Challenge

The energy usage of these models is multifaceted, influenced by factors such as model size, task complexity, hardware specifications, and operational duration. Training them necessitates substantial computational resources, often leveraging high-performance GPUs or TPUs, leading to a significant carbon footprint. The sheer magnitude of energy consumption during the training of models like GPT-3 can equate to the electricity consumption of multiple households over an extended period.

Diving into the Energy Quandary

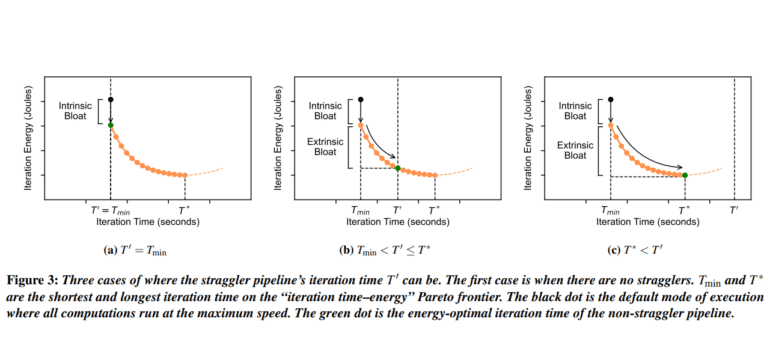

To address this challenge, the researchers dissected wasted energy into intrinsic and extrinsic sources of bloat. Intrinsic energy bloat is attributed to computation imbalances between pipeline stages, while extrinsic energy bloat arises when multiple pipelines run in parallel to scale out training with massive datasets. The latter is especially wasteful: the pipelines must synchronize, so any pipeline that runs faster than the straggler pipeline spends extra energy only to wait for it.
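To make the two kinds of bloat concrete, here is a minimal Python sketch. It is a toy illustration, not Perseus' actual method: the helper `slack_fractions` and all timing numbers are hypothetical, and it only measures how much of the time each stage or pipeline would spend waiting on the slowest one.

```python
def slack_fractions(durations):
    """For each duration, return the fraction of the slowest duration spent waiting."""
    slowest = max(durations)
    return [(slowest - d) / slowest for d in durations]


# Hypothetical per-stage compute times (ms) inside one pipeline.
# Stages faster than the slowest one finish early and idle: intrinsic bloat.
stage_ms = [80.0, 100.0, 90.0, 70.0]
intrinsic_slack = slack_fractions(stage_ms)

# Hypothetical per-iteration times (s) of four parallel pipelines.
# The last pipeline is the straggler; the others wait for it: extrinsic bloat.
pipeline_s = [10.0, 10.0, 10.0, 12.5]
extrinsic_slack = slack_fractions(pipeline_s)

print("intrinsic slack per stage:", intrinsic_slack)
print("extrinsic slack per pipeline:", extrinsic_slack)
```

Any entry above zero marks a stage or pipeline that could, in principle, run slower and use less energy without lengthening the training iteration.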

The Perseus Solution

Perseus represents a holistic approach to tackling energy inefficiency in machine learning and AI model training. It efficiently pre-characterizes the entire trade-off between iteration time and energy, which lets it minimize intrinsic energy bloat under normal operating conditions. Moreover, it mitigates extrinsic energy bloat by strategically reducing the energy used by non-straggler pipelines, which have time to spare.
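The idea of reducing energy in non-straggler pipelines can be sketched as a simple selection problem. The example below is an illustration under stated assumptions, not the algorithm Perseus uses: the function `pick_operating_point`, the `(time, energy)` pairs, and the straggler time are all hypothetical. It assumes a pre-characterized list of operating points is already available for a pipeline and picks the lowest-energy one that still finishes by the time the straggler does.

```python
from typing import List, Tuple

# One operating point: (iteration_time_s, energy_j). Values are hypothetical.
Point = Tuple[float, float]


def pick_operating_point(frontier: List[Point], deadline_s: float) -> Point:
    """Pick the lowest-energy point whose iteration time meets the deadline.

    If no point meets the deadline, fall back to the fastest point.
    """
    feasible = [p for p in frontier if p[0] <= deadline_s]
    if not feasible:
        return min(frontier, key=lambda p: p[0])
    return min(feasible, key=lambda p: p[1])


# Hypothetical pre-characterized trade-off for a non-straggler pipeline:
# running slower costs time but saves energy.
frontier = [(10.0, 5000.0), (11.0, 4300.0), (12.0, 3900.0), (13.0, 3700.0)]

# Hypothetical straggler iteration time that every pipeline must wait for.
straggler_time_s = 12.5

chosen = pick_operating_point(frontier, straggler_time_s)
saving = 1 - chosen[1] / frontier[0][1]
print(f"chosen point: {chosen}, energy saved vs. fastest: {saving:.0%}")
```

In this toy setup the non-straggler slows to the 12-second point, which still beats the straggler, so the iteration is no longer than before while the pipeline uses less energy.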

A Pioneering Framework

The researchers conducted simulations to validate Perseus’ efficacy, employing hybrid parallelism in various strong scaling configurations. They measured the reduction in energy bloat and the resulting extrinsic energy savings. By reducing the number of micro-batches and optimizing the pipeline iterations, they successfully eliminated intrinsic energy bloat, resulting in a substantial reduction in energy consumption.

Implications for the Future

The integration of Perseus into the training workflow holds immense promise for the future of AI development. It stands to make distributed training for Large Language Models (LLMs) and GenAI significantly more sustainable. With Perseus leading the charge, the AI community is poised to not only enhance efficiency but also reduce its carbon footprint, ensuring a greener and more responsible AI ecosystem.

Conclusion:

The introduction of Perseus represents a significant breakthrough in the field of machine learning and AI training. Its ability to reduce energy consumption by up to 30% while maintaining model efficiency has the potential to reshape the market. Companies and organizations that adopt Perseus can significantly lower their energy costs and environmental footprint, positioning them as leaders in sustainable AI development. This innovation aligns with the growing demand for environmentally responsible AI solutions and sets a new standard for energy-efficient machine learning.