TL;DR:

- UCLA’s research introduces ‘SPIN’ (Self-Play fIne-tuNing) method for enhancing Large Language Models (LLMs).

- LLMs have widespread applications but require resource-intensive fine-tuning processes.

- SPIN allows LLMs to self-improve without additional human-annotated data.

- SPIN operates as a two-player game, refining LLM responses to align with human-generated ones.

- Promising results show substantial model score improvements with SPIN.

- SPIN has the potential to revolutionize AI model development.

Main AI News:

In the latest groundbreaking research paper from UCLA, a revolutionary approach called ‘SPIN’ (Self-Play fIne-tuNing) has been introduced, designed to elevate the capabilities of Large Language Models (LLMs) without the need for copious amounts of human-annotated data. This innovation, detailed in the paper, aims to unlock the full potential of weak LLMs and transform them into robust, high-performance AI models.

Large Language Models have undeniably transformed the landscape of Artificial Intelligence, demonstrating exceptional prowess in natural language processing across various domains, from mathematical problem-solving to code generation and even the composition of legal opinions. Their versatility and adaptability make them indispensable in nearly every facet of AI research and application.

However, enhancing the performance of LLMs typically relies on resource-intensive and time-consuming processes like Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF), both of which necessitate substantial human-annotated data. This limitation has posed a significant challenge in the quest to refine LLMs efficiently.

Enter ‘SPIN,’ a pioneering fine-tuning method conceived by UCLA researchers. Unlike traditional approaches, SPIN leverages a unique concept: self-play. This means that LLMs engage in a kind of intellectual contest with themselves, refining their capabilities without external guidance or supervision.

While previous attempts have explored alternatives, such as using synthetic data with binary feedback or employing weaker models to guide stronger ones, SPIN takes a more streamlined and effective route. It eliminates the need for human binary feedback and operates seamlessly with just one LLM, simplifying the entire process.

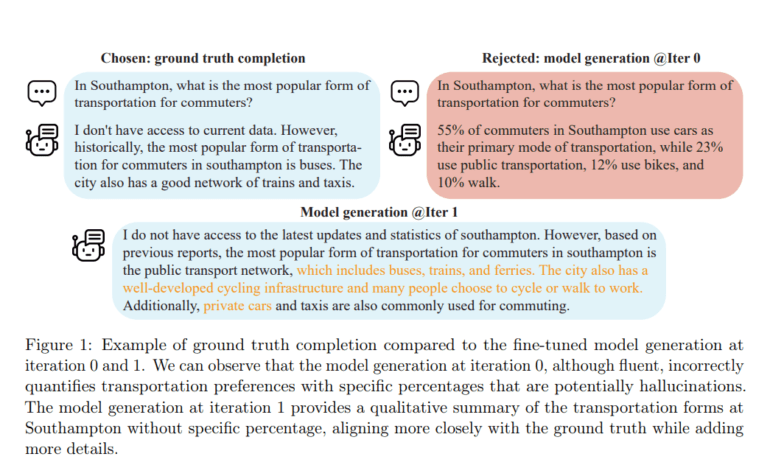

The essence of SPIN can be likened to a two-player game. In this scenario, the first LLM generates responses, striving to mimic the human-annotated dataset’s responses as closely as possible. Meanwhile, the second LLM assumes the role of a discerning judge, distinguishing between the responses generated by the first LLM and authentic human-generated responses. Through iterative fine-tuning, the first LLM gradually improves, aiming to generate responses indistinguishable from those created by humans.

The results of this innovative method are promising. For instance, when prompted to list popular forms of transportation in Southampton, an LLM initially struggled to provide accurate information. However, after applying SPIN, the model progressively improved its responses, aligning more closely with the ground truth.

To assess the efficacy of SPIN, the researchers employed the zephyr-7b-sft-full model, derived from the pre-trained Mistral-7B and further fine-tuned on an SFT dataset. The base model generated synthetic responses for 50,000 randomly sampled prompts from the dataset. The outcomes revealed that SPIN led to a remarkable 2.66% improvement in the average model score at iteration 0. Subsequently, in the next iteration, the LLM from the previous cycle generated new responses for SPIN, resulting in an additional 1.32% enhancement in the average score.

Conclusion:

SPIN represents a game-changing approach to enhancing the capabilities of Large Language Models. By leveraging self-play and minimizing the reliance on human-annotated data, this method offers a more efficient and effective means of refining AI models, paving the way for even more remarkable advancements in the field of Artificial Intelligence.