- UK at forefront of global initiative to test advanced AI models for safety risks before public release

- International collaboration includes countries like South Korea, US, Japan, and France

- Seoul AI Summit aims to create unified regulatory framework akin to Montreal Protocol

- UK’s AI Safety Institute announced as world’s first government-backed organization for AI safety

- Network of institutes to share information and resources to enhance understanding of AI safety

- Urgency emphasized as leaders prepare for Paris summit to transition to regulatory discussions

- Funding allocated for AI safety testing; more substantial program needed for comprehensive risk understanding

- Summit criticized for lack of inclusivity; key voices, like Korean civil society groups, absent

Main AI News:

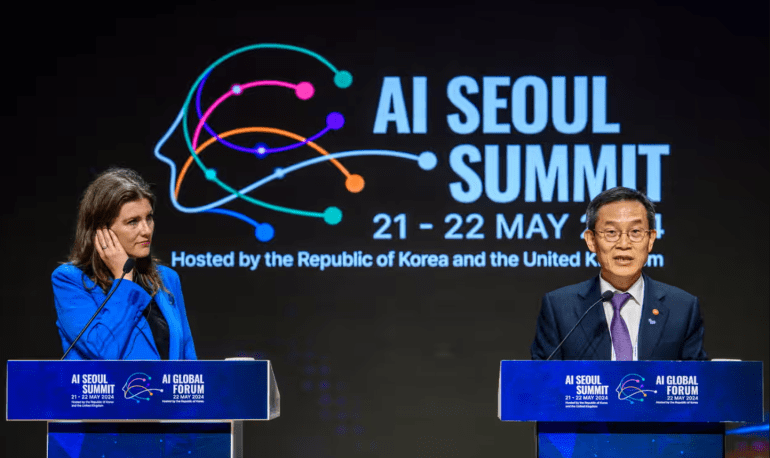

To protect the public from the potential risks of advanced AI, the United Kingdom is leading an international initiative to test cutting-edge AI models for safety before they are widely deployed. With regulators racing to establish a workable safety framework before the Paris summit in six months, the UK’s AI Safety Institute, the first of its kind, has now been joined by counterpart institutes in South Korea, the United States, Singapore, Japan, and France.

At the Seoul AI Summit, regulators hope to foster collaboration among these institutes, envisioning a 21st-century equivalent of the Montreal Protocol, the influential accord that regulated CFCs to repair the ozone layer. Before that can happen, however, the institutes must agree on how to combine their disparate approaches and regulations into a unified effort to oversee AI research.

Michelle Donelan, the UK’s technology secretary, underscored the significance of these developments, noting, “At Bletchley, we introduced the UK’s AI Safety Institute—the world’s inaugural government-backed organization dedicated to advanced AI safety for the greater good.” She attributed the emergence of a global network of similar entities to what she termed the “Bletchley effect.”

The institutes will begin sharing information about AI models, including their capabilities, limitations, and associated risks. They will also collaborate on monitoring specific “AI harms and safety incidents” and pool resources to improve global understanding of AI safety science.

During the inaugural meeting of these nations, Donelan cautioned that the establishment of the network marks only the initial phase. She stressed, “We must not become complacent. As AI development accelerates, our efforts must match that pace if we are to mitigate risks and capitalize on the boundless opportunities for our society.”

With a deadline looming, the safety institutes face intense scrutiny. This autumn, leaders will convene again, this time in Paris, for the first comprehensive AI summit since Bletchley. To move the discussion there from AI model testing to regulatory frameworks, the institutes must demonstrate mastery of what Donelan described as “the nascent science of frontier AI testing and evaluation.”

Jack Clark, co-founder and head of policy at AI lab Anthropic, said that establishing a functional safety institute had put the UK “a hundred miles” ahead in the pursuit of safe AI compared with two years ago. He urged governments to keep investing in these institutes so they can build the necessary expertise and generate substantial evidence.

As part of this scientific effort, Donelan announced an £8.5 million funding allocation to advance AI safety testing. Francine Bennett, interim director of the Ada Lovelace Institute, called the funding a commendable start but stressed the need for a more extensive program to understand and mitigate social and systemic risks.

Although praised for its objectives, the summit was criticized for being exclusionary. Korean civil society groups were absent, with the host nation represented solely by academia, government, and industry. Participation was also restricted to major AI corporations, prompting Roeland Decorte, president of the AI Founders Association, to raise concerns that the focus on large-scale models came at the expense of broader industry inclusivity and sustainability. Decorte posed a crucial question: “Do we aspire to regulate and construct a framework for a future AI economy that fosters sustainability for the majority of industry players?”

Conclusion:

The concerted efforts led by the UK and supported by a global network of AI safety institutes signify a crucial step towards mitigating risks associated with advanced AI deployment. However, the exclusionary nature of the summit underscores the necessity for broader inclusivity and collaboration across diverse stakeholders to ensure comprehensive regulatory frameworks that foster sustainability and innovation in the AI market.