- Large Language Models (LLMs) face challenges in complex reasoning and planning scenarios due to limitations in training methodologies.

- AlphaLLM integrates Monte Carlo Tree Search (MCTS) with LLMs, enabling autonomous self-improvement without additional data annotations.

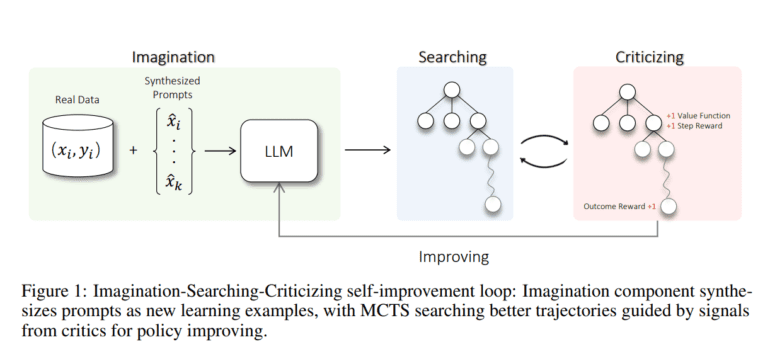

- The framework combines three components (an imagination module, MCTS, and critic models) so that the model can simulate and evaluate candidate responses on its own.

- Empirical testing on mathematical reasoning tasks demonstrates significant performance boosts, validating AlphaLLM’s efficacy.

- AlphaLLM raises accuracy from 57.8% to 92.0% on GSM8K and from 20.7% to 51.0% on MATH.

Main AI News:

In artificial intelligence, Large Language Models (LLMs) stand out for their ability to understand and generate human-like text across a wide range of applications. They underpin technologies that streamline and augment text-based tasks in industries from customer service to content creation. Despite these strengths, modern LLMs still struggle in scenarios that require complex reasoning and strategic planning. These difficulties stem largely from prevailing training methods, which depend on large amounts of carefully annotated data, a resource that is often scarce or impractical to collect.

Recent work has introduced prompting techniques such as Chain-of-Thought, which improves reasoning in models like GPT-4 by having them spell out intermediate steps. Other studies show that LLMs can be refined by fine-tuning on high-quality data, but such efforts are limited by how little of that data is available. Self-correction strategies offer another route, letting LLMs iteratively refine their outputs through internal feedback. Meanwhile, Monte Carlo Tree Search (MCTS), the planning algorithm behind game-playing systems such as AlphaGo and AlphaZero, has also been adapted to strengthen decision-making in language models.

Researchers at Tencent AI Lab have now introduced AlphaLLM, a framework that integrates MCTS with LLMs so that models can improve themselves without additional data annotations. Its key idea is to bring the strategic planning methods of board-game AI into language processing, enabling the model to simulate and evaluate potential responses on its own.

The AlphaLLM methodology rests on three components: an imagination module, which synthesizes new prompts to widen the range of learning scenarios; the MCTS mechanism, which searches over candidate responses; and critic models, which assess how good those responses are. The researchers validated the framework on mathematical reasoning, using the GSM8K and MATH datasets. In this setup, AlphaLLM strengthens its problem-solving by learning from the outcomes of its own searches and the critics' feedback, improving its decision-making without relying on external annotated data.
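The search-and-critique loop described above can be illustrated with a plain UCT-style MCTS. The sketch below is a toy, not AlphaLLM's implementation: it assumes a made-up task in which a "response" is a sequence of three digits that should sum to a target, and `imagine_prompts` and `critic` are hypothetical stand-ins for the imagination module and critic models. AlphaLLM itself searches over text generated by an LLM and scores it with its critic models.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass, field

# Hypothetical toy task (not from AlphaLLM): a "response" is DEPTH steps, each a
# digit 0-9, and a good response is one whose digits sum to the prompt's target.
STEPS = list(range(10))
DEPTH = 3


def imagine_prompts(n: int, rng: random.Random) -> list[int]:
    """Hypothetical stand-in for the imagination module: synthesize new targets."""
    return [rng.randint(0, 9 * DEPTH) for _ in range(n)]


def critic(target: int, response: tuple[int, ...]) -> float:
    """Hypothetical stand-in critic: score in [0, 1], 1.0 only on an exact hit."""
    return 1.0 - abs(target - sum(response)) / (9 * DEPTH)


@dataclass
class Node:
    state: tuple[int, ...]  # partial response built so far
    parent: Node | None = None
    children: dict[int, Node] = field(default_factory=dict)
    visits: int = 0
    value: float = 0.0  # sum of rewards backed up through this node

    def is_terminal(self) -> bool:
        return len(self.state) == DEPTH

    def uct_child(self, c: float) -> Node:
        # UCT: balance a child's mean reward against how rarely it was visited.
        return max(
            self.children.values(),
            key=lambda ch: ch.value / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts(target: int, iterations: int, rng: random.Random, c: float = 1.4) -> tuple[int, ...] | None:
    """Return the highest-scoring complete response found during the search."""
    root = Node(state=())
    best: tuple[int, ...] | None = None
    best_score = -1.0
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded, non-terminal nodes.
        while not node.is_terminal() and len(node.children) == len(STEPS):
            node = node.uct_child(c)
        # 2. Expansion: add one untried step as a new child.
        if not node.is_terminal():
            step = rng.choice([s for s in STEPS if s not in node.children])
            child = Node(state=node.state + (step,), parent=node)
            node.children[step] = child
            node = child
        # 3. Simulation: finish the response randomly, then ask the critic.
        remaining = DEPTH - len(node.state)
        rollout = node.state + tuple(rng.choice(STEPS) for _ in range(remaining))
        reward = critic(target, rollout)
        if reward > best_score:
            best, best_score = rollout, reward
        # 4. Backpropagation: credit the reward to every node on the path.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    for target in imagine_prompts(3, rng):
        response = mcts(target, iterations=2000, rng=rng)
        print(f"target={target} response={response} score={critic(target, response):.2f}")
```

Each iteration runs the four classic MCTS phases (selection, expansion, simulation, backpropagation). The function returns the best complete response the critic has seen rather than the most-visited path; in a self-improvement loop of the kind the article describes, such responses would be the candidates kept for further training, though how AlphaLLM selects and uses them is not modeled here.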

Empirical results show substantial gains in mathematical reasoning. Accuracy rose from 57.8% to 92.0% on GSM8K and from 20.7% to 51.0% on MATH, improvements of 34.2 and 30.3 percentage points, respectively. These results indicate that AlphaLLM's self-improvement mechanism can markedly strengthen LLM capabilities: by combining internal feedback with search-based simulation, it delivers large task-specific gains without additional data annotations.
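The reported accuracies can be read in more than one way. The short calculation below restates each result as an absolute gain, a relative gain, and the share of the original errors removed, using only the figures quoted in the article; the metric names are our own labels.

```python
from __future__ import annotations

# Accuracies (%) before and after AlphaLLM, as quoted in the article.
RESULTS = {
    "GSM8K": (57.8, 92.0),
    "MATH": (20.7, 51.0),
}


def summarize(before: float, after: float) -> dict[str, float]:
    """Express an accuracy change three ways, rounded to one decimal place."""
    return {
        # Absolute gain in percentage points.
        "points": round(after - before, 1),
        # Gain relative to the starting accuracy, in percent.
        "relative_gain_pct": round(100 * (after - before) / before, 1),
        # Share of the original errors that were eliminated, in percent.
        "error_reduction_pct": round(100 * (after - before) / (100 - before), 1),
    }


for name, (before, after) in RESULTS.items():
    print(name, summarize(before, after))
```

On GSM8K, for example, the error rate falls from 42.2% to 8.0%, so roughly 81% of the original errors are eliminated; on MATH the absolute gain is similar, but about 38% of the errors are removed.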

Conclusion:

AlphaLLM's integration of MCTS with LLMs marks a significant advance in language model development. Its ability to improve performance autonomously through strategic planning and internal feedback points toward a new generation of self-improving AI systems. The approach could benefit industries that rely on text-based tasks, offering greater efficiency and accuracy in applications from customer service automation to content creation platforms. Companies in these sectors should follow AlphaLLM's development closely and consider integrating it into their systems to stay competitive in an increasingly AI-driven market.