- The Linux Foundation launches the Open Platform for Enterprise AI (OPEA) to foster open, modular generative AI systems.

- OPEA aims to standardize components and frameworks, enabling interoperability and accelerated deployment of AI solutions.

- Industry heavyweights like Cloudera, Intel, Red Hat, and VMware collaborate within OPEA to drive innovation in enterprise AI.

- Evaluation criteria established by OPEA ensure the performance, features, trustworthiness, and enterprise readiness of generative AI systems.

- OPEA promotes collaboration with the open-source community to refine and improve generative AI technologies.

Main AI News:

Intel and other industry leaders have committed to developing open generative AI tools tailored for enterprise applications. Can these AI systems, designed to streamline tasks such as completing reports and writing spreadsheet formulas, ever be interoperable? To explore this question, the Linux Foundation has launched the Open Platform for Enterprise AI (OPEA) project in collaboration with organizations including Cloudera and Intel.

The Linux Foundation’s announcement of OPEA signifies a concerted effort to nurture open, multi-provider, and modular generative AI frameworks. Ibrahim Haddad, Executive Director of LF AI and Data, emphasized OPEA’s mission to facilitate the creation of robust and scalable AI systems by leveraging the collective innovation of the open-source community.

Under the umbrella of OPEA, leading companies, including Cloudera, Intel, Red Hat, and VMware, are pooling their expertise to advance enterprise AI. By fostering collaboration, OPEA aims to help organizations make fuller use of open-source innovation in their AI systems.

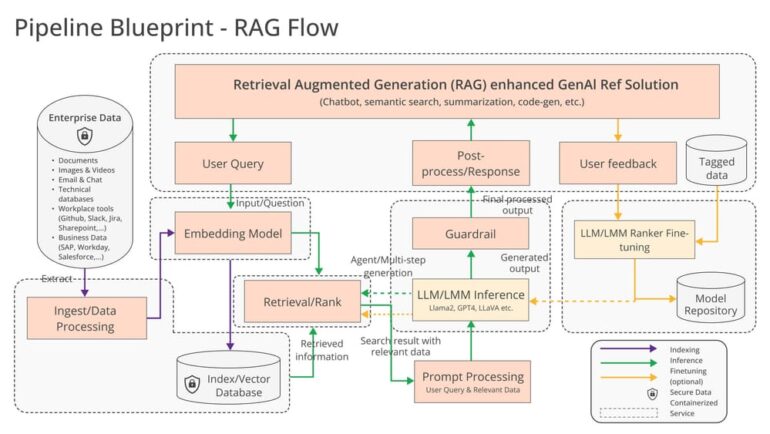

What exactly will these industry giants develop through OPEA? Haddad suggests several possibilities, such as optimizing AI toolchains and compilers for diverse hardware environments and creating heterogeneous pipelines for retrieval-augmented generation (RAG). RAG, a technique gaining traction in enterprise AI, retrieves relevant material from external information sources at query time and supplies it to the model as context, so that responses are not limited to what the model learned from its training data.
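The retrieval step behind RAG can be illustrated with a minimal Python sketch. It is not an OPEA component or reference implementation: the function names (`tokenize`, `score`, `retrieve`, `build_prompt`) and the sample documents are hypothetical, and simple word overlap stands in for the vector search a production pipeline would more likely use. The call to a generative model is deliberately left out; the sketch only shows how external text ends up in the prompt.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Toy relevance score: number of tokens shared by query and document."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(document))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, documents, k=2):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Place the retrieved documents in front of the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical enterprise documents the model was never trained on.
DOCS = [
    "The travel policy caps hotel spending at 200 dollars per night.",
    "Quarterly sales reports are due on the fifth business day.",
    "The cafeteria is closed on public holidays.",
]

QUESTION = "What is the hotel spending cap in the travel policy?"
prompt = build_prompt(QUESTION, DOCS, k=1)
print(prompt)
```

The resulting prompt would then be sent to whichever generative model the enterprise uses. Because the retriever and the model only exchange plain text in this sketch, either one could be swapped for another vendor's component, which is the kind of modularity OPEA is aiming for.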

Intel, in its press release, elaborated on OPEA’s objectives, highlighting the need for standardized components to facilitate the adoption of RAG solutions by enterprises. By establishing industry standards and best practices, OPEA aims to streamline the deployment of generative AI systems, enabling organizations to bring innovative solutions to market more efficiently.

Evaluation will be a crucial aspect of OPEA’s efforts. The project proposes a comprehensive rubric for assessing generative AI systems based on performance, features, trustworthiness, and enterprise readiness. By providing transparent criteria for evaluation, OPEA seeks to instill confidence in the reliability and effectiveness of AI deployments.

Rachel Roumeliotis, Director of Open Source Strategy at Intel, emphasized OPEA’s commitment to collaboration with the open-source community. Through shared testing protocols and assessments, OPEA aims to drive continuous improvement in generative AI technologies, ensuring they meet the evolving needs of enterprises.

Looking ahead, OPEA envisions further advancements in open model development, akin to initiatives pursued by industry leaders like Meta and Databricks. Intel has already contributed reference implementations for generative AI applications, including chatbots and document summarizers, optimized for specific hardware architectures.

While OPEA’s members are deeply invested in advancing enterprise generative AI, the ultimate test lies in their ability to collaborate effectively. Each vendor has its own commercial interests, so the success of OPEA hinges on their willingness to prioritize interoperability over vendor lock-in. Only through concerted effort can OPEA realize its vision of an inclusive and innovative AI ecosystem for enterprises worldwide.

Conclusion:

The launch of the Open Platform for Enterprise AI (OPEA) initiative signifies a concerted effort to foster collaboration and innovation in the enterprise AI space. By promoting open standards and interoperability, OPEA has the potential to drive market growth and empower organizations to leverage AI technologies more effectively. However, the ultimate success of OPEA will depend on the willingness of industry players to prioritize collaboration and embrace open-source principles in their AI initiatives.