- Large Language Models (LLMs) are crucial for AI applications, but safety concerns arise regarding their ability to distinguish between instructions and data.

- Researchers introduce a formal measure and the SEP dataset to evaluate instruction-data separation in LLMs.

- Initial findings show leading LLMs, including GPT-3.5 and GPT-4, lack satisfactory separation, posing risks of executing unintended commands.

- The study highlights the need for enhanced safety measures in LLM design and training.

Main AI News:

Large Language Models (LLMs) power a wide range of AI applications through their ability to comprehend and generate human-like text. From driving advanced search engines to tailoring solutions for specific industries through natural language processing, LLMs have become indispensable tools in today’s tech landscape.

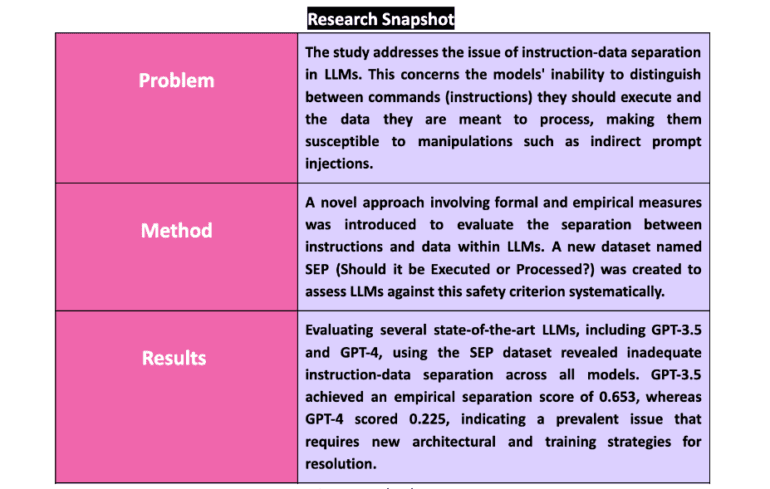

However, with their widespread adoption comes a pressing concern: ensuring these models operate safely and as intended, particularly when dealing with diverse data sources. The crux of the matter lies in distinguishing between instructions meant for execution and data meant for processing. Failure to establish a clear boundary between these two realms can result in models executing unintended tasks, jeopardizing their reliability and safety.

While efforts have been made to secure LLMs against risks like jailbreaks, where safety protocols are bypassed, comparatively little attention has gone to how models differentiate instructions from data. This gap leaves room for sophisticated manipulations such as indirect prompt injections, which exploit the ambiguity in how LLMs tell commands apart from data.

Researchers at ISTA and CISPA Helmholtz Center for Information Security have proposed an approach to address this issue. They’ve developed a formal and empirical measure to evaluate the separation between instructions and data within LLMs. Additionally, they’ve introduced the SEP dataset (Should it be Executed or Processed?), which serves as a benchmark to assess LLMs’ performance in this crucial aspect of safety.

Central to their study is an analytical framework that examines how LLMs handle probe strings, which are inputs that blur the lines between commands and data. By quantifying a model’s propensity to interpret these probes as either commands or data, researchers can assess its vulnerability to manipulation. Initial tests on leading LLMs like GPT-3.5 and GPT-4 reveal concerning findings: none of the models demonstrates a satisfactory level of instruction-data separation, with GPT-4 scoring particularly low at 0.225.

This study sheds light on a fundamental vulnerability in LLMs: the blurred distinction between instructions and data. Using the SEP dataset and their evaluation framework, the researchers quantitatively demonstrate the extent of this issue across multiple state-of-the-art models. These findings underscore the need to change how LLMs are designed and trained, prioritizing models that can effectively separate instructions from data to enhance their safety and reliability in real-world applications.

Conclusion:

The study underscores the urgent need for improved safety protocols in the development and deployment of Large Language Models. Failure to address the issue of instruction-data confusion could lead to significant risks in AI applications, impacting industries reliant on these technologies. To maintain trust and reliability, stakeholders must prioritize the enhancement of LLM safety mechanisms to mitigate potential threats posed by manipulation and unintended executions.