- UC Berkeley researchers introduce GOEX, a runtime for executing actions generated by Large Language Models (LLMs).

- GOEX provides undo and damage confinement abstractions, so unintended LLM actions can be reversed or limited in scope.

- GOEX targets challenges of ubiquitous LLM deployment, such as delayed feedback and the disruption of traditional testing methodologies.

- “Post-facto LLM validation” has a human validate the output of an LLM-generated action after it runs, rather than inspect the process that produced it.

- GOEX supports several action types, including RESTful API requests, database operations, and filesystem actions.

- Security features such as local credential storage limit the exposure of sensitive data when LLM-generated actions run.

Main AI News:

Researchers at UC Berkeley have introduced GOEX, a runtime for executing actions generated by Large Language Models (LLMs). Built around undo and damage confinement abstractions, GOEX aims to make the deployment of LLM agents in real-world applications safer and more reliable.

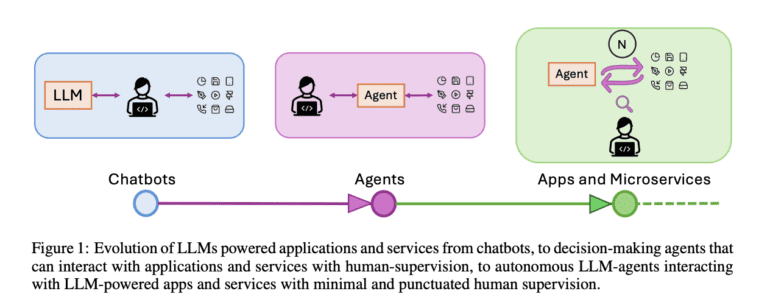

LLMs are no longer confined to dialogue systems; they now carry out a wide range of real-world tasks, and LLM-powered interactions could soon account for a large share of internet activity. However, LLM-generated code is often complex, which makes human verification before execution both necessary and difficult.

GOEX addresses this problem at the level of system design. Its undo abstraction lets an unintended action be reversed, and its damage confinement abstraction limits how much harm an action can cause, so developers can deploy LLM agents with a lower risk of lasting errors.

Ubiquitous LLM deployment raises several challenges: feedback that arrives late, signals that must be analyzed in aggregate, and the disruption of traditional testing methodologies. GOEX responds by providing a secure and flexible runtime environment for executing LLM-generated actions.

The UC Berkeley researchers propose “post-facto LLM validation” as an alternative to reviewing generated code in advance. Instead of inspecting the process that produced an action, a human validates the action’s output after it runs, which is often easier to judge. The remaining risk of an unwanted result is what the undo and damage confinement abstractions are meant to contain.
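A database transaction is one way to picture post-facto validation: the action runs, its effect is inspected, and only then is it committed or rolled back. The following is a minimal sketch using Python's standard `sqlite3` module. The helper name `execute_then_validate`, the `approve` callback, and the `accounts` table are hypothetical illustrations, not part of GOEX's actual interface.

```python
import sqlite3
from typing import Callable


def execute_then_validate(
    conn: sqlite3.Connection,
    llm_sql: str,
    approve: Callable[[sqlite3.Connection], bool],
) -> bool:
    """Run one LLM-generated statement, then keep or undo it (hypothetical helper)."""
    conn.execute("BEGIN")  # explicit transaction, so the change stays reversible
    try:
        conn.execute(llm_sql)  # the action runs first...
        keep = approve(conn)   # ...and is judged afterwards, from its visible effect
    except Exception:
        conn.execute("ROLLBACK")
        raise
    conn.execute("COMMIT" if keep else "ROLLBACK")
    return keep


# isolation_level=None selects autocommit mode, so BEGIN/COMMIT/ROLLBACK are issued by us.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE accounts (name TEXT, balance INTEGER)")
conn.execute("INSERT INTO accounts VALUES ('alice', 100)")


def balance_stays_positive(c: sqlite3.Connection) -> bool:
    # Stand-in for a human looking at the resulting state and deciding.
    (balance,) = c.execute(
        "SELECT balance FROM accounts WHERE name = 'alice'"
    ).fetchone()
    return balance > 0


kept = execute_then_validate(
    conn, "UPDATE accounts SET balance = 0", balance_stays_positive
)
final_balance = conn.execute("SELECT balance FROM accounts").fetchone()[0]
print(kept, final_balance)  # prints: False 100
```

Here the reviewer never reads the SQL itself; the decision rests on the state the statement produced. The sketch does not guard against a generated statement that ends the transaction on its own, which a real runtime would have to handle.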

GOEX’s “undo” and “damage confinement” abstractions are designed to work across different deployment contexts. They cover RESTful API requests, database operations, and filesystem actions, so the same safety mechanisms apply to each kind of LLM-generated action.
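For actions such as API requests, damage confinement can be pictured as a policy check that bounds what a generated request is allowed to touch before it is sent. The sketch below assumes a simple allowlist of hosts and HTTP methods; the class name `ConfinementPolicy` and the example URLs are hypothetical, and GOEX's own mechanism may differ.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class ConfinementPolicy:
    """Hypothetical allowlist that bounds what an LLM-generated request may do."""

    allowed_hosts: frozenset
    allowed_methods: frozenset

    def permits(self, method: str, url: str) -> bool:
        # urlsplit(...).hostname is lowercased and excludes port and credentials.
        host = urlsplit(url).hostname
        return method.upper() in self.allowed_methods and host in self.allowed_hosts


policy = ConfinementPolicy(
    allowed_hosts=frozenset({"api.example.com"}),
    allowed_methods=frozenset({"GET", "POST"}),
)

# A read on the approved host passes; a destructive verb or unknown host does not.
ok_read = policy.permits("GET", "https://api.example.com/v1/messages")
ok_delete = policy.permits("DELETE", "https://api.example.com/v1/messages/42")
ok_other_host = policy.permits("GET", "https://other.example.net/")
print(ok_read, ok_delete, ok_other_host)  # prints: True False False
```

A check like this does not make an action reversible; it only narrows the set of actions that can run at all, which is the complementary role the article attributes to damage confinement.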

GOEX is also designed to protect sensitive data. It stores credentials locally and provides secure access to database state information, which reduces the risk of unauthorized access when LLM-generated actions run.
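One way local credential storage might work in practice is for the generated request to carry only a placeholder, with the real secret filled in on the user's machine just before execution. The sketch below assumes a `{{NAME}}` placeholder syntax and an in-memory dictionary standing in for a local secret store; both are illustrative assumptions, not GOEX's documented behavior.

```python
import re

# Hypothetical placeholder syntax: {{NAME}} marks where a local secret belongs.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def inject_secrets(template: str, local_store: dict) -> str:
    """Replace each {{NAME}} with the matching value from a locally held store."""

    def lookup(match: re.Match) -> str:
        name = match.group(1)
        if name not in local_store:
            raise KeyError(f"no local credential named {name!r}")
        return local_store[name]

    return PLACEHOLDER.sub(lookup, template)


# Stand-in for a local secret store (for example an OS keychain); never sent to the model.
local_store = {"API_TOKEN": "s3cr3t"}

# The LLM-generated header refers to the credential by name only.
generated_header = "Bearer {{API_TOKEN}}"
resolved_header = inject_secrets(generated_header, local_store)
print(resolved_header)  # prints: Bearer s3cr3t
```

Under this assumption the model only ever sees the placeholder name, so a leaked prompt or completion would not expose the token itself.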

Conclusion:

UC Berkeley’s GOEX runtime is a notable step for the deployment of Large Language Models. Its undo and damage confinement abstractions, together with its security features, address existing deployment challenges and open the way to new applications. For the market, the likely effect is greater safety and reliability in LLM-powered systems, more confidence among developers, and greater trust in AI-driven solutions.