TL;DR:

- UC Berkeley introduces SERL, a software suite for robotic reinforcement learning (RL) efficiency.

- SERL integrates sample-efficient off-policy deep RL methods, reward computation tools, and environment resetting capabilities.

- The suite includes a premium controller tailored for a widely adopted robot and a diverse set of challenging tasks.

- Empirical evaluations demonstrate significant performance improvements over baseline policies.

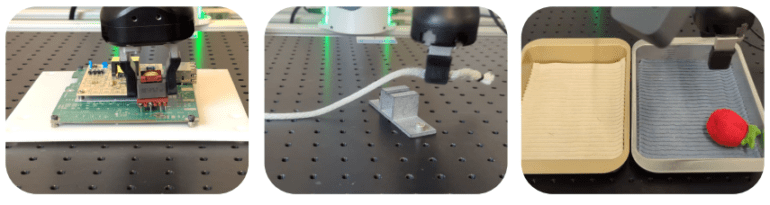

- Learned RL policies excel in tasks such as object relocation, cable routing, and PCB insertion.

- Implementation achieves highly efficient learning with average training times of 25 to 50 minutes per policy.

- Policies exhibit exceptional success rates, resilience to perturbations, and emergent recovery behaviors.

Main AI News:

In recent years, the realm of robotic reinforcement learning (RL) has witnessed remarkable strides, culminating in the development of methodologies adept at processing intricate image inputs, navigating real-world scenarios, and assimilating auxiliary data like demonstrations and prior experiences. Despite this progress, practitioners are keenly aware of the inherent challenges in effectively harnessing robotic RL, stressing that the nuanced implementation nuances of these algorithms are often pivotal, if not more so, than the algorithms themselves.

Acknowledging the formidable barrier posed by the relative inaccessibility of robotic RL methodologies, hindering their widespread utilization and evolution, a meticulously curated library has emerged. This comprehensive library integrates a sample-efficient off-policy deep RL methodology alongside tools for reward computation and environment resetting. Furthermore, it encompasses a premium controller specifically tailored for a universally adopted robot, complemented by an array of demanding sample tasks. This resource is unveiled to the community as a concerted endeavor to tackle accessibility issues head-on, furnishing a transparent exposition of its design rationale while presenting compelling empirical validations.

Upon scrutiny across 100 trials per task, the learned RL policies outshone BC policies by a significant margin, surpassing them by 1.7x for Object Relocation, 5x for Cable Routing, and an impressive 10x for PCB Insertion!

This implementation showcases a remarkable capacity for achieving highly efficient learning, yielding policies for tasks such as PCB board assembly, cable routing, and object relocation within an average training window of 25 to 50 minutes per policy. These findings mark a notable enhancement over prevailing benchmarks documented for analogous tasks in scholarly literature.

Of particular note, the policies derived from this implementation demonstrate exemplary or near-exemplary success rates, exhibiting exceptional resilience even in the face of perturbations, and exemplifying emergent recovery and corrective behaviors. Researchers are optimistic that these promising outcomes, together with the dissemination of a top-tier open-source implementation, will serve as a pivotal asset for the robotics community, catalyzing further strides in the domain of robotic RL.

Conclusion:

The introduction of SERL marks a significant advancement in the field of robotic reinforcement learning, addressing accessibility barriers and driving efficiency gains. This innovation has the potential to revolutionize various industries reliant on robotics, enabling faster deployment of robust RL solutions for complex tasks. Businesses leveraging SERL can anticipate enhanced operational efficiency, cost savings, and accelerated innovation in their robotic systems.