TL;DR:

- UC Berkeley researchers introduce SERL, a software suite for sample-efficient robotic reinforcement learning.

- The suite addresses accessibility concerns in RL with off-policy deep RL methods, reward computation tools, and a high-quality controller.

- SERL outperforms baseline policies across various tasks, with notable improvements in Object Relocation, Cable Routing, and PCB Insertion.

- Implementation achieves efficient learning with policies for tasks like PCB board assembly and cable routing within 25 to 50 minutes per policy.

- Results demonstrate high success rates, robustness against perturbations, and emergent recovery and correction behaviors.

Main AI News:

Advancements in robotic reinforcement learning (RL) have been remarkable in recent years, with researchers tackling challenges such as handling complex image observations and training in real-world scenarios. Despite this progress, the practical implementation of RL algorithms remains a daunting task, often overshadowing the algorithmic choices themselves.

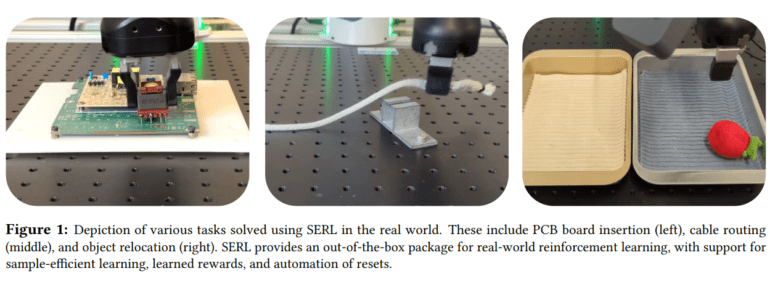

Recognizing this challenge, a meticulously crafted software suite has emerged to address the accessibility concerns in robotic RL. This suite incorporates a sample-efficient off-policy deep RL method along with tools for reward computation and environment resetting. Moreover, it features a high-quality controller customized for a widely adopted robot, accompanied by a variety of challenging example tasks. By offering transparency in design decisions and presenting compelling experimental results, this resource aims to demystify RL methods for the broader community.

Upon evaluation, RL policies learned using this suite significantly outperformed baseline policies across various tasks. For instance, in Object Relocation, the improvement was 1.7 times, while in Cable Routing and PCB Insertion, it soared to 5 and 10 times, respectively.

The implementation showcases efficient learning, achieving policies for tasks like PCB board assembly and cable routing within an average training time of 25 to 50 minutes per policy. These results mark an advancement over existing outcomes reported in the literature for similar tasks. Notably, the derived policies demonstrate high success rates, robustness against perturbations, and exhibit emergent recovery and correction behaviors.

With the release of this high-quality open-source implementation, researchers anticipate fostering further advancements in robotic RL. The promising outcomes coupled with the accessible tooling provided by SERL are poised to accelerate progress in this field, empowering researchers and practitioners alike.

Conclusion:

The introduction of SERL by UC Berkeley researchers marks a significant leap in the field of robotic reinforcement learning. By addressing accessibility concerns and showcasing remarkable performance improvements over baseline policies, this software suite has the potential to revolutionize the robotics market, enabling faster and more efficient development of robotic systems.