TL;DR:

- Deep learning is spreading across industries, from data mining to natural language processing.

- Deep neural networks face issues with accuracy and reliability.

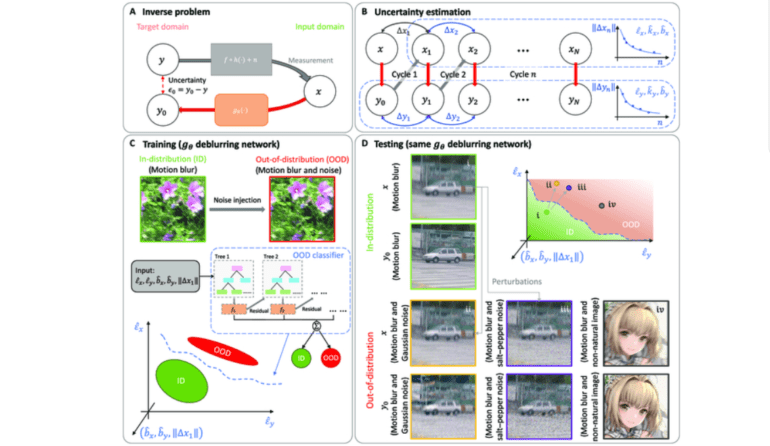

- UCLA researchers introduce an uncertainty quantification (UQ) method for estimating confidence in predictions.

- Cycle consistency-based UQ enhances reliability in inverse imaging problems.

- The method runs forward-backward cycles between a physical forward model and an iteratively trained neural network.

- Upper and lower limits for cycle consistency reveal its link to output uncertainty.

- A machine learning model categorizes images by the disturbances they exhibit across forward-backward cycles, improving classification accuracy.

- Forward-backward cycles are also used to identify out-of-distribution (OOD) images in super-resolution.

- UCLA’s approach outperforms alternative methods in identifying OOD images.

Main AI News:

Deep learning has expanded rapidly across industries, from data mining to natural language processing, and has become a transformative force. Deep neural networks are now central to solving difficult problems such as image denoising and super-resolution imaging. Yet they have limitations and can produce inaccurate or unreliable results.

In response, researchers have found that integrating uncertainty quantification (UQ) into deep learning models offers a way to gauge the confidence of their predictions. UQ lets a model flag anomalies in the data and helps guard against potential malicious attacks. However, many deep learning models lack UQ capabilities robust enough to detect shifts in data distribution at test time.

Researchers at the University of California, Los Angeles (UCLA) have devised a UQ technique based on cycle consistency, aimed at making deep neural networks more reliable on inverse imaging problems. The method quantifies the uncertainty in a network’s outputs and detects hidden data corruption and distribution shifts. At its core, it executes forward-backward cycles using a physical forward model and an iteratively trained neural network: the network maps a measurement to an estimate of the underlying image, the forward model maps that estimate back to a measurement, and the process repeats. Uncertainty is estimated from how the inputs and outputs change across these repeated cycles.
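The forward-backward cycling described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the UCLA work: `forward_model` is a hypothetical physical model (2x average-pool downsampling of a 1D signal), `toy_network` is a hand-written stand-in for a trained super-resolution network (upsample, then smooth), and `cycle_consistency` simply records how much successive inputs and outputs change from one cycle to the next.

```python
import math


def forward_model(x):
    """Hypothetical physical forward model: 2x average-pool downsampling."""
    return [(x[i] + x[i + 1]) / 2.0 for i in range(0, len(x) - 1, 2)]


def toy_network(y):
    """Stand-in for a trained super-resolution network (an assumption):
    nearest-neighbor 2x upsampling followed by a 3-tap smoothing filter."""
    up = [v for v in y for _ in range(2)]
    n = len(up)
    return [
        0.25 * up[max(i - 1, 0)] + 0.5 * up[i] + 0.25 * up[min(i + 1, n - 1)]
        for i in range(n)
    ]


def l2_distance(a, b):
    """Euclidean distance between two equal-length sequences."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def cycle_consistency(y0, n_cycles=5):
    """Run forward-backward cycles starting from measurement y0.

    Returns two lists of length n_cycles: distances between successive
    network outputs, and distances between successive network inputs.
    """
    y_prev = y0
    x_prev = toy_network(y_prev)        # backward step: measurement -> estimate
    out_diffs, in_diffs = [], []
    for _ in range(n_cycles):
        y_next = forward_model(x_prev)  # forward step: estimate -> measurement
        x_next = toy_network(y_next)    # backward step again
        in_diffs.append(l2_distance(y_next, y_prev))
        out_diffs.append(l2_distance(x_next, x_prev))
        x_prev, y_prev = x_next, y_next
    return out_diffs, in_diffs


if __name__ == "__main__":
    out_diffs, in_diffs = cycle_consistency([0.0, 1.0, 0.0, 1.0])
    print("output changes per cycle:", [round(d, 4) for d in out_diffs])
    print("input changes per cycle: ", [round(d, 4) for d in in_diffs])
```

In this toy setting, a constant signal passes through the cycle unchanged, so every distance is zero, while an oscillating signal changes on each pass. Per-cycle distances of this kind are one plausible form for the cycle consistency quantities the article refers to; the exact metrics used by the researchers are not given here.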

The researchers derived upper and lower bounds for cycle consistency that make explicit its relationship to the uncertainty in a neural network’s outputs. The bounds are built on expressions for both converging and diverging cycle outputs, and they allow uncertainty to be estimated even when ground truth data is unavailable. The researchers also developed a machine learning model that categorizes images according to the disturbances they exhibit across forward-backward cycles. Using cycle consistency metrics improved the accuracy of the final classifications.

The UCLA researchers also addressed the identification of out-of-distribution (OOD) images in image super-resolution. They gathered three categories of low-resolution images: anime, microscopy, and human faces. Using a separate super-resolution neural network tailored to each category, they evaluated all three systems. A machine learning algorithm then used forward-backward cycles to detect mismatches in data distribution. When the anime super-resolution network was applied to other inputs, such as microscopy and facial images, the algorithm consistently flagged them as OOD. Similar outcomes were observed for the other two networks. These results indicate that the approach identifies OOD images more accurately than alternative methods.
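As a rough illustration of how cycle-based scores could drive OOD detection, the sketch below swaps in a deliberately simple rule: a threshold set at the mean plus a few standard deviations of scores measured on in-distribution images. This is not the machine learning algorithm the researchers used. The function names, the parameter `k`, and the idea of reducing each image to a single scalar cycle consistency score are all assumptions made for the example.

```python
import statistics


def fit_threshold(in_dist_scores, k=3.0):
    """Set the OOD threshold at mean + k * sample standard deviation of
    cycle consistency scores from in-distribution images.
    (A simple stand-in rule, not the classifier described in the article.)"""
    mu = statistics.mean(in_dist_scores)
    sigma = statistics.stdev(in_dist_scores)
    return mu + k * sigma


def flag_ood(scores, threshold):
    """Return True for every score that exceeds the threshold."""
    return [s > threshold for s in scores]


if __name__ == "__main__":
    # Made-up scores for illustration only; they are not measurements.
    calibration = [0.10, 0.12, 0.11, 0.09, 0.13]
    threshold = fit_threshold(calibration)
    print(flag_ood([0.11, 0.50], threshold))
```

A fixed threshold is easy to inspect, but it uses only one number per image. A learned classifier over several cycle metrics, as the article describes, can draw on more information.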

Conclusion:

UCLA’s UQ technique for deep neural networks promises greater reliability and precision across a range of applications. By giving users a measure of confidence in model outputs, especially in image processing and data analysis, it could foster wider adoption of and trust in deep learning among businesses and industries.