- R2I integrates state space models (SSMs) into model-based reinforcement learning (MBRL), improving agents’ long-term memory and decision-making.

- Extensive evaluations show R2I outperforming prior methods on memory-intensive tasks while remaining competitive on standard RL benchmarks.

- R2I builds upon DreamerV3, offering up to 9 times faster convergence while maintaining generality.

- Ablation studies support R2I’s architectural choices and confirm their effectiveness on memory-dependent tasks.

Main AI News:

Reinforcement Learning (RL) is one of the most closely watched areas of Machine Learning (ML). In RL, an agent learns by interacting with its environment, choosing actions that maximize the cumulative reward it collects over time.
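The “cumulative reward” an agent maximizes is usually formalized as a discounted return, `G_0 = r_0 + gamma*r_1 + gamma^2*r_2 + ...`. As a minimal sketch (the function name and the discount factor `gamma` are illustrative assumptions, not details taken from R2I), the return of one episode can be accumulated backwards from the last reward:

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.99) -> float:
    """Return G_0 = r_0 + gamma*r_1 + gamma^2*r_2 + ..., accumulated from the last reward backwards."""
    g = 0.0
    for r in reversed(rewards):
        # Each step adds the current reward to the discounted value of everything after it.
        g = r + gamma * g
    return g


if __name__ == "__main__":
    print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5 = 1 + 0.5*0 + 0.25*2
```

Values of `gamma` closer to 1 make the agent weigh distant rewards more heavily.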

A pivotal advancement within RL is the use of world models: models of the environment’s dynamics, typically learned from experience. Model-Based Reinforcement Learning (MBRL) uses these models to predict the outcomes of actions and optimize decisions. However, MBRL struggles with long-term dependencies, where an agent must recall observations from many steps earlier to act well.
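To make “predicting the outcomes of actions” concrete, here is a minimal sketch of planning inside a model. It assumes a hypothetical stand-in model, `toy_model`, that maps a state and action to a predicted next state and reward, and it swaps in a simple exhaustive-search planner for illustration. DreamerV3, and therefore R2I, instead train an actor and a critic on imagined trajectories, so this is not their method.

```python
import itertools
from typing import Callable, Sequence, Tuple

State = float
Action = int
# Hypothetical learned model: (state, action) -> (predicted next state, predicted reward).
Model = Callable[[State, Action], Tuple[State, float]]


def imagined_return(model: Model, state: State, actions: Sequence[Action], gamma: float = 0.99) -> float:
    """Roll an action sequence forward inside the model and sum the discounted predicted rewards."""
    total, discount = 0.0, 1.0
    for action in actions:
        state, reward = model(state, action)
        total += discount * reward
        discount *= gamma
    return total


def plan(model: Model, state: State, action_space: Sequence[Action],
         horizon: int = 4, gamma: float = 0.99) -> Action:
    """Exhaustive-search planner: imagine every action sequence, return the first action of the best one."""
    best_action, best_value = action_space[0], float("-inf")
    for seq in itertools.product(action_space, repeat=horizon):
        value = imagined_return(model, state, seq, gamma)
        if value > best_value:
            best_action, best_value = seq[0], value
    return best_action


def toy_model(state: State, action: Action) -> Tuple[State, float]:
    """Hypothetical stand-in for a learned model: action 1 moves right, action 0 moves left; staying near 0 is rewarded."""
    next_state = state + (1.0 if action == 1 else -1.0)
    return next_state, -abs(next_state)


if __name__ == "__main__":
    print(plan(toy_model, 3.0, [0, 1]))   # 0: move left, toward 0
    print(plan(toy_model, -3.0, [0, 1]))  # 1: move right, toward 0
```

Exhaustive search grows exponentially with the horizon, which is one reason practical MBRL agents learn a policy in imagination rather than enumerating action sequences.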

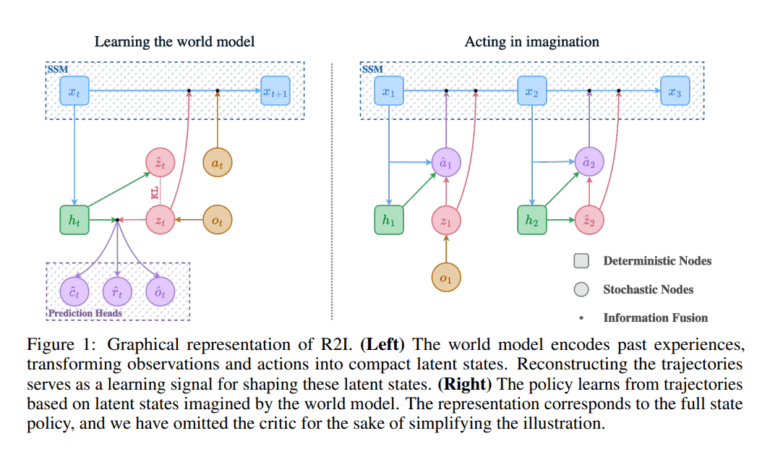

Enter “Recall to Imagine” (R2I), a method proposed by a team of researchers to address this challenge. R2I incorporates state space models (SSMs) into MBRL to improve agents’ long-term memory and credit assignment. By linking events separated by long stretches of time, R2I equips agents to handle complex tasks that demand temporal coherence.

Extensive evaluations show R2I’s strengths across diverse scenarios. It outperforms competing methods on memory-intensive tasks such as POPGym and Memory Maze, while remaining competitive on standard RL benchmarks such as DMC (DeepMind Control Suite) and Atari. Notably, it converges faster than DreamerV3, the leading MBRL approach, which strengthens the case for using it in real-world applications.

Key contributions of R2I include building on DreamerV3 and enhancing its memory with a modified version of S4. In addition, rigorous evaluations, including ablation studies, shed light on the efficiency of R2I’s architecture and the sources of its performance advantage.
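SSMs in the S4 family carry memory through a linear recurrence over a hidden state, and the same computation can be expressed as one long convolution. The sketch below is a simplified, real-valued diagonal SSM in plain Python, assuming zero-order-hold discretization; it is not R2I’s modified S4 layer, and all names (`discretize`, `ssm_recurrent`, `ssm_convolutional`) are hypothetical. It shows a slowly decaying mode still responding to an input from 200 steps earlier, and the recurrent and convolutional views producing the same outputs.

```python
import math
from typing import List, Tuple


def discretize(a: List[float], b: List[float], dt: float) -> Tuple[List[float], List[float]]:
    """Zero-order-hold discretization of a diagonal SSM x'(t) = a*x(t) + b*u(t).

    Each a_n must be nonzero (negative for a stable, decaying mode).
    """
    a_bar = [math.exp(dt * an) for an in a]
    b_bar = [(abn - 1.0) / an * bn for abn, an, bn in zip(a_bar, a, b)]
    return a_bar, b_bar


def ssm_recurrent(a_bar, b_bar, c, inputs):
    """Step-by-step view: x_k = a_bar * x_{k-1} + b_bar * u_k, y_k = sum_n c_n * x_k[n]."""
    x = [0.0] * len(a_bar)
    ys = []
    for u in inputs:
        x = [an * xn + bn * u for an, xn, bn in zip(a_bar, x, b_bar)]
        ys.append(sum(cn * xn for cn, xn in zip(c, x)))
    return ys


def ssm_convolutional(a_bar, b_bar, c, inputs):
    """Whole-sequence view: y_k = sum_j K_j * u_{k-j}, with kernel K_j = sum_n c_n * a_bar_n**j * b_bar_n."""
    length = len(inputs)
    kernel = [sum(cn * an**j * bn for cn, an, bn in zip(c, a_bar, b_bar)) for j in range(length)]
    return [sum(kernel[j] * inputs[k - j] for j in range(k + 1)) for k in range(length)]


if __name__ == "__main__":
    # One slow mode (long memory) and one fast mode (short memory); values are illustrative.
    a_bar, b_bar = discretize(a=[-0.01, -1.0], b=[1.0, 1.0], dt=1.0)
    c = [1.0, 1.0]
    impulse = [1.0] + [0.0] * 200  # a single input at step 0, then 200 silent steps
    y_rec = ssm_recurrent(a_bar, b_bar, c, impulse)
    y_conv = ssm_convolutional(a_bar, b_bar, c, impulse)
    print(round(y_rec[-1], 2))  # 0.13
    print(max(abs(p - q) for p, q in zip(y_rec, y_conv)) < 1e-9)  # True
```

The slow mode decays by roughly 1% per step, so about 13% of the impulse survives 200 steps later, while the fast mode has effectively vanished. The recurrent form suits step-by-step inference; the convolutional form lets training process a whole sequence at once.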

Conclusion:

The introduction of R2I marks a significant advance in reinforcement learning. By strengthening long-term memory and decision-making while remaining efficient and general, it gives businesses an opportunity to apply advanced machine learning to tasks that depend on remembering what happened long ago. R2I’s strong results in memory-intensive domains and its fast convergence make it a promising candidate for real-world applications across various industries. Businesses that integrate such techniques into their operations stand to gain a competitive edge, making better-informed decisions and tackling complex, long-horizon challenges with greater precision.