- Traditional regression methods struggle with the complexity of real-world experiments.

- OmniPred, a collaborative effort by Google DeepMind, Carnegie Mellon University, and Google, introduces a new regression framework.

- It transforms language models into versatile regressors, utilizing textual representations of parameters for precise metric prediction.

- OmniPred surpasses traditional models in adaptability and accuracy, thanks to its reliance on textual inputs and multi-task learning.

- Through experimentation with Google Vizier’s dataset, OmniPred demonstrates significant improvements over baseline models.

Main AI News:

Traditional regression methods have long been the standard tool for predicting outcomes from a set of parameters. Their effectiveness often drops, however, when they face the complexity of real-world experiments. The challenge is not only prediction itself but building a single tool that adapts across a wide range of tasks, each with its own parameters and outcomes, without task-specific tailoring.

Various regression tools address this problem, using statistical methods and neural networks to estimate outcomes from input parameters. Gaussian Processes, tree-based methods, and neural networks each perform well within their niches, but they struggle to generalize across heterogeneous experiments or to support multi-task learning, and they often require elaborate feature engineering or careful normalization to work well.

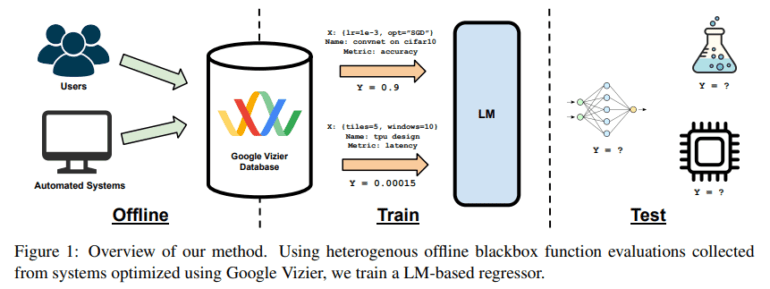

Enter OmniPred, a framework developed by researchers at Google DeepMind, Carnegie Mellon University, and Google. It recasts language models as general-purpose, end-to-end regressors. OmniPred works from textual representations of mathematical parameters and values, which lets it predict metrics across many different experimental designs. Drawing on the large dataset from Google Vizier, OmniPred is reported to outperform traditional regression models in both adaptability and precision.
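To make the idea of textual representations concrete, here is a minimal sketch of how parameters and a metric value might be turned into text and tokens. The `key:value` layout, the sign/digit/exponent token scheme, and the function names `serialize_params`, `encode_metric`, and `decode_metric` are assumptions chosen for illustration; the article does not specify OmniPred's actual format.

```python
def serialize_params(params):
    """Render a parameter dict as one text line (hypothetical key:value layout)."""
    return ",".join(f"{key}:{params[key]}" for key in sorted(params))


def encode_metric(value, mantissa_digits=3):
    """Split a float into sign, digit, and exponent tokens (illustrative scheme)."""
    if value == 0:
        return ["<+>"] + ["<0>"] * mantissa_digits + ["<E0>"]
    sign = "<+>" if value > 0 else "<->"
    # Scientific notation with the requested number of significant digits,
    # e.g. 0.0123 with 3 digits becomes '1.23e-02'.
    mantissa_str, exp_str = f"{abs(value):.{mantissa_digits - 1}e}".split("e")
    digits = mantissa_str.replace(".", "")
    # Shift the exponent so the digits read as an integer mantissa.
    exponent = int(exp_str) - (mantissa_digits - 1)
    return [sign] + [f"<{d}>" for d in digits] + [f"<E{exponent}>"]


def decode_metric(tokens):
    """Invert encode_metric: rebuild the float from its tokens."""
    sign = 1.0 if tokens[0] == "<+>" else -1.0
    digits = "".join(token[1:-1] for token in tokens[1:-1])
    exponent = int(tokens[-1][2:-1])
    return sign * int(digits) * 10.0 ** exponent


prompt = serialize_params({"lr": 0.001, "batch_size": 128})
target_tokens = encode_metric(0.0123)
```

Because both the input and the output are plain token sequences, a sequence model can in principle be trained on such pairs without a fixed-length numeric feature vector, which is the property the article attributes to the framework.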

At the core of OmniPred is a simple yet scalable metric prediction framework that drops constraint-dependent representations in favor of general textual inputs. This lets OmniPred handle varied experimental data with high precision. Multi-task learning further improves its performance: by learning from textual and token-based representations across many tasks, it can outperform conventional regression models.
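The multi-task setup can be sketched as follows. Once every trial is rendered as text, studies with entirely different parameter spaces can share one training set with no per-task feature engineering. The task names, parameter names, and metric values here are made-up toy data, and `to_prompt` and `build_mixed_dataset` are hypothetical helpers, not part of any OmniPred release.

```python
import random


def to_prompt(task_name, params):
    """Prefix the serialized parameters with a task tag (assumed layout)."""
    fields = ",".join(f"{key}:{params[key]}" for key in sorted(params))
    return f"task:{task_name};{fields}"


def build_mixed_dataset(studies, seed=0):
    """Flatten trials from all studies into shuffled (prompt, target) text pairs."""
    examples = []
    for task_name, trials in studies.items():
        for params, metric in trials:
            examples.append((to_prompt(task_name, params), str(metric)))
    # Shuffle so a training batch mixes tasks rather than seeing one at a time.
    random.Random(seed).shuffle(examples)
    return examples


# Toy studies with unrelated parameter spaces (illustrative values only).
studies = {
    "image_classifier": [
        ({"lr": 0.01, "batch_size": 64}, 0.91),
        ({"lr": 0.1, "batch_size": 32}, 0.84),
    ],
    "chemical_yield": [
        ({"temperature_c": 80, "solvent": "ethanol"}, 12.5),
    ],
}
dataset = build_mixed_dataset(studies)
```

Note that the two studies share no parameter names or types, yet both produce the same kind of training example. A tabular regressor would typically need a separate feature encoding for each.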

The framework's ability to process textual data, together with its scalability, points to a new direction in metric prediction. In extensive experiments on Google Vizier's dataset, OmniPred showed substantial improvement over baseline models, underscoring the value of multi-task learning and the potential of fine-tuning to improve accuracy on unseen tasks.

Conclusion:

OmniPred marks a notable shift in experimental design and regression modeling. Its ability to outperform traditional methods in adaptability and accuracy opens new possibilities for predictive analytics. For businesses and researchers seeking more precise and efficient experimental outcomes, it offers clear opportunities and strengthens the case for integrating advanced machine learning frameworks into their workflows.