- Large Language Models (LLMs) have revolutionized Natural Language Processing (NLP) with their advanced text generation and translation capabilities.

- Recent trends show an increase in the size of context windows in LLMs, with models like Llama 2, GPT-4 Turbo, and Gemini 1.5 handling significantly larger token counts.

- Evaluating LLM capabilities, particularly in recall performance, is crucial for selecting the right model. Tools like benchmark leaderboards and innovative evaluation techniques aid in this process.

- The needle-in-a-haystack method is used to assess recall performance, revealing dependencies on prompt content and potential biases in training data.

- Adjustments to LLM architecture, training, and fine-tuning can enhance recall performance, offering valuable insights for LLM applications.

Main AI News:

The evolution of Large Language Models (LLMs) has transformed Natural Language Processing (NLP), enabling notable advances in text generation and machine translation. Central to these models is their capacity to extract and analyze information from textual inputs and deliver contextually appropriate outputs. Context windows have grown steadily: Llama 2 operates at 4,096 tokens, while GPT-4 Turbo and Gemini 1.5 handle 128,000 and 10M tokens, respectively. However, the benefits of longer context windows depend heavily on the LLM’s ability to recall information from them consistently.

As LLMs proliferate, assessing their capabilities becomes essential to selecting the most suitable model. New tools and methods, including benchmark leaderboards, evaluation software, and novel evaluation techniques, have emerged to address this challenge. In LLM evaluation, “recall” measures a model’s ability to retrieve a factoid placed at various positions within a prompt, and it is gauged with the needle-in-a-haystack approach. Unlike conventional metrics used for Information Retrieval systems in Natural Language Processing, LLM recall is assessed across multiple needles for a more comprehensive evaluation.

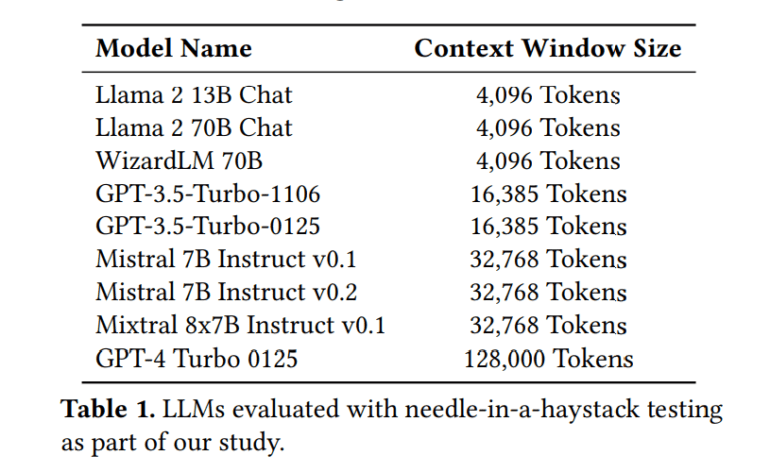

Researchers from VMware NLP Lab examine the recall performance of various LLMs using the needle-in-a-haystack methodology. Factoids (the needles) are hidden within filler text (the haystacks) for the model to retrieve. Recall is evaluated across haystack lengths and needle placements to identify underlying patterns. The findings show that recall depends on the content of the prompt and may be affected by biases in training data. Changes in architecture, training methods, or fine-tuning can improve performance, offering useful insights for practical LLM applications.
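To make the idea of hiding a needle in a haystack concrete, here is a minimal Python sketch. It is an illustration only, not the researchers' code: the function name `insert_needle`, the naive sentence splitting on `". "`, and the sample factoid are all assumptions made for this example.

```python
def insert_needle(haystack: str, needle: str, depth_percent: float) -> str:
    """Place `needle` roughly `depth_percent` of the way into `haystack`.

    The needle is snapped to a sentence boundary so the surrounding text
    still reads naturally. Sentence splitting here is deliberately naive
    (it assumes sentences end with ". "); this is a simplification.
    """
    if not 0 <= depth_percent <= 100:
        raise ValueError("depth_percent must be between 0 and 100")

    # Split into sentences and drop trailing periods so we can re-join cleanly.
    sentences = [s.strip().rstrip(".") for s in haystack.split(". ") if s.strip()]

    # Convert the depth percentage into a sentence index (0 = start, 100 = end).
    index = round(len(sentences) * depth_percent / 100)
    sentences.insert(index, needle.strip().rstrip("."))

    return ". ".join(sentences) + "."


# Hypothetical filler text and factoid, for illustration only.
filler = "The sky was grey. The train was late. Nobody minded. The day went on."
print(insert_needle(filler, "The secret code is 4217.", 50))
# The sky was grey. The train was late. The secret code is 4217. Nobody minded. The day went on.
```

A depth of 0 puts the factoid at the very start of the haystack and a depth of 100 puts it at the very end.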

The methodology measures recall by inserting a single needle into a haystack of filler text and prompting the model to retrieve it. Haystack length, measured in tokens, and needle depth, expressed as a percentage, are varied systematically to test the robustness of recall and expose performance patterns. Results are visualized as heatmaps. For most models, the tests cover 35 different haystack lengths and placements, adjusted to emulate natural text flow. Each prompt consists of a system message, a haystack containing the needle, and a retrieval question.
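The sweep over haystack lengths and needle depths can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the study's actual harness: whitespace-separated words stand in for tokens, a substring check stands in for whatever scoring the researchers used, and the names `build_prompt`, `recall_grid`, and `toy_model`, along with the system message and question wording, are hypothetical.

```python
from typing import Callable, List

SYSTEM_MESSAGE = "You are a helpful assistant."               # hypothetical wording
QUESTION = "What is the secret code mentioned in the text?"   # hypothetical wording


def build_prompt(filler_words: List[str], needle: str,
                 length: int, depth_percent: float) -> str:
    """Assemble system message + haystack (with needle) + retrieval question."""
    words = filler_words[:length]              # trim the haystack to `length` words
    position = int(len(words) * depth_percent / 100)
    haystack = " ".join(words[:position] + [needle] + words[position:])
    return f"{SYSTEM_MESSAGE}\n\n{haystack}\n\n{QUESTION}"


def recall_grid(model: Callable[[str], str], filler_words: List[str],
                needle: str, answer: str,
                lengths: List[int], depths: List[float]) -> List[List[int]]:
    """Return a matrix (rows = depths, columns = lengths) of 1/0 recall results."""
    grid = []
    for depth in depths:
        row = []
        for length in lengths:
            reply = model(build_prompt(filler_words, needle, length, depth))
            row.append(int(answer in reply))   # naive scoring: substring match
        grid.append(row)
    return grid


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM call: it only 'sees' the first 50 words of the prompt."""
    window = " ".join(prompt.split()[:50])
    return "4217" if "4217" in window else "I don't know"


filler = ["filler"] * 200
grid = recall_grid(toy_model, filler, "The secret code is 4217.", "4217",
                   lengths=[20, 100], depths=[0, 50, 100])
print(grid)  # [[1, 1], [1, 0], [1, 0]]
```

Each cell of the resulting matrix corresponds to one cell of a heatmap, so the matrix could be passed to any plotting library to visualize where recall breaks down. In a real run, `toy_model` would be replaced by a call to the model under test.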

A comparison of recall performance across nine models on three distinct tests shows that even a minor change to a single sentence in a prompt that fills a context window can significantly affect an LLM’s recall. Increasing the parameter count improves recall, as seen with Llama 2 13B and Llama 2 70B. The analysis of Mistral indicates that changes in architecture and training strategy can enhance recall. Results for WizardLM and GPT-3.5 Turbo suggest that fine-tuning can also strengthen the recall capabilities of these models.

Conclusion:

The evolving capabilities of Large Language Models, particularly in recall performance, signify a shift in the NLP market towards more nuanced evaluation metrics and optimization strategies. Understanding and leveraging these capabilities will be essential for businesses seeking to deploy LLMs effectively in various applications, from customer service chatbots to content generation platforms.