TL;DR:

- Deep Neural Networks (DNNs) are a potent tool in AI, but their computational complexity presents challenges.

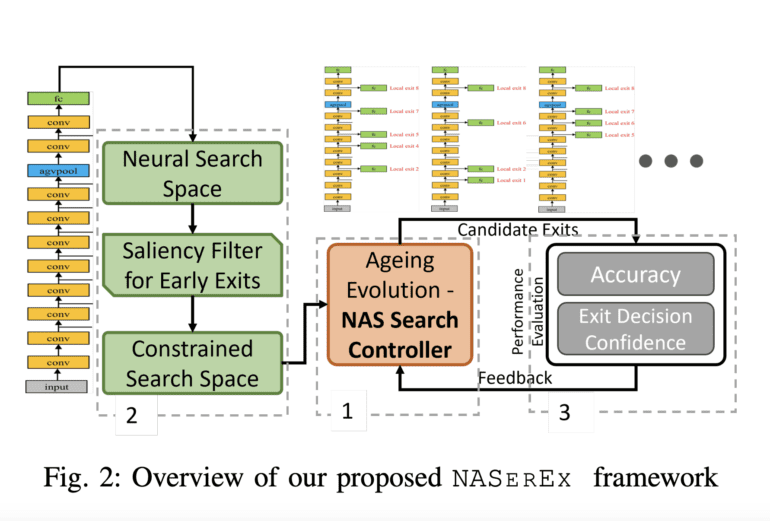

- Researchers from Cornell, Sony, and Qualcomm have introduced NASerEx, a neural architecture search framework.

- NASerEx automates the discovery of efficient early exit structures for DNNs.

- It offers task-specific, adaptable inference for core models dealing with extensive image streams.

- The framework simplifies exit gates to minimize computational complexity.

- Although exit gates could theoretically be placed anywhere, the complexity of contemporary DNNs makes unrestricted placement challenging.

- Striking a balance between comprehensive search space exploration and limited computational resources is crucial.

- NASerEx’s versatility applies to various model types and tasks, both discriminative and generative.

- Ongoing efforts include expanding the framework’s implementation and empowering developers and designers to enhance networks.

Main AI News:

Artificial Intelligence (AI) has undeniably reshaped the landscape of technology and innovation. In particular, Deep Neural Networks (DNNs), a subset of artificial neural networks, have emerged as a formidable force, capable of unraveling complex data patterns and correlations. These intricate networks, composed of multiple interconnected layers, have paved the way for breakthroughs in computer vision, natural language processing, and pattern recognition.

However, with great power comes great computational complexity. Scaling these DNNs to achieve optimal operational efficiency in AI applications, especially on resource-constrained hardware, presents a formidable challenge. The quest for a solution has brought together researchers from Cornell University, Sony Research, and Qualcomm.

Their mission is to maximize operational efficiency in machine learning models designed to handle large-scale data streams. Focusing on embedded AI applications, they set out to explore the untapped potential of early exits in optimizing DNNs.

Enter NASerEx, a Neural Architecture Search (NAS) framework tailored for discovering the most effective early exit structure. This ingenious approach offers an automated solution to enhance task-specific, efficient, and adaptable inference for core models dealing with substantial image streams. The team also presents a robust metric ensuring precise early exit determinations for input stream samples, coupled with an implementable strategy for industrial-scale deployment.

Crucially, their optimization problem does not depend on the features of any specific baseline model, which leaves the choice of model open. The exit gates themselves are kept deliberately simple so that they add minimal computational overhead.
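To make the idea of an early exit concrete, here is a minimal Python sketch. It is not the NASerEx implementation: the article does not specify the team's exit metric, so the sketch assumes the simplest common choice, the maximum softmax probability compared against a fixed threshold. The names `early_exit_predict`, `stages`, `gates`, and `final_head` are hypothetical, and the "network" is a toy built from plain functions.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    x: Vector,
    stages: Sequence[Layer],
    gates: Sequence[Optional[Layer]],
    final_head: Layer,
    threshold: float = 0.9,
) -> Tuple[int, int]:
    """Run the stages in order and stop at the first confident gate.

    Returns (predicted_class, exit_index). An exit_index equal to
    len(stages) means the sample travelled through the whole network.
    ASSUMPTION: confidence = max softmax probability (not from the article).
    """
    h = x
    for i, (stage, gate) in enumerate(zip(stages, gates)):
        h = stage(h)
        if gate is None:  # no exit gate attached after this stage
            continue
        probs = softmax(gate(h))
        confidence = max(probs)
        if confidence >= threshold:
            return probs.index(confidence), i
    probs = softmax(final_head(h))
    return probs.index(max(probs)), len(stages)


# Toy two-stage "network": each stage doubles its input, and the single
# gate (after stage 0) reads the hidden vector directly as class logits.
def double(h: Vector) -> Vector:
    return [2.0 * v for v in h]


def identity(h: Vector) -> Vector:
    return list(h)


demo_stages = [double, double]
demo_gates = [identity, None]

easy = early_exit_predict([3.0, 0.0], demo_stages, demo_gates, identity)
hard = early_exit_predict([0.1, 0.2], demo_stages, demo_gates, identity)
print("easy sample (class, exit):", easy)
print("hard sample (class, exit):", hard)
```

In this toy setup the clearly separable input leaves at the first gate, while the ambiguous one runs to the final head. That is the saving early exits aim for: easy samples skip the later, more expensive layers, and the gate itself stays cheap.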

In an ideal world, these exit gates could be placed anywhere within the network. However, the intricacies of contemporary DNNs introduce challenges, primarily due to the constraints of discrete search spaces.

Yet, there exists a delicate equilibrium between exploring the search space comprehensively and managing the computational costs associated with NAS. The limited resources available for training, divided between extensive dataset loading and executing the search algorithm, pose a significant hurdle.
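The trade-off between covering the search space and staying within a compute budget can be illustrated with a toy example. The sketch below is not the NASerEx search algorithm: it swaps in plain random search and compares it with exhaustive enumeration over a discrete set of gate positions. Every number in it (the per-layer costs, the gate overhead, and the fraction of samples assumed confident by each layer) is made up for illustration, and all function names are hypothetical.

```python
import random
from typing import Sequence, Tuple

Placement = Tuple[int, ...]


def expected_cost(
    placement: Placement,
    layer_costs: Sequence[float],
    exit_fraction: Sequence[float],
    gate_cost: float,
) -> float:
    """Average per-sample cost for gates placed after the given layers.

    ASSUMPTION: exit_fraction[i] is the cumulative share of samples that
    are confident enough to exit if a gate sits after layer i.
    """
    total, exited, gates_seen = 0.0, 0.0, 0
    for i in sorted(placement):
        gates_seen += 1
        newly_exiting = max(exit_fraction[i] - exited, 0.0)
        cost_so_far = sum(layer_costs[: i + 1]) + gates_seen * gate_cost
        total += newly_exiting * cost_so_far
        exited += newly_exiting
    # Remaining samples pay for every layer and every gate they passed.
    total += (1.0 - exited) * (sum(layer_costs) + gates_seen * gate_cost)
    return total


def exhaustive_search(layer_costs, exit_fraction, gate_cost):
    """Evaluate all 2^(n-1) placements; only feasible for tiny networks."""
    n = len(layer_costs) - 1  # no gate after the final layer
    best = None
    for mask in range(2 ** n):
        placement = tuple(i for i in range(n) if (mask >> i) & 1)
        cand = (expected_cost(placement, layer_costs, exit_fraction, gate_cost), placement)
        best = cand if best is None else min(best, cand)
    return best


def budgeted_random_search(layer_costs, exit_fraction, gate_cost, budget, seed=0):
    """Evaluate at most `budget` placements, starting from the no-exit baseline."""
    rng = random.Random(seed)
    n = len(layer_costs) - 1
    best = (expected_cost((), layer_costs, exit_fraction, gate_cost), ())
    for _ in range(budget - 1):
        placement = tuple(i for i in range(n) if rng.random() < 0.5)
        cand = (expected_cost(placement, layer_costs, exit_fraction, gate_cost), placement)
        best = min(best, cand)
    return best


# Made-up six-layer network with unit layer costs.
demo_costs = [1.0] * 6
demo_fraction = [0.0, 0.2, 0.5, 0.6, 0.8]
demo_gate_cost = 0.1

print("exhaustive:", exhaustive_search(demo_costs, demo_fraction, demo_gate_cost))
print("budget of 8:", budgeted_random_search(demo_costs, demo_fraction, demo_gate_cost, budget=8))
```

With five candidate positions the exhaustive search needs only 32 evaluations, but the count doubles with every added position, which is why a budgeted strategy becomes necessary for realistic networks. The budgeted result can never be better than the exhaustive optimum, and because it starts from the no-exit baseline it can never be worse than running the full network.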

The methodology is also versatile: it applies to various model types and tasks, whether discriminative or generative. The researchers’ ongoing and future work includes extending the framework’s implementation, empowering developers and designers to craft exit-enhanced networks, employing post-pruning techniques across diverse model types and datasets, and conducting thorough evaluations.

Conclusion:

NASerEx presents a groundbreaking solution to the challenge of AI efficiency. Its potential impact on the market lies in its ability to optimize AI models for resource-constrained hardware, unlocking new possibilities for AI applications across industries. This innovation could lead to more efficient and cost-effective AI solutions, driving further adoption and innovation in the AI market.