- Large language models (LLMs) perform well across diverse tasks, and enhancing their reasoning is crucial to further development.

- Common methods to boost reasoning scale up model size or lengthen the context, e.g., through chain-of-thought prompting, retrieval-augmented generation, or example-based prompting.

- Studies on LLMs explore mechanistic frameworks, pattern analysis, and domain-specific approaches.

- Tenyx’s research focuses on transformer layers, highlighting the significance of token interaction density in the multi-head attention (MHA) module.

- The study correlates increased model size and context length with heightened attention density and improved reasoning capabilities.

- Analysis of the self-attention block’s intrinsic dimension and attention head graph density aims to deepen understanding of LLMs’ behavior.

Main AI News:

The research from Tenyx presents a geometric analysis of large language models (LLMs), focusing on their reasoning capabilities. It notes that LLMs have advanced significantly across a range of tasks, with reasoning a pivotal area for further development. Enhancing reasoning often involves increasing model size, or increasing context length through techniques like chain-of-thought prompting, retrieval-augmented generation, and example-based prompting. These methods are effective, but they also raise computational costs and inference latency in practical applications.

Prior studies have approached LLM capabilities in different ways. Some employ mechanistic frameworks or analyze patterns in empirical results, while others focus on input-output relationships using domain-specific tasks such as graph problems and algorithmic reasoning. Researchers have also examined initialization, training dynamics, and embedding geometry in the intermediate and final layers of transformers. However, these approaches often lack a holistic geometric perspective and do not fully account for how the sequence dimension affects LLMs’ reasoning, especially as model size and context length grow.

Tenyx’s study examines transformer layers in LLMs to pinpoint the key factors behind their expressive power. It identifies the density of token interactions in the multi-head attention (MHA) module as critical, because this density reflects the complexity of the functions that the subsequent multi-layer perceptrons (MLPs) can represent. The study also finds that increased model size and context length correlate with higher attention density and improved reasoning. By analyzing the intrinsic dimension of the self-attention block and the graph density of each attention head, Tenyx aims to characterize the expressive potential of LLMs and deepen understanding of their behavior, which could inform further advancements.
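To make "graph density of an attention head" more concrete, here is a minimal Python sketch under stated assumptions: a head's causal attention matrix is treated as a directed graph with an edge from token i to token j whenever the attention weight exceeds a threshold, and density is the fraction of causally allowed pairs that become edges. The functions `causal_attention` and `attention_graph_density` and the 0.05 threshold are hypothetical illustrations, not Tenyx's definition or code.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def causal_attention(q, k):
    """Scaled dot-product attention weights for one head, with a causal mask.

    q, k: lists of n query/key vectors of dimension d (hypothetical inputs).
    Returns an n x n matrix; entries above the diagonal are 0.
    """
    n, d = len(q), len(q[0])
    scale = 1.0 / math.sqrt(d)
    attn = []
    for i in range(n):
        # Token i may only attend to tokens 0..i.
        scores = [scale * sum(a * b for a, b in zip(q[i], k[j])) for j in range(i + 1)]
        attn.append(softmax(scores) + [0.0] * (n - i - 1))
    return attn


def attention_graph_density(attn, threshold=0.05):
    """Fraction of causally allowed pairs (i, j <= i) with weight > threshold.

    The threshold-based edge rule is an assumption made for illustration.
    """
    n = len(attn)
    possible = n * (n + 1) // 2  # entries on or below the diagonal
    edges = sum(1 for i in range(n) for j in range(i + 1) if attn[i][j] > threshold)
    return edges / possible


# Usage: random queries and keys for a 16-token sequence.
random.seed(0)
n, d = 16, 8
q = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
k = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
print(attention_graph_density(causal_attention(q, k)))
```

A head that spreads its attention evenly produces a dense graph, while a head that concentrates on a few tokens produces a sparse one; the threshold controls where that line is drawn.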

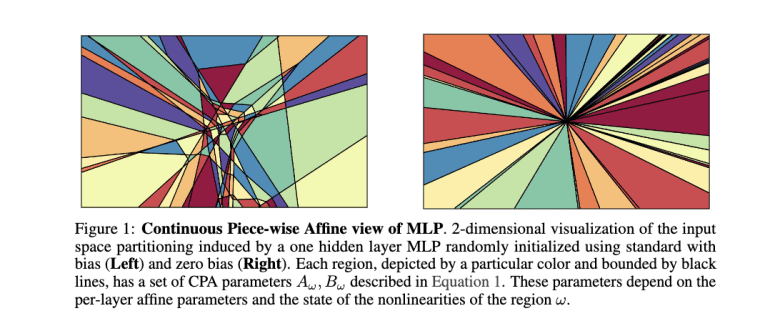

The research shows that the intrinsic dimension (ID) of the final layers correlates strongly with response correctness across model sizes. Experiments on the GSM8K-Zero dataset, using question-answer pairs and random tokens as prompt context, show that larger changes in ID are associated with higher response accuracy, particularly when the context is relevant to the question. Relevant context leads to finer partitioning around tokens and lower prediction error, pointing to more adaptive transformations within the MLPs. However, the study notes that while these geometric properties are linked to improved reasoning, their implications for the generalization and adaptability of LLMs across different contexts require further exploration.
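The article does not say how ID is estimated. As an assumption, the sketch below uses a maximum-likelihood form of the TwoNN estimator, which infers dimension from the ratio of each point's second- to first-nearest-neighbor distance: if those ratios are μ_i, the estimate is N / Σ log μ_i. In an experiment like the one described, `points` would be final-layer hidden states, one vector per token; here synthetic data with a known dimension stands in. The function name `twonn_intrinsic_dimension` is hypothetical, and this is not presented as Tenyx's method.

```python
import math
import random


def twonn_intrinsic_dimension(points):
    """Estimate intrinsic dimension from nearest-neighbor distance ratios.

    Hypothetical helper using the MLE form of TwoNN: d = N / sum(log(r2 / r1)).
    points: list of equal-length coordinate lists (e.g. one hidden state per
    token). Brute-force O(N^2); fine for a few thousand points.
    """
    if len(points) < 3:
        raise ValueError("need at least 3 points")
    log_ratios = []
    for i, p in enumerate(points):
        r1 = r2 = math.inf  # first and second nearest-neighbor distances
        for j, other in enumerate(points):
            if i == j:
                continue
            dist = math.dist(p, other)
            if dist < r1:
                r1, r2 = dist, r1
            elif dist < r2:
                r2 = dist
        if r1 > 0:  # skip exact duplicates, where the ratio is undefined
            log_ratios.append(math.log(r2 / r1))
    total = sum(log_ratios)
    if total == 0:
        raise ValueError("degenerate point cloud: cannot estimate dimension")
    return len(log_ratios) / total


# Usage on synthetic data with known dimension.
random.seed(0)
# 800 points on a 1-D line embedded in 3-D space.
line = [[t, 2 * t, -t] for t in (random.random() for _ in range(800))]
# 800 points filling a 2-D square, with a constant third coordinate.
plane = [[random.random(), random.random(), 0.0] for _ in range(800)]
print(twonn_intrinsic_dimension(line))   # expected to be near 1
print(twonn_intrinsic_dimension(plane))  # expected to be near 2
```

The estimate depends only on local neighbor distances, so it reflects how many directions the representations actually vary in, regardless of the ambient (embedding) dimension.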

Conclusion:

Tenyx’s exploration of the geometric aspects of large language models (LLMs) underscores the critical role of token interaction density and model scaling in enhancing reasoning capabilities. The research points toward ways of optimizing LLM performance using insights into attention mechanisms and geometric properties, which could influence artificial intelligence applications across various sectors.